Introducing AlmaLinux 9 on OCI Ampere A1 with Terraform

Ampere Computing is committed to providing our customers with the widest range of options possible for running cloud native workloads on Ampere® Altra® Multi Core Server Processors. These options include the choice of operating systems, such as AlmaLinux 9.

https://cloudmarketplace.oracle.com/marketplace/en_US/listing/127985893

AlmaLinux is a free, open source Linux distribution created by CloudLinux to provide a community-supported, production-grade enterprise operating system. It is a binary-compatible downstream rebuild of Red Hat Enterprise Linux (RHEL), which is used by some of the largest web hosting providers.

The CloudLinux team has been working hard to achieve an “It Just Works” experience for its customers by enabling aarch64 on Ampere Altra-based platforms. Recently, AlmaLinux OS 9 became available for use on Ampere Altra A1 shapes within the Oracle Cloud Infrastructure (OCI) Marketplace.

AlmaLinux OS 9 includes cloud-init, the same industry-standard metadata interface for instance configuration that other operating system options provide. This allows you to automate your workloads in a similar fashion to those options.

It is based on upstream Linux kernel version 5.14. This release contains enhancements around cloud and container development as well as improvements to the web console (cockpit). Additionally, release 9 delivers enhancements for security and compliance, including additional security profiles, greatly improved SELinux performance and user authentication logs.

In this post, we will build upon previous work to quickly start automating using AlmaLinux 9 on Ampere Altra processors within Oracle Cloud Infrastructure (OCI) using Ampere Altra A1 shapes.

Requirements

We will use Terraform, a DevOps tool, to launch an AlmaLinux virtual machine on the Oracle OCI Ampere A1 compute platform while passing in some metadata to configure it. Before you start:

- Install Terraform on your system.

- Sign up for an Oracle OCI “Always Free” account and find your credentials for API use.
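Before going further, it can help to confirm that the required tools are on your PATH. The following is a minimal sketch; `require_cmd` is a hypothetical helper name used only for illustration and is not part of Terraform or the module.

```shell
#!/bin/sh
# require_cmd NAME: return 0 if NAME is found on PATH,
# otherwise print a message to stderr and return 1.
# (Hypothetical helper for this walkthrough.)
require_cmd() {
    if command -v "$1" >/dev/null 2>&1; then
        return 0
    fi
    echo "missing required command: $1" >&2
    return 1
}

# Check the tools this walkthrough relies on.
for tool in terraform ssh; do
    require_cmd "$tool" || echo "install $tool before continuing" >&2
done
```

The loop only reports what is missing; it does not install anything or stop the shell.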

Using the oci-ampere-a1 terraform module

The oci-ampere-a1 terraform module supplies the minimal amount of configuration needed to quickly get working Ampere A1 shapes on OCI “Always Free”. It has been updated so that AlmaLinux can easily be selected as an option. To keep things simple from an OCI perspective, the root compartment is used (compartment id and tenancy id are the same) when launching any shapes. The module also performs the following tasks:

- Operating system image id discovery in the user region.

- Dynamically creating SSH keys to use when logging into the shape.

- Dynamically getting region, availability zone and image id.

- Creating necessary core networking configurations for the tenancy

- Rendering metadata to pass into the Ampere A1 shape.

- Launching 1 to 4 Ampere A1 shapes with metadata and SSH keys.

- Outputting IP information to connect to the shape.

Configuration with terraform.tfvars

For this walkthrough we will quickly configure Terraform using a terraform.tfvars file in the project directory. Please note that for the root compartment the Compartment OCID is the same as the Tenancy OCID. The following is an example of what terraform.tfvars should look like:

    tenancy_ocid     = "ocid1.tenancy.oc1..aaaaaaaabcdefghijklmnopqrstuvwxyz1234567890abcdefghijklmnopq"
    user_ocid        = "ocid1.user.oc1..aaaaaaaabcdefghijklmnopqrstuvwxyz0987654321zyxwvustqrponmlkj"
    fingerprint      = "a1:01:b2:02:c3:03:e4:04:10:11:12:13:14:15:16:17"
    private_key_path = "/home/bwayne/.oci/oracleidentitycloudservice_bwayne-08-09-14-59.pem"
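A mismatch between the fingerprint value and the private key is a common cause of API authentication errors. The sketch below prints the MD5 fingerprint of a key's public half so it can be compared with the value in terraform.tfvars. It assumes an unencrypted RSA key in PEM format and that `openssl` is installed; `key_fingerprint` and the default `KEY_PATH` are hypothetical names chosen for illustration.

```shell
#!/bin/sh
# key_fingerprint FILE: print the colon-separated MD5 fingerprint of the
# public key derived from the RSA private key in FILE.
# Returns 1 if openssl cannot read the key.
key_fingerprint() {
    # Fail early instead of hashing empty input when the key is unreadable.
    openssl rsa -in "$1" -noout 2>/dev/null || return 1
    openssl rsa -pubout -outform DER -in "$1" 2>/dev/null \
        | openssl md5 -c \
        | sed 's/^.*= *//'
}

# Hypothetical default path; substitute your own private_key_path value.
KEY_PATH="${KEY_PATH:-$HOME/.oci/oci_api_key.pem}"
if [ -r "$KEY_PATH" ]; then
    key_fingerprint "$KEY_PATH"
fi
```

The printed value should match the fingerprint line in terraform.tfvars character for character.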

For more information regarding how to get your OCI credentials please refer to the following reading material:

Creating the main.tf

To use the terraform module, open your favorite text editor and create a file called main.tf. Copy in the following code, which allows you to supply a custom cloud-init template to terraform:

    variable "tenancy_ocid" {}
    variable "user_ocid" {}
    variable "fingerprint" {}
    variable "private_key_path" {}

    locals {
      cloud_init_template_path = "${path.cwd}/cloud-init.yaml.tpl"
    }

    module "oci-ampere-a1" {
      source                   = "github.com/amperecomputing/terraform-oci-ampere-a1"
      tenancy_ocid             = var.tenancy_ocid
      user_ocid                = var.user_ocid
      fingerprint              = var.fingerprint
      private_key_path         = var.private_key_path
      # Optional
      # oci_vcn_cidr_block = "10.2.0.0/16"
      # oci_vcn_cidr_subnet = "10.2.1.0/24"
      oci_os_image             = "almalinux9"
      instance_prefix          = "ampere-a1-almalinux-9"
      oci_vm_count             = "1"
      ampere_a1_vm_memory      = "24"
      ampere_a1_cpu_core_count = "4"
      cloud_init_template_file = local.cloud_init_template_path
    }

    output "oci_ampere_a1_private_ips" {
      value = module.oci-ampere-a1.ampere_a1_private_ips
    }

    output "oci_ampere_a1_public_ips" {
      value = module.oci-ampere-a1.ampere_a1_public_ips
    }

Creating a cloud-init template

Using your favorite text editor, create a file named cloud-init.yaml.tpl in the same directory as the main.tf you previously created. To make things slightly more interesting, this template updates and installs some base packages using standard package management, adds a group and puts the default user in it, then adds the repositories needed to install the docker-engine along with some additional tools to use with it. Once everything is running, it starts a container running a ‘container registry’ on the host. To proceed, copy the following content into the text file and save it.

    #cloud-config
    package_update: true
    package_upgrade: true
    packages:
      - tmux
      - rsync
      - git
      - curl
      - python3
      # python36/python38 are EL8-era package names; confirm they exist
      # in your AlmaLinux 9 repositories before relying on them.
      - python36
      - python36-devel
      - python3-pip-wheel
      - python38
      - python38-devel
      - python38-pip
      - python38-pip-wheel
      - gcc
      - gcc-c++
      - nodejs
      - rust
      - gettext
      - device-mapper-persistent-data
      - lvm2
      - bzip2
    groups:
      - docker
    system_info:
      default_user:
        groups: [docker]
    runcmd:
      - alternatives --set python /usr/bin/python3.8
      - pip3.8 install -U pip
      - pip3.8 install -U setuptools-rust
      - pip3.8 install -U ansible
      - dnf config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo
      - dnf update -y
      - dnf install docker-ce docker-ce-cli containerd.io -y
      - curl -L https://github.com/docker/compose/releases/latest/download/docker-compose-linux-aarch64 -o /usr/local/bin/docker-compose-linux-aarch64
      # Mark the downloaded binary executable (+x, not -x).
      - chmod +x /usr/local/bin/docker-compose-linux-aarch64
      - ln -s /usr/local/bin/docker-compose-linux-aarch64 /usr/bin/docker-compose
      - docker-compose --version
      - pip3.8 install -U docker-compose
      - docker info
      - systemctl enable docker
      - systemctl start docker
      - docker run -d --name registry --restart=always -p 4000:5000 -v registry:/var/lib/registry registry:2
      - docker info
      - echo 'OCI Ampere A1 AlmaLinux 9 Example' >> /etc/motd
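cloud-init only treats user data as cloud-config when the very first line is `#cloud-config`, so a stray blank line or leading space at the top of the template can cause the whole file to be ignored. The following sketch checks that header before you run Terraform; `has_cloud_config_header` and the `TEMPLATE` variable are hypothetical names used for illustration.

```shell
#!/bin/sh
# has_cloud_config_header FILE: succeed only if the first line of FILE
# is exactly "#cloud-config".
has_cloud_config_header() {
    [ "$(head -n 1 "$1")" = "#cloud-config" ]
}

# TEMPLATE defaults to the file name used in this walkthrough.
TEMPLATE="${TEMPLATE:-cloud-init.yaml.tpl}"
if [ -f "$TEMPLATE" ]; then
    if has_cloud_config_header "$TEMPLATE"; then
        echo "$TEMPLATE: header ok"
    else
        echo "$TEMPLATE: first line must be #cloud-config" >&2
    fi
fi
```

This checks only the header line. If cloud-init is installed on your workstation, recent releases also offer a `cloud-init schema --config-file` subcommand that can validate the rest of the file.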

Running Terraform

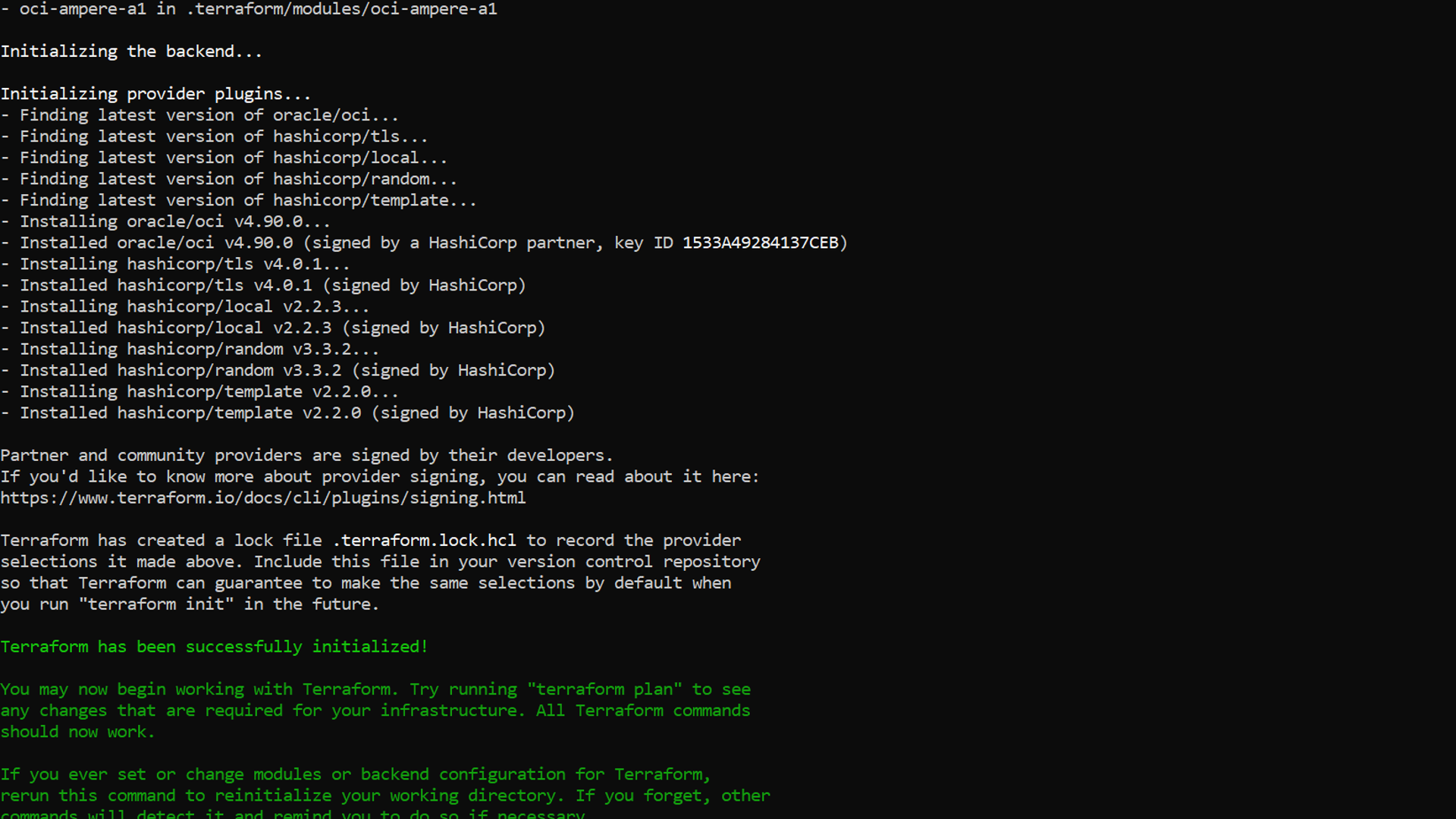

Running terraform is broken into three commands. First, initialize the terraform project so that the modules and plugins it needs are downloaded. The following command will do that:

terraform init

After ‘terraform init’ is executed, run ‘plan’ to see the tasks, steps and objects that will be created by interacting with the cloud APIs. Executing the following from a command line will do so:

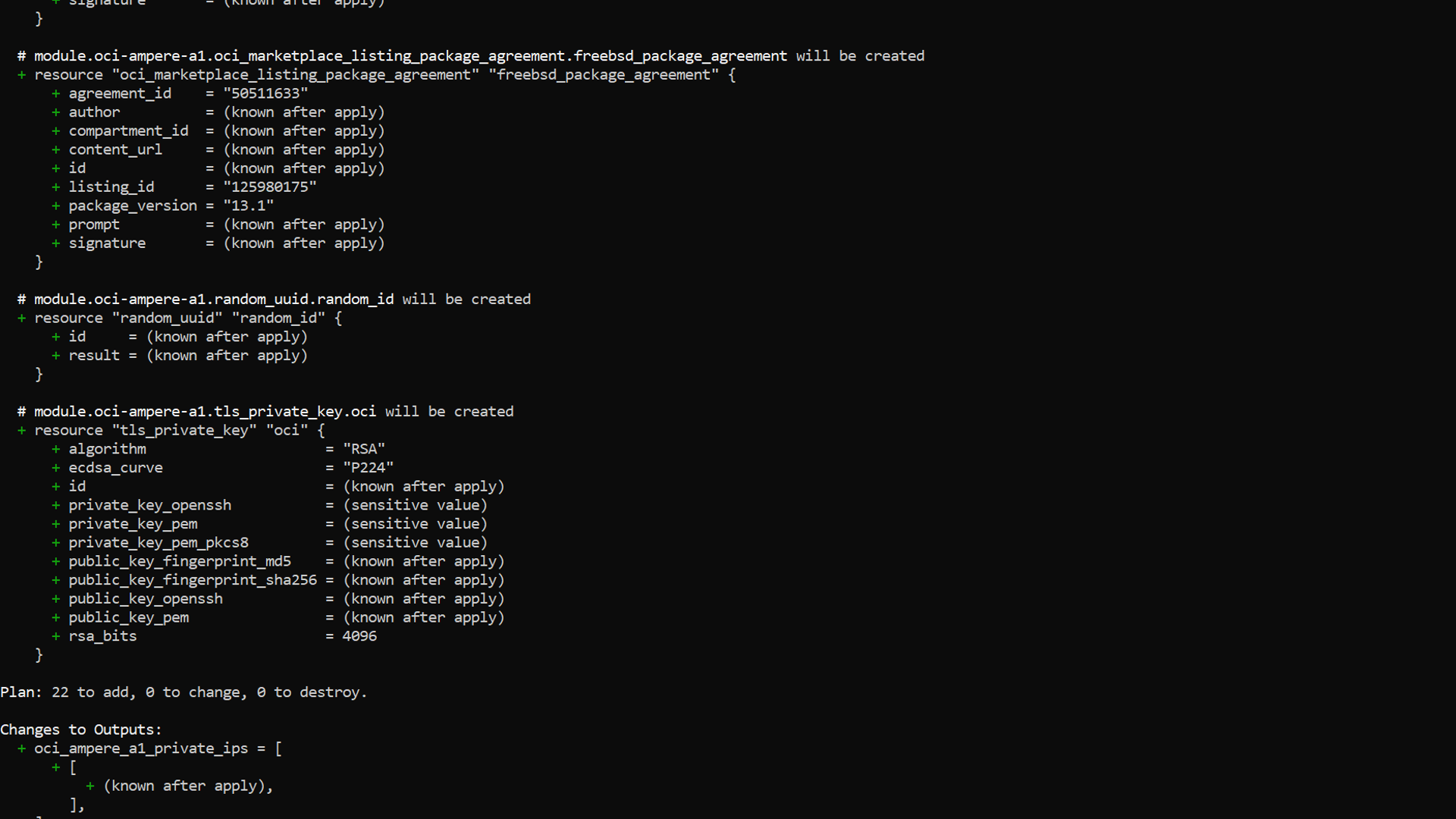

terraform plan

The output from a ‘terraform plan’ execution in the project directory will look like the following:

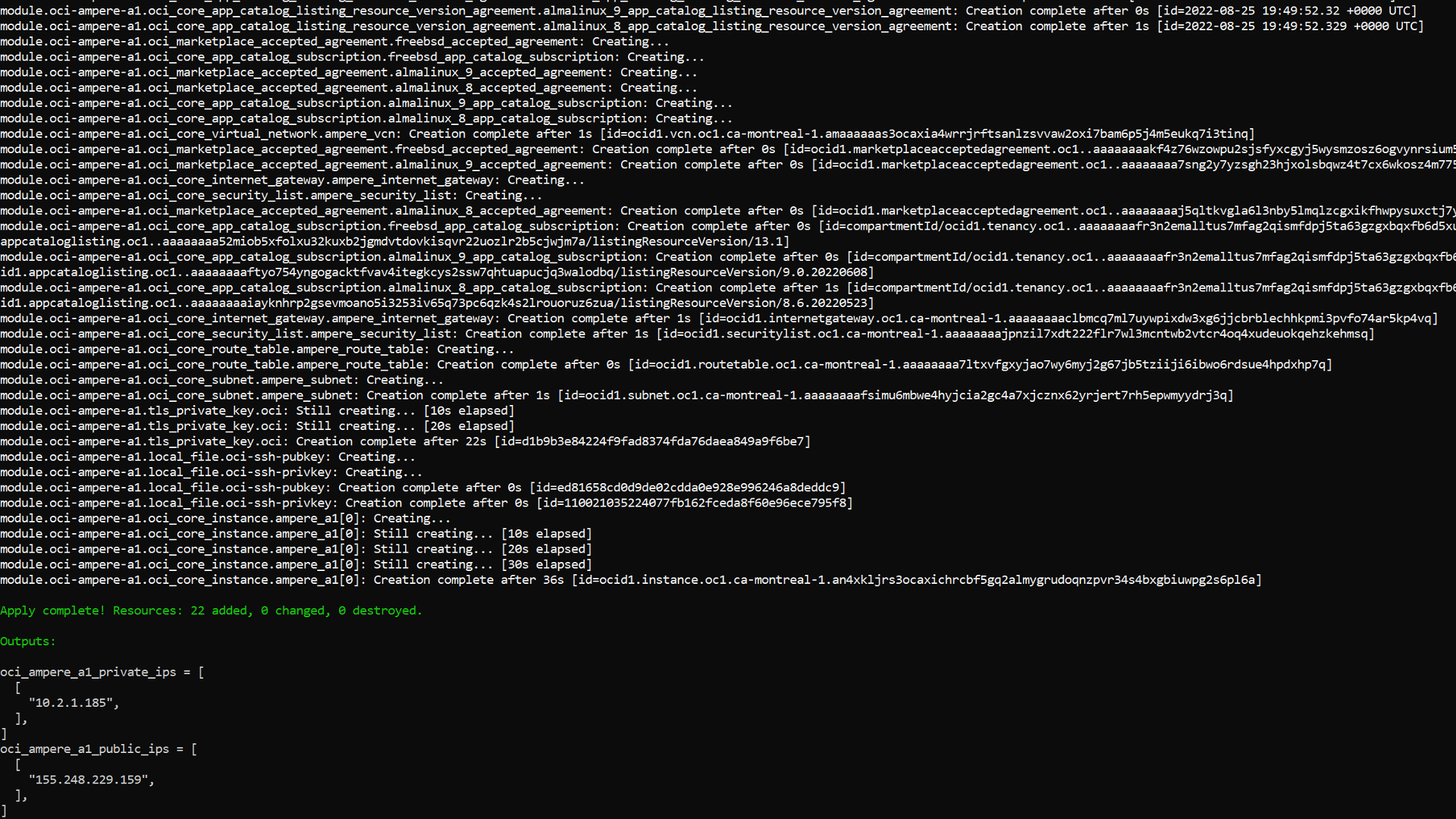

Finally, you will execute the ‘apply’ phase of the terraform execution sequence. This will create all the objects, execute all the tasks and display any output that is defined. Running the following command from the project directory applies the changes without prompting for confirmation:

terraform apply -auto-approve
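The three commands can also be chained so that a failure in one step stops the sequence. The sketch below is one way to do that, not the module's prescribed workflow. It saves the plan to a file named `tfplan` (an arbitrary name) and applies exactly that plan, which also avoids the confirmation prompt. The `TERRAFORM` variable is a hypothetical override that lets you dry-run the sequence, for example with `TERRAFORM=echo`.

```shell
#!/bin/sh
# TERRAFORM is a hypothetical override for dry runs; it defaults to the
# real terraform binary.
TERRAFORM="${TERRAFORM:-terraform}"

# run_workflow: run init, plan, and apply in order, stopping at the
# first step that fails. The saved plan file guarantees that apply
# performs exactly what plan displayed.
run_workflow() {
    "$TERRAFORM" init || return 1
    "$TERRAFORM" plan -out=tfplan || return 1
    "$TERRAFORM" apply tfplan
}
```

After sourcing the snippet, call `run_workflow` from the project directory that contains main.tf and terraform.tfvars.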

The following is an example of output from an ‘apply’ run of terraform from within the project directory:

Logging in

Next you’ll need to log in with the dynamically generated SSH key that will be sitting in your project directory. To log in, take the IP address from the output above and run the following SSH command:

ssh -i ./oci-id_rsa opc@155.248.228.151

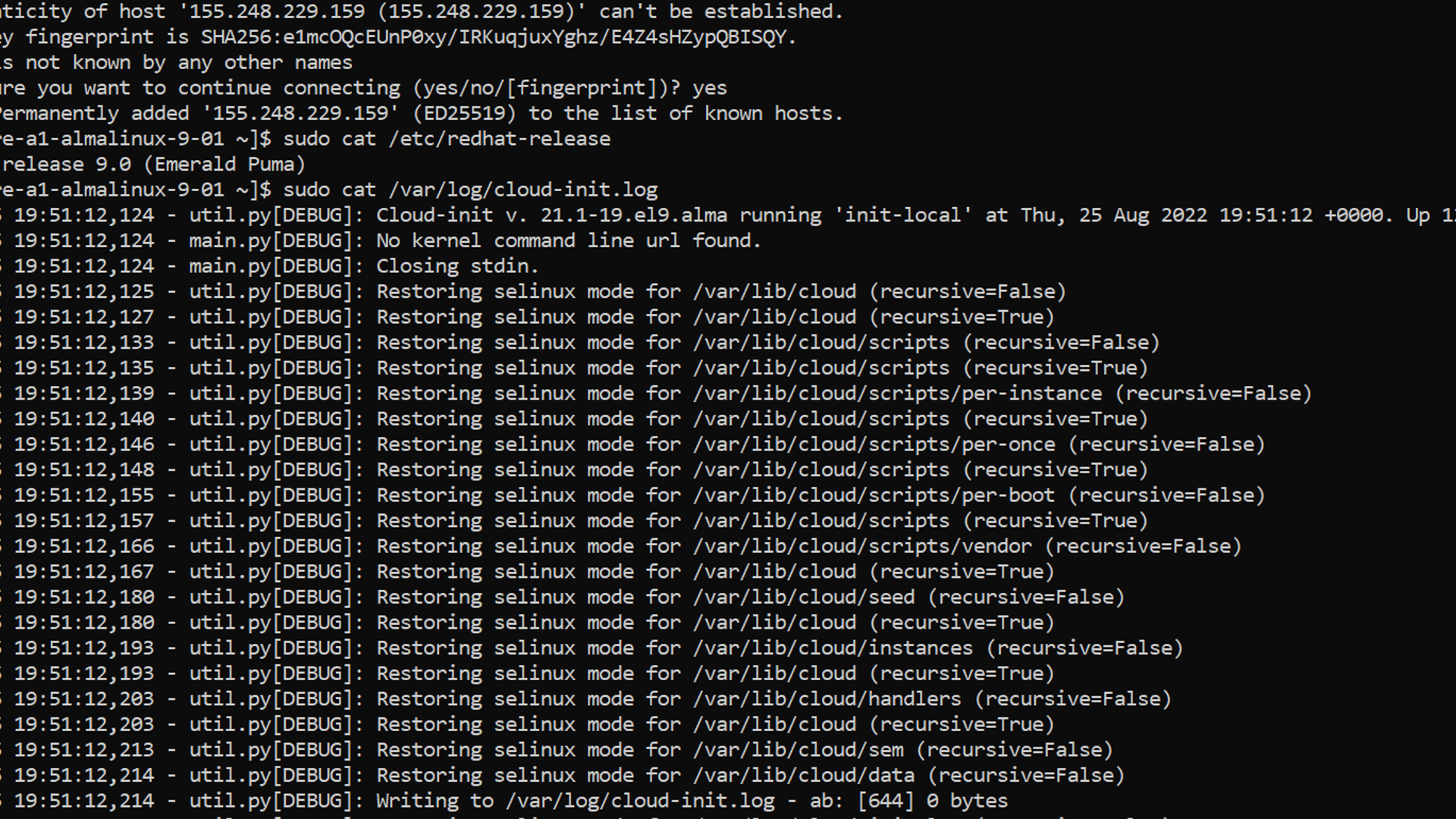
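A freshly launched shape may refuse SSH connections for a short time while it boots. The following sketch retries a command until it succeeds; `retry` is a hypothetical helper written for this walkthrough, and the attempt count and delay are arbitrary.

```shell
#!/bin/sh
# retry ATTEMPTS DELAY COMMAND...: run COMMAND until it succeeds,
# sleeping DELAY seconds between tries. Returns 0 on the first success,
# or 1 once ATTEMPTS tries have failed. COMMAND always runs at least once.
retry() {
    attempts=$1
    delay=$2
    shift 2
    n=1
    while :; do
        "$@" && return 0
        [ "$n" -ge "$attempts" ] && return 1
        n=$((n + 1))
        sleep "$delay"
    done
}
```

For example, `retry 30 10 ssh -i "$KEY_FILE" -o ConnectTimeout=5 "opc@$HOST" true` would try for roughly five minutes, where `KEY_FILE` and `HOST` are placeholders for the generated key and the public IP address from the Terraform output.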

You should be automatically logged in after running the SSH command. The following is output from connecting to a shape over SSH and then running ‘sudo cat /var/log/messages’ to verify cloud-init execution and package installation:

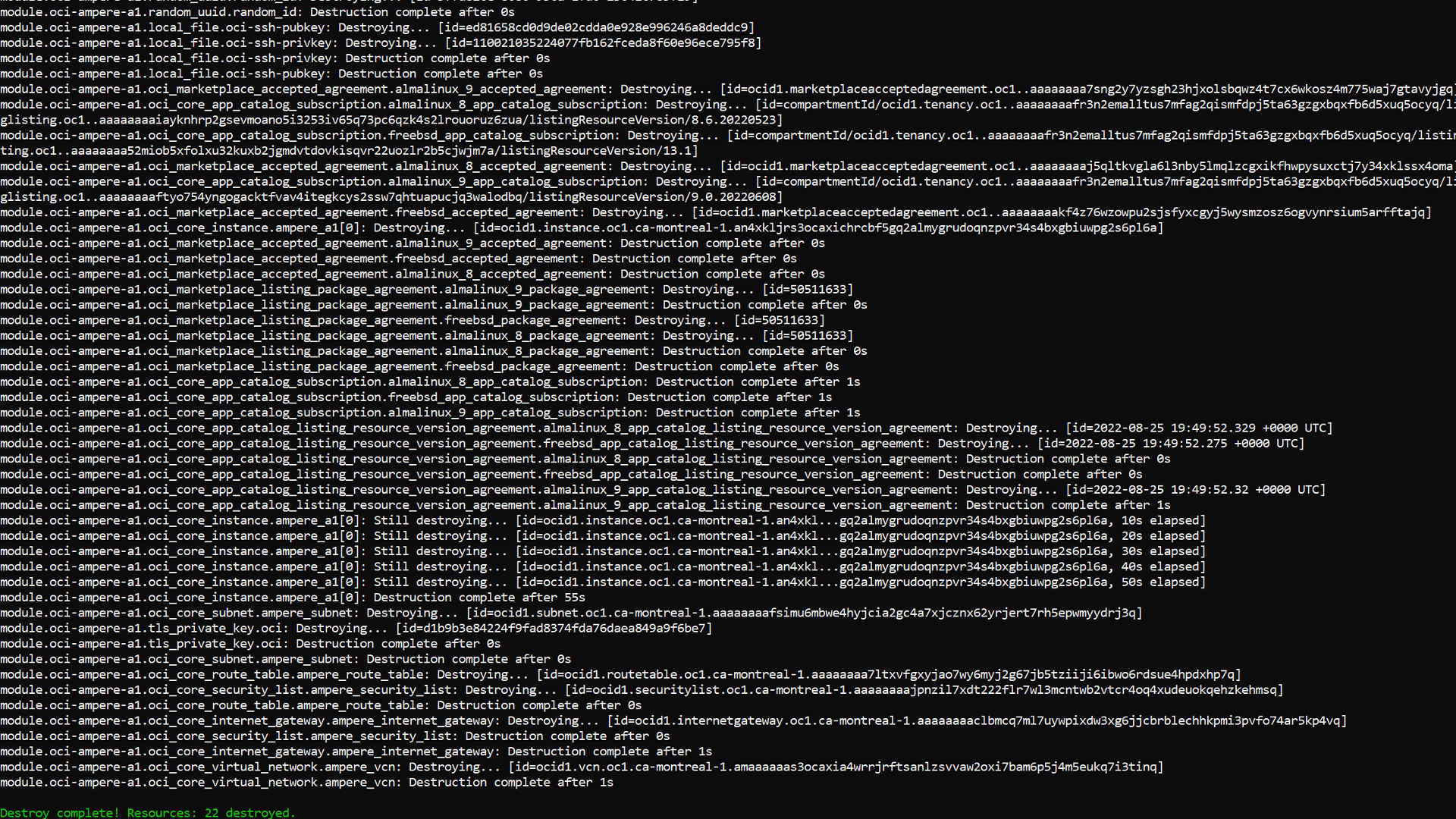

Destroying when done

You should now have a fully running and configured AlmaLinux 9 shape. When finished, execute the ‘destroy’ command from a command line in the project directory to remove all created objects in a ‘leave no trace’ manner:

terraform destroy -auto-approve
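To confirm that nothing is left behind, you can ask Terraform which resources it still tracks. The sketch below wraps `terraform state list`, which prints one line per tracked resource; `state_is_empty` and the `TERRAFORM` override are hypothetical names used for illustration.

```shell
#!/bin/sh
# TERRAFORM is a hypothetical override for testing; it defaults to the
# real terraform binary.
TERRAFORM="${TERRAFORM:-terraform}"

# state_is_empty: return 0 if Terraform tracks no resources,
# 1 if resources remain, and 2 if the state could not be read.
state_is_empty() {
    resources=$("$TERRAFORM" state list) || return 2
    [ -z "$resources" ]
}
```

Running `state_is_empty && echo "all resources removed"` from the project directory after the destroy completes gives a quick check.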

The following is example output of ‘terraform destroy’ when used on this project.

Now that you have seen it work, try modifying the cloud-init file with some changes and then performing the same workflow. You will soon be iterating quickly using AlmaLinux 9 with OCI Ampere A1 shapes.