Migrating Your NGINX Front End to HPE ProLiant RL300 in a Multi-Architecture Deployment

Many large-scale web applications today are deployed in vast data centers using multiple racks of servers, often spread across the country or the globe. Historically, the data center server space has been dominated by x86 processors. With the introduction of Ampere and other Arm-based CPUs at many cloud service providers, as well as the release of enterprise server options like the HPE ProLiant RL300 Gen11 featuring Ampere Altra Max processors, arm64 has become an attractive alternative to x86. Arm servers are a strong choice for a range of workloads due to their high core counts, low power usage, linear scalability, and higher performance, especially on the front end of a web service.

There are many considerations that go into introducing a new architecture such as arm64 into your infrastructure, and there are enterprise tools that support the implementation, management, and scaling of mixed-architecture clusters. This allows organizations to pair each workload with the right architecture to optimize performance, price, and ease of deployment and management. With the recent rise in energy costs globally, managing power consumption and footprint within IT stacks is critical to the financial health of organizations. Introducing Arm-based servers into your hardware footprint gives you a powerful tool to reduce power consumption without sacrificing performance or service levels. Co-hosting x86 and arm64 nodes in the same cluster is a practical way to weigh costs and benefits and to leverage the mature software and hardware ecosystems that exist for both architectures. In addition, mixing architectures reduces vendor lock-in risk, especially for growing and diversifying businesses.

Specifically, applications that sit on the front end of enterprise cloud infrastructure, such as web servers, search engines, and content management systems, are ideal targets for introducing the scalable, power-efficient arm64 architecture. Java is a de facto standard for developing these types of applications, and because it compiles to architecture-neutral bytecode that runs on the JVM, it can generally be used as is without porting. Thus, starting a multi-architecture rollout with front-end applications delivers the fastest ROI and allows existing x86 machines to be repurposed for other legacy applications and microservices.

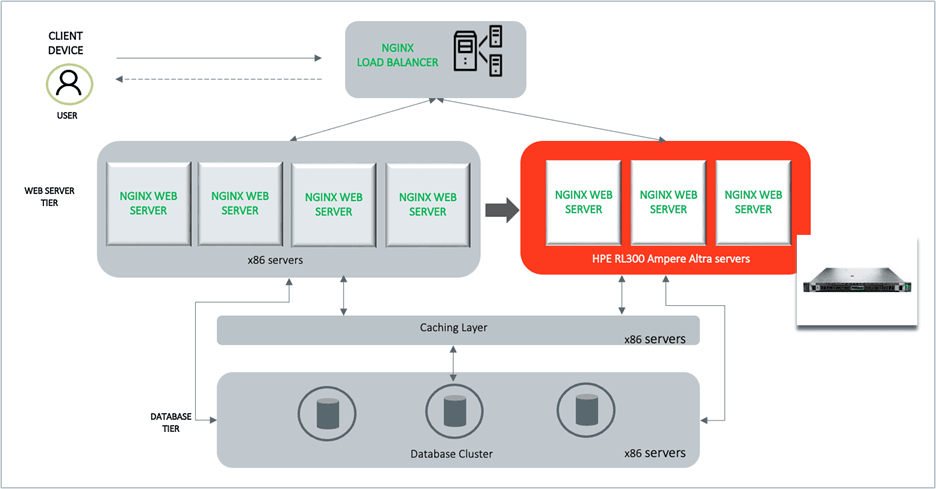

In this post, we will show you how to introduce the HPE RL300 server into an x86-only web service deployment and migrate part of your application to this server using a lift-and-shift approach. Since most modern web applications use a micro-service based architecture, it is easier to migrate a single micro-service to a new Arm-based server than to migrate the entire application to arm64. We will focus on the front-end micro-service as a use case for the multi-architecture deployment. NGINX is a widely used web server for web-based applications such as online banking, social networks, e-commerce, and other platforms where many concurrent users navigate different applications within a given website. Specifically, we provide step-by-step instructions on how to scale an NGINX-based web front end out to HPE RL300 servers.

We also evaluate the results of a case study using a scale-out composite web service and calculate the benefits of migrating the front-end NGINX tier to HPE RL300 servers. Based on the performance and performance/watt data, migrating NGINX from Intel Ice Lake processors to Ampere Altra Max processors results in up to one-third rack space savings and a one-third power reduction. The rest of the application tiers are left intact on the x86 servers, and the whole web service continues to run in a multi-architecture deployment without compromising performance.

Prerequisites

To try a multi-architecture deployment with x86 and arm64 servers, you’ll need at least two servers with web server software (NGINX) installed. One of the servers uses an x86 processor and is referred to below as host1. The second server is the HPE ProLiant RL300 with an Ampere Altra Max processor, referred to below as host2.

Of course, users can also choose to run multiple virtual machines or containers on a specific server, rather than using full machines.

Installing NGINX Web Server

To display the web pages of your application to users, you’re going to employ NGINX, a high-performance web server, as your application front end. Depending on the operating system, you’ll use the YUM or APT package manager to install this software.

    sudo yum -y update
    sudo yum install ca-certificates
    sudo yum -y install nginx
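The commands above cover YUM-based systems. As a minimal sketch of how the APT path mentioned earlier might look alongside them, the helpers below pick a package manager and run the matching commands. The function names `detect_pkg_manager` and `install_nginx` are hypothetical and not part of NGINX or either package manager.

```shell
#!/bin/sh
# Hypothetical helpers; the names are illustrative, not from the original post.

# Report which package manager is available on this host.
detect_pkg_manager() {
    if command -v apt-get >/dev/null 2>&1; then
        echo apt
    elif command -v yum >/dev/null 2>&1; then
        echo yum
    else
        echo unknown
    fi
}

# Install NGINX with the given package manager (auto-detected when omitted).
install_nginx() {
    pm=${1:-$(detect_pkg_manager)}
    case "$pm" in
        apt)
            sudo apt-get update &&
            sudo apt-get -y install ca-certificates nginx
            ;;
        yum)
            sudo yum -y update &&
            sudo yum -y install ca-certificates nginx
            ;;
        *)
            echo "unsupported package manager: $pm" >&2
            return 1
            ;;
    esac
}
```

Calling `install_nginx` with no argument uses whichever manager is detected; `install_nginx apt` or `install_nginx yum` forces one.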

We start with NGINX deployed on x86 (host1). Allow connections to NGINX: if you have a firewall enabled, allow regular HTTP traffic on ports 80 and 8080, and if you have configured SSL, also allow HTTPS traffic on port 443.
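If the host uses firewalld (an assumption; the original does not name a firewall), the ports listed above could be opened roughly as follows. The helper name `open_nginx_ports` and its `--with-https` argument are hypothetical.

```shell
#!/bin/sh
# Assumption: firewalld is the active firewall on this host.
# open_nginx_ports is a hypothetical helper, not an NGINX or firewalld command.
open_nginx_ports() {
    # HTTP on port 80 and the web server port 8080.
    sudo firewall-cmd --permanent --add-service=http &&
    sudo firewall-cmd --permanent --add-port=8080/tcp || return 1

    # HTTPS on port 443, only when SSL has been configured.
    if [ "${1:-}" = "--with-https" ]; then
        sudo firewall-cmd --permanent --add-service=https || return 1
    fi

    # Apply the permanent rules to the running firewall.
    sudo firewall-cmd --reload
}
```

Run `open_nginx_ports` for HTTP only, or `open_nginx_ports --with-https` when port 443 is also needed.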

To start NGINX and verify the status, run:

    sudo systemctl start nginx
    sudo systemctl status nginx

Connect to host1’s IP address or hostname from your web browser. If you see the NGINX default landing page, you have successfully installed NGINX and enabled HTTP traffic to your web server.

Configuring NGINX as a Load Balancer

In large-scale cluster deployments, you can have multiple servers that host the web application’s front-end services. Load balancing is an excellent way to scale out your application across these servers and increase its performance, user experience and redundancy.

NGINX can be configured as a simple yet powerful load balancer to improve your servers’ resource availability and efficiency. All you need to do is set up NGINX with instructions on where to listen for connections and where to forward them.

To configure load balancing, modify the NGINX configuration file. By default, the file is named **nginx.conf** and is placed in the **/etc/nginx** directory. The location may vary depending on the operating system and the package system used to install NGINX.
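Because the location can vary, one way to find the file is to read the `--conf-path` build option that `nginx -V` reports. This is a sketch under the assumption that the installed binary was built with an explicit `--conf-path`; the function name `nginx_conf_path` is hypothetical.

```shell
#!/bin/sh
# Print the configuration file path this nginx binary was built with.
# nginx -V writes its build options to stderr, hence the 2>&1.
# Prints nothing if the build did not set --conf-path explicitly.
nginx_conf_path() {
    nginx -V 2>&1 | tr ' ' '\n' | sed -n 's/^--conf-path=//p'
}
```

If `nginx_conf_path` prints nothing, `sudo nginx -t` also names the configuration file it tests.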

Edit the NGINX configuration file on the x86 server (host1) and define the following two sections, upstream and server, as in the example below:

    # Define which servers to include in the load balancing scheme.
    http {
        upstream backend {
            server host1:8080;
        }

        # This server accepts all traffic to port 80 and passes it to the upstream.
        # Notice that the upstream name and the proxy_pass need to match.
        server {
            listen 80;
            location / {
                proxy_pass http://backend;
            }
        }

        # Use port 8080 for the web server configuration
        server {
            listen 8080;
        }
    }

To configure load balancing for HTTPS instead of HTTP, use “https” as the protocol in the proxy_pass URL. Then use the following command to restart NGINX:

    sudo systemctl restart nginx

Check that NGINX restarts successfully. When you enter the load balancer’s public IP address in your web browser, it should pass the request to the back-end server on host1.
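A syntax error in nginx.conf will make the restart fail, so it can help to validate the file first with `nginx -t`. The wrapper below is a minimal sketch of that habit; the name `restart_nginx_checked` is hypothetical.

```shell
#!/bin/sh
# Restart NGINX only if the edited configuration passes nginx's own check.
# restart_nginx_checked is a hypothetical helper name.
restart_nginx_checked() {
    if sudo nginx -t; then
        sudo systemctl restart nginx
    else
        echo "nginx configuration failed validation; not restarting" >&2
        return 1
    fi
}
```

Running `restart_nginx_checked` after each edit leaves the running service untouched when the new configuration does not parse.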

Adding RL300 to Create a Multi-Architecture Deployment

Next, we will extend the front end of our deployment by running a new instance of NGINX on the HPE RL300 server (host2). Start by installing NGINX on the RL300 server using the same method described in the Installing NGINX Web Server section above. The open-source NGINX package works well on both x86 and arm64 servers. Ampere recommends certain optimizations to get the best performance out of NGINX. The instructions to compile and build NGINX from source for arm64 are provided here.
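Before installing, it can be worth confirming which architecture each host actually reports. The sketch below maps the output of `uname -m` to the labels used in this post; the function name `arch_label` is hypothetical.

```shell
#!/bin/sh
# Map a machine name (default: the output of `uname -m`) to the
# architecture labels used in this post. arch_label is a hypothetical name.
arch_label() {
    machine=${1:-$(uname -m)}
    case "$machine" in
        x86_64|amd64)  echo x86 ;;
        aarch64|arm64) echo arm64 ;;
        *)             echo "other ($machine)" ;;
    esac
}
```

Running `arch_label` on host1 and on host2 should report x86 and arm64 respectively if the hosts match the setup described here.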

The next step is to configure NGINX on this server (host2). We will not be configuring it as a load balancer. Use the default nginx.conf with port 8080 for the server section. The nginx.conf file on host2 will not have an upstream section. The server section is shown below:

    http {
        # This server accepts all traffic to port 8080
        server {
            listen 8080;
        }
    }

Once installed, start the NGINX service and make sure you can reach the NGINX default landing page at port 8080 of the host2 IP address from your web browser.

Next, update the NGINX load balancer on host1 to include the new RL300 server. Edit the nginx.conf file on host1 and add the IP address of host2 to the upstream section.

    # Define which servers to include in the load balancing scheme.
    # It's best to use the servers' private IPs for better performance and security.
    # Add the IP address for RL300 to the upstream.
    http {
        upstream backend {
            server host1:8080;
            server host2:8080;
        }

        # This load balancer accepts all traffic to port 80 and passes it to the upstream.
        # Notice that the upstream name and the proxy_pass need to match.
        server {
            listen 80;
            location / {
                proxy_pass http://backend;
            }
        }

        server {
            listen 8080;
        }
    }

Use the following command to restart NGINX:

    sudo systemctl restart nginx

Now, when you access the load balancer’s IP address, NGINX distributes the requests round-robin, its default method, across both servers configured in the upstream section. Your multi-architecture deployment for NGINX is now complete, and user traffic is distributed across the NGINX instances running on x86 and arm64 servers.
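With default landing pages, the two instances look identical from the browser. One way to observe the distribution, sketched here under an assumption that is not part of the original setup, is to add `add_header X-Served-By $hostname;` to the port 8080 server block on each host and then tally that header over a number of requests. The header name and both function names are hypothetical.

```shell
#!/bin/sh
# Assumption: each backend's port-8080 server block contains
#     add_header X-Served-By $hostname;
# so that every response names the instance that produced it.

# Print the X-Served-By value for <count> requests to <url>, one per line.
sample_backends() {
    url=$1
    count=$2
    i=0
    while [ "$i" -lt "$count" ]; do
        curl -sI "$url" | tr -d '\r' |
            awk -F': ' 'tolower($1) == "x-served-by" { print $2 }'
        i=$((i + 1))
    done
}

# Tally backend names read from stdin as "<requests> <name>" lines.
tally_backends() {
    sort | uniq -c | awk '{ print $1, $2 }'
}
```

For example, `sample_backends http://host1/ 10 | tally_backends` should list both hosts with roughly equal counts when round-robin is in effect.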

Advantages of Migrating Front End (NGINX) of a Composite Web Application to a Heterogeneous Cluster

To understand the benefits of migrating a service like NGINX to Ampere Altra Max processors, we performed a scale-out analysis of a composite web service composed of four tiers of micro-services: web front end (NGINX), key-value store (Redis), object cache (Memcached), and back-end database (MySQL). The applications chosen per tier were weighted as a percentage of the whole service. The load in our example was set to 1.3M requests/second as received by the front-end tier of the service and processed by NGINX as the HTTPS server. The load on each of the other tiers was then set to a performance level commensurate with its weight, establishing the total load of the weighted four-tier web service model. More details about the composite web service model can be found here.

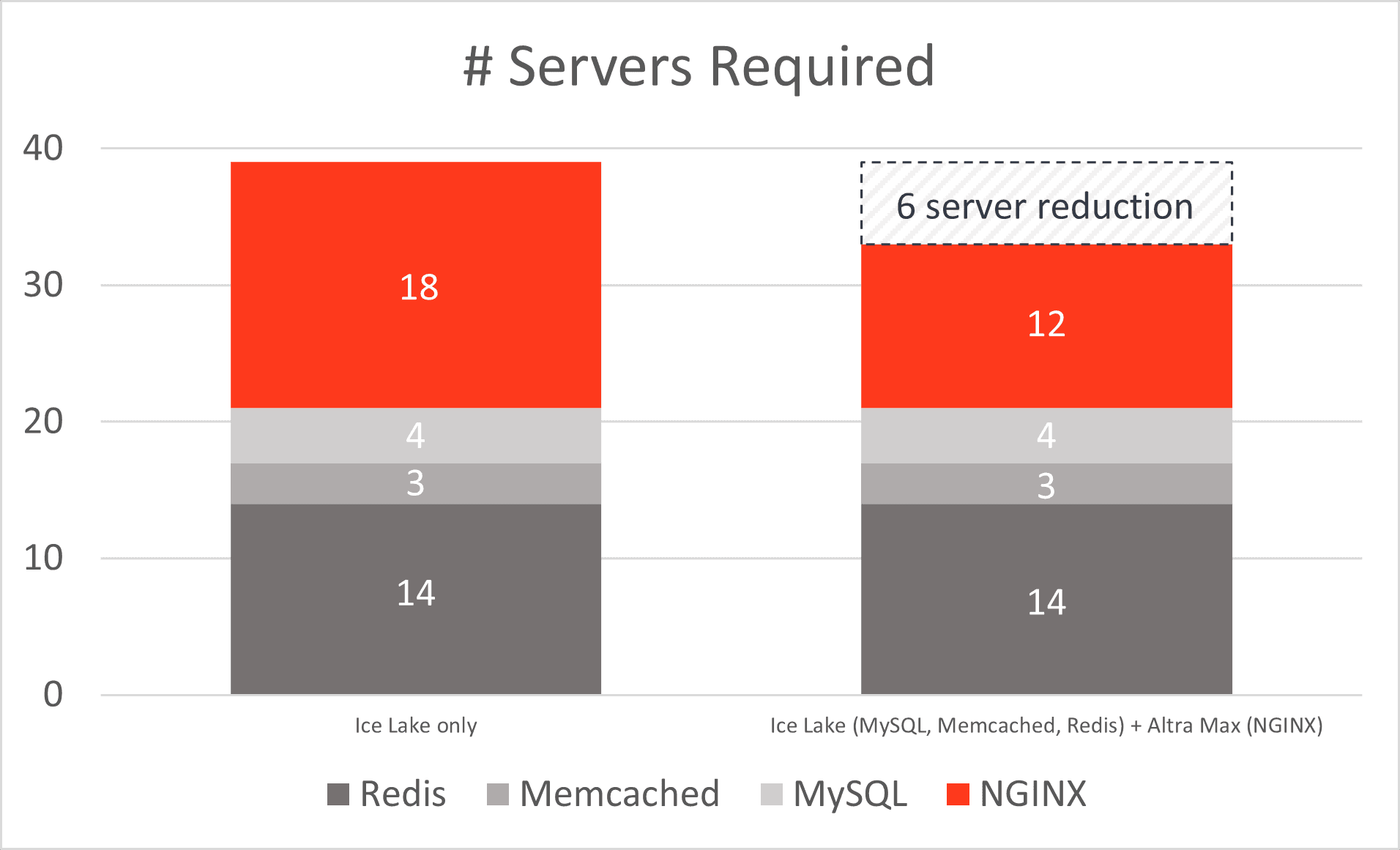

The graphs below compare two deployments of the composite four-tier web service: one with all component micro-services running on Intel x86 servers, and a mixed-architecture deployment that adds Ampere arm64 servers. In the mixed-architecture scenario, the web service front end (NGINX) is migrated to Ampere Altra Max, while the other three component micro-services continue to run on Intel x86 servers. Migrating even a single component (NGINX) of the web service to Altra Max significantly reduces the total number of servers needed, as well as the overall power consumption, for delivering the same performance measured in requests/second.

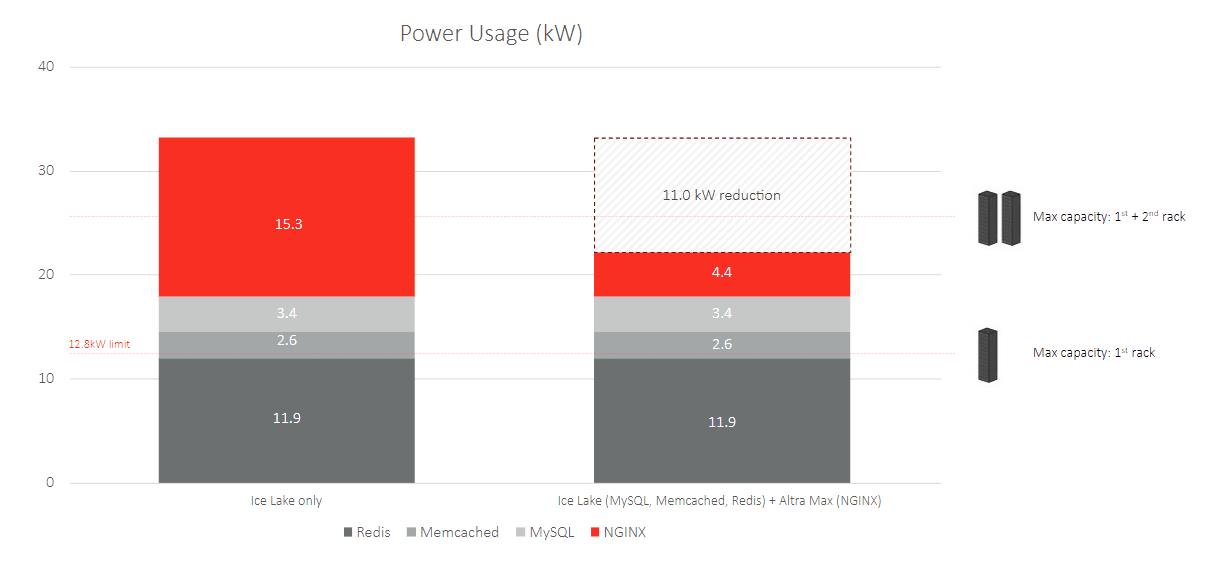

In a data center deployment with a maximum power budget of 12.8 kW per rack, moving NGINX to Ampere Altra Max saves enough power to reduce the footprint from three racks (38.4 kW total budget) to two (25.6 kW).

A web service deployed exclusively on Intel servers requires 50% more rack space and power budget than a heterogeneous deployment with Altra Max and Intel servers. The legacy all-x86 setup also consumes approximately 11 kW of additional power to deliver the same performance as the heterogeneous setup. For reference, that is about $22,000 per year in power costs at California prices (July 2023 data from EIA), assuming full utilization, or 68 tons of CO2.
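These figures can be cross-checked with simple arithmetic. The sketch below computes the annual energy behind the 11 kW difference, then the electricity price and emissions factor that the quoted $22,000 and 68 tons would imply. Those two rates are derived here for illustration and are not stated in the source; the function name `annual_kwh` is hypothetical.

```shell
#!/bin/sh
# Annual energy in kWh for a constant load in kW, assuming full
# utilization for all 24 * 365 hours of the year.
annual_kwh() {
    awk -v kw="$1" 'BEGIN { printf "%d\n", kw * 24 * 365 }'
}

extra_kwh=$(annual_kwh 11)
echo "extra energy: ${extra_kwh} kWh/year"

# Rates implied by the quoted cost and CO2 figures (derived, not from the source).
awk -v kwh="$extra_kwh" 'BEGIN {
    printf "implied price: %.3f USD/kWh\n", 22000 / kwh
    printf "implied emissions: %.2f t CO2/MWh\n", 68 / (kwh / 1000)
}'

# Rack power budgets at 12.8 kW per rack: three racks versus two.
awk 'BEGIN {
    printf "all-x86: %.1f kW, mixed: %.1f kW\n", 3 * 12.8, 2 * 12.8
}'
```

At full utilization, 11 kW over 8,760 hours is 96,360 kWh per year, so the quoted cost corresponds to an electricity price of roughly $0.23 per kWh.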

Overall, the benefits of migrating to a multi-architecture deployment using HPE ProLiant RL300 Arm-based servers are clear. The code migration process is straightforward when using containerized images for micro-services such as NGINX. Features like load balancing and reverse proxying make it easy to deploy and run multiple instances of the application on different servers with a mix of architectures that includes both x86 and arm64. In addition, migrating key workloads to Ampere Altra Max processors yields substantial power savings and a reduced data center footprint without compromising the performance of your application.

Additional Resources

To get more information or trial access to Ampere Systems through our Developer Programs, sign up for our Developer Newsletter or join the conversation at Ampere’s Developer Community.