AI Compute: The Modern-day Chimera

The Chimera, an iconic hybrid in Greek mythology, combines a lion, a goat, and a serpent. It has a lion's head, a goat's body with a goat's head rising from its back, and a serpent's tail, and it breathes fire. Today, the word 'chimera' describes anything formed by blending disparate parts or ideas.

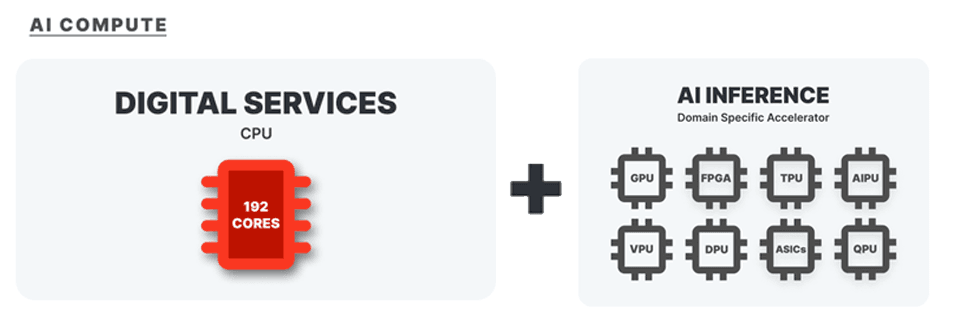

Imagine the CPU (central processing unit) and the DSA (domain-specific accelerator) as a modern-day Chimera. Much like the mythical beast, this technological hybrid possesses distinct strengths combined into a single, formidable entity. The CPU reigns as the master of control and logic, orchestrating tasks and maintaining order. The DSA excels with its specialized parallel processing prowess, tackling repetitive calculations essential for AI. The connectivity between them ensures seamless communication and power transfer, allowing the solution to unleash its full computational might.

AI Compute: Combining CPUs and DSAs for Cloud and AI Success

In today's world of ever-increasing computational demands, finding the right balance of processing power is essential. From traditional business applications to cutting-edge AI, organizations need flexible and efficient infrastructure. That's where the combination of CPUs and DSAs shines.

CPUs are the brains of a computer. Think of them as the masterminds behind your business applications, databases, and web servers. These generalists excel at sequential processing and at handling a wide variety of tasks. On their own, CPUs run traditional AI inference models very well, and they can also serve LLMs at low batch sizes. But when more horsepower is needed, bring in the DSAs.

DSAs are specialized for tasks like AI processing, making them incredibly fast at performing repetitive calculations simultaneously. They come in many shapes, sizes, and names: GPUs, TPUs, ASICs, FPGAs, VPUs, etc. Their specialized strength makes them the driving force behind AI inference. When it comes to large language models (LLMs) or highly specialized models and datasets, DSAs provide the additional raw power needed.

Why Combine CPUs and DSAs?

Here's where the true magic happens:

- Cost-Effective Versatility: By utilizing both CPUs and DSAs, cloud environments gain flexibility. Traditional workloads run smoothly on CPUs, while the largest or most specialized AI inference tasks can be offloaded to the DSAs for accelerated performance, optimizing cost and resource allocation.

- Seamless Workflows: In complex AI applications, CPUs and DSAs work together in a complementary way. The CPU can handle data preprocessing, task coordination, and overall management, while the DSA tackles the heaviest and most specialized computation for the AI model.

- Scalability Made Easier: Cloud environments with a mix of CPU and DSA instances can easily be scaled up or down based on the demands of traditional workloads and AI inference, ensuring you're always utilizing resources efficiently.
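
The division of labor described above can be sketched as a simple placement rule. This is a minimal illustration, not a scheduler from any real platform: the `Workload` type, the `choose_device` function, and both thresholds are hypothetical and chosen only to make the idea concrete. Traditional workloads and small, low-batch inference stay on the CPU, while large or high-batch inference is sent to a DSA.

```python
from dataclasses import dataclass

# Hypothetical cut-offs, picked only for illustration. Real limits depend on
# the CPU, the accelerator, the model, and the latency target.
MAX_CPU_BATCH_SIZE = 4
MAX_CPU_MODEL_PARAMS_B = 10.0  # billions of parameters


@dataclass(frozen=True)
class Workload:
    name: str
    is_ai_inference: bool
    batch_size: int = 1
    model_params_b: float = 0.0  # model size in billions of parameters


def choose_device(workload: Workload) -> str:
    """Return "cpu" or "dsa" for a workload, using the thresholds above."""
    if not workload.is_ai_inference:
        # Databases, web servers, and other traditional services stay on CPU.
        return "cpu"
    fits_on_cpu = (
        workload.batch_size <= MAX_CPU_BATCH_SIZE
        and workload.model_params_b <= MAX_CPU_MODEL_PARAMS_B
    )
    return "cpu" if fits_on_cpu else "dsa"


def plan(workloads: list[Workload]) -> dict[str, list[str]]:
    """Group workload names by the device they are placed on."""
    placement: dict[str, list[str]] = {"cpu": [], "dsa": []}
    for workload in workloads:
        placement[choose_device(workload)].append(workload.name)
    return placement


example = plan([
    Workload("web-server", is_ai_inference=False),
    Workload("fraud-classifier", is_ai_inference=True, batch_size=1, model_params_b=0.1),
    Workload("chat-llm", is_ai_inference=True, batch_size=32, model_params_b=70.0),
])
print(example)
```

In practice the thresholds would come from benchmarking each model on the available hardware rather than from fixed constants.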

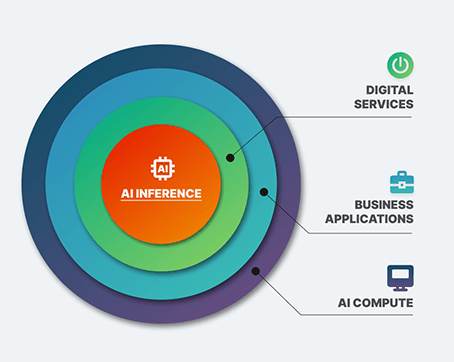

AI Will Become Part of All Applications

Forget siloed AI. The future demands AI as an essential building block for every service and application. As servers stay in use longer, you’ll need to design your next-generation infrastructure with maximum compute cores and AI acceleration. This will ensure your services are equipped for both current and future needs.

Ampere: Teaming up with Qualcomm and NetInt to Bring AI Compute to Market

Ampere and Qualcomm have teamed up to bring you a high-density compute solution that’s designed for demanding traditional workloads and cutting-edge AI. Our joint platform packs an incredible eight AI 100 Ultra accelerators to run AI inference and 192 Ampere compute cores for other Cloud-Native services into a compact 2U server. In a typical 12.5 kW rack, this equates to hosting up to 56 AI accelerators with 1,344 compute cores, while eliminating the need for expensive liquid cooling. This is truly high-density compute.
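
The rack-level figures follow directly from the per-server figures, as the short calculation below shows. The server count of seven and the per-server power budget are derived from the numbers quoted above; they are not published specifications, and the power figure is only the upper bound implied by dividing the rack budget evenly.

```python
# Figures quoted in the paragraph above.
ACCELERATORS_PER_SERVER = 8
CPU_CORES_PER_SERVER = 192
RACK_ACCELERATORS = 56
RACK_CPU_CORES = 1_344
RACK_POWER_KW = 12.5


def servers_implied(rack_total: int, per_server: int) -> int:
    """Number of servers implied by a rack total and a per-server count."""
    servers, remainder = divmod(rack_total, per_server)
    if remainder:
        raise ValueError("rack total is not a whole multiple of the per-server count")
    return servers


servers_from_accelerators = servers_implied(RACK_ACCELERATORS, ACCELERATORS_PER_SERVER)
servers_from_cores = servers_implied(RACK_CPU_CORES, CPU_CORES_PER_SERVER)

# Derived, not a published spec: an even split of the rack's power budget.
power_budget_per_server_kw = RACK_POWER_KW / servers_from_accelerators

print(f"Servers per rack (from accelerators): {servers_from_accelerators}")
print(f"Servers per rack (from CPU cores):    {servers_from_cores}")
print(f"Implied power budget per 2U server:   {power_budget_per_server_kw:.2f} kW")
```

Both totals point to the same number of 2U servers per rack, so the accelerator and core counts are consistent with each other.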

Ampere has also joined forces with NetInt to unleash the power of AI-powered live transcoding. The Quadra Video Server Ampere Edition combines video processing units (VPUs) for video encoding and decoding with OpenAI’s Whisper to support up to 30 simultaneously transcoded/translated live channels.

The Future of Cloud Computing and AI

The hybrid model of CPUs and DSAs is becoming the standard in cloud computing and AI. It allows organizations to:

- Run demanding AI applications while maintaining their essential business infrastructure.

- Optimize costs by maximizing the use of different hardware types.

- Adapt to changing workloads and future advancements in AI technology.

Unleash the transformative power of AI compute. This CPU-DSA combination will fuel your most ambitious AI projects and propel your cloud environment to unprecedented heights.