Unlocking the Memory Maze — A Quest for QoS

Have you noticed that despite loading our personal devices with multiple applications, we can almost always watch our favorite soccer or cricket game without interruption? What is working behind the scenes is a Quality-of-Service (QoS) capability, which keeps system resources balanced between critical applications and less critical applications running in the background. QoS capabilities provide mechanisms to ensure that system resources such as cache capacity and memory bandwidth are balanced across resource-intensive and non-resource-intensive workloads.

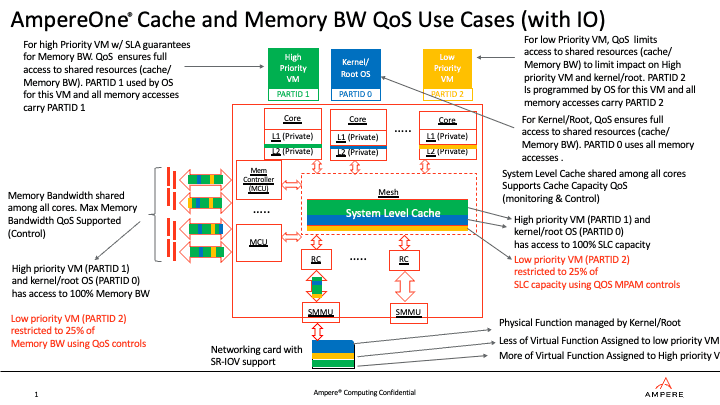

In a multi-tenant IaaS/PaaS environment with third-party and first-party workloads co-located on an Ampere® SOC, a QoS capability ensures allocation of a minimum threshold of a shared micro-architectural resource to higher priority software, such as virtual machines (VMs), containers, and processes. It also limits the impact that lower priority software has on the performance of higher priority software.

QoS allows shared memory resources such as system level cache (SLC) capacity and memory bandwidth to be effectively partitioned amongst contending VMs. The QoS capability provides mechanisms by which software entities such as VMs can be associated with a unique identifier, referred to as a partition ID (PARTID), and then assigned either a minimum or a maximum share of the SLC capacity and memory bandwidth that the PARTID may use.
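To make the PARTID idea concrete, here is a minimal sketch in Python of how system software might record per-PARTID limits and sanity-check them before programming any controls. This is only a toy model: the class `PartitionLimits`, the function `validate`, the VM names, and every percentage are assumptions made up for illustration, not an AmpereOne or Linux interface.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PartitionLimits:
    """Hypothetical per-PARTID limits, as percentages of one shared resource."""
    partid: int
    min_pct: int  # guaranteed floor for this PARTID
    max_pct: int  # hard ceiling for this PARTID


def validate(limits):
    """Check each floor/ceiling pair and that all floors fit in the resource."""
    for p in limits:
        if not 0 <= p.min_pct <= p.max_pct <= 100:
            raise ValueError(f"PARTID {p.partid}: need 0 <= min <= max <= 100")
    if sum(p.min_pct for p in limits) > 100:
        raise ValueError("guaranteed minimums exceed 100% of the resource")
    return True


# Hypothetical association of software entities with PARTIDs.
vm_to_partid = {"hypervisor": 0, "vm-gold": 1, "vm-batch": 2}

# Illustrative SLC capacity limits: floors for the important software,
# a ceiling for the low priority batch VM.
slc_limits = [
    PartitionLimits(partid=0, min_pct=20, max_pct=100),
    PartitionLimits(partid=1, min_pct=50, max_pct=100),
    PartitionLimits(partid=2, min_pct=0, max_pct=30),
]

validate(slc_limits)
```

The one design point worth noting is the check that the floors add up to no more than 100%: a minimum guarantee only means something if all guarantees can be honored at the same time.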

That allows system software to effectively identify higher priority software and ensure the necessary allocation of the shared resources to that software. For instance, the highest priority for SLC and memory bandwidth would be given to the operating system and hypervisor running on the SOC. To do so, system software tags that software with a PARTID and programs the SLC and memory bandwidth QoS controls so that memory accesses carrying that PARTID have full access to the SLC and memory bandwidth as needed.

Similarly, when multiple VMs vie for resources, the QoS capability delivers the necessary share of the shared resources to a higher priority VM with an SLA (service-level agreement), while lower priority VMs can be limited in cache capacity and memory bandwidth (BW) so that they do not impact higher priority VMs.

How does the magic of QoS work?

AmpereOne® QoS enables two approaches that work together under system software control to apportion the performance-giving resources of the memory system:

1. Memory-system resource (memory bandwidth, cache capacity) partitioning
2. Memory-system resource usage monitoring

With usage monitoring, system software can determine how much cache capacity and bandwidth a higher priority VM needs and then use the resource partitioning capability to ensure that the VM has that capacity available. As a result, high priority VMs with an SLA get guaranteed memory BW and access to SLC capacity. Conversely, low priority VMs can be restricted in their access to shared resources (cache capacity, memory bandwidth). Restricting lower priority VMs to lower usage also limits the performance impact on high priority VMs when applications contend for resources.
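The monitor-then-partition loop described above can be sketched in a few lines of Python. The function `recommend_floor_pct`, the "peak plus headroom" policy, and the sample readings are all assumptions chosen for illustration; real system software may well use a different policy and will read usage from hardware monitors rather than a list.

```python
import math


def recommend_floor_pct(samples_pct, headroom_pct=10, cap_pct=100):
    """Suggest a guaranteed floor from monitored usage.

    samples_pct: observed usage of a resource by one VM, in percent.
    Policy (an assumption): peak observed usage plus fixed headroom,
    rounded up and clipped to cap_pct.
    """
    if not samples_pct:
        raise ValueError("need at least one usage sample")
    peak = max(samples_pct)
    return min(cap_pct, math.ceil(peak + headroom_pct))


# Made-up memory bandwidth readings for a high priority VM.
bw_samples = [32.0, 41.5, 38.2, 44.9]
floor = recommend_floor_pct(bw_samples)
print(f"suggested memory bandwidth floor: {floor}%")  # prints 55%
```

The suggested floor would then become the minimum allocation for that VM's PARTID, and whatever is left over bounds what lower priority VMs can be allowed to use.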

Consider a scenario in which a latency-sensitive video server and a bandwidth-intensive mail server run on two different cores and contend for the shared SLC and memory. In the absence of QoS, the response time of the latency-sensitive app can suffer because of unconstrained cache sharing and/or memory BW utilization by the BW-intensive mail application. A QoS-aware system ensures allocation of a minimum threshold of shared resources to the latency-sensitive app and partitions memory bandwidth so that the BW-intensive app stays within a set limit, ensuring the response time of the latency-sensitive app is not negatively impacted.
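A toy calculation helps show why the floor and the limit matter in this scenario. The Python sketch below is not how the hardware arbitrates; it is a simplified model that assumes contended bandwidth is split in proportion to demand, and the function `share_bandwidth` and all demand figures are hypothetical.

```python
def share_bandwidth(demand, floors=None, ceilings=None, total=100.0):
    """Split `total` bandwidth units among workloads (simplified model).

    Floors are granted first, ceilings clip demand, and whatever is left
    is shared in proportion to each workload's remaining demand.
    """
    floors = floors or {}
    ceilings = ceilings or {}
    # A ceiling clips how much a workload may consume.
    capped = {w: min(d, ceilings.get(w, total)) for w, d in demand.items()}
    # A floor is honored first, up to the (capped) demand.
    granted = {w: min(capped[w], floors.get(w, 0.0)) for w in demand}
    remaining = total - sum(granted.values())
    unmet = {w: capped[w] - granted[w] for w in demand}
    total_unmet = sum(unmet.values())
    if total_unmet > 0:
        # Scale factor never exceeds 1, so no workload gets more than it asked for.
        scale = max(0.0, min(1.0, remaining / total_unmet))
        for w in demand:
            granted[w] += unmet[w] * scale
    return granted


# Made-up demands, in percent of available memory bandwidth.
demand = {"video": 40.0, "mail": 90.0}

no_qos = share_bandwidth(demand)
with_qos = share_bandwidth(demand, floors={"video": 40.0}, ceilings={"mail": 60.0})

print(no_qos)    # video is squeezed to roughly 30.8
print(with_qos)  # video gets its full 40.0, mail is held to 60.0
```

In this model the video server loses about a quarter of the bandwidth it needs when nothing constrains the mail server, and gets all of it once a floor and a ceiling are in place.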

It is indeed exciting for Ampere ecosystem partners to know that QoS provides a leap in workload consolidation density, performance consistency, and predictable service delivery, with better SLAs for cloud service providers’ (CSP) end customers. Moreover, efficient resource utilization also lowers the total cost of ownership for CSPs, allowing them to improve their IaaS/PaaS margins. Lastly, because certain applications can have preferential access to memory/SLC, QoS allows greater flexibility and tunability across the stack and helps deliver faster responsiveness and performance for mission-critical applications, threads, and processes in high load situations.

Here’s great news for those wanting to use it: Linux support for this feature will soon be upstreamed. The QoS feature is enabled in the firmware settings. No changes to the application or VM are needed, and nothing has to be recompiled.

This is just one of the many AmpereOne Cloud Native features that I will be sharing soon. Stay tuned for more!

This animation showcases how QoS works for applications/VMs competing for shared resources. Please share your views with me at smehta@amperecomputing.com so I can keep building great content. Together, we can learn and grow. See QoS in action in our annual roadmap video.