Smart Traffic Shaping: Unleashing Resilient Performance with AmpereOne®

In a cloud environment where many users run tasks concurrently on one processor, it is critical to minimize noisy-neighbor effects and run-to-run variance. In a 192-core processor like AmpereOne®, mesh congestion can easily arise when the cores are running at full tilt and high utilization. In such scenarios, minimizing interference between independent concurrent workloads through responsive traffic management is essential to achieving consistent performance at high CPU utilization.

Ampere's traffic management combines adaptive quality-of-service algorithms with specific flow-control techniques to avoid common over-subscription issues, enabling the highest core counts together with predictable performance at high CPU utilization.

The picture below (Fig 1) illustrates busy traffic through the SoC mesh. The complex network and random data pathways can introduce unnecessary delays and higher latency that degrade performance. Because communication in this system is highly parallel, many transactions are in flight simultaneously.

To address this, Ampere developed adaptive traffic management, a technology built into both the core and the SoC. Its operating principle is that downstream agents, such as memory controllers, caches, or coherency engines, communicate their “busyness” to requesters such as cores; the cores then respond by adjusting the rate and profile of the traffic they generate. This intelligent, adaptive traffic shaping allows Ampere to run a large and diverse set of workloads without throttling or destructive interference between them. As a result, AmpereOne® processors achieve consistent performance even at very high CPU utilization, where cores are handling potentially conflicting tasks.

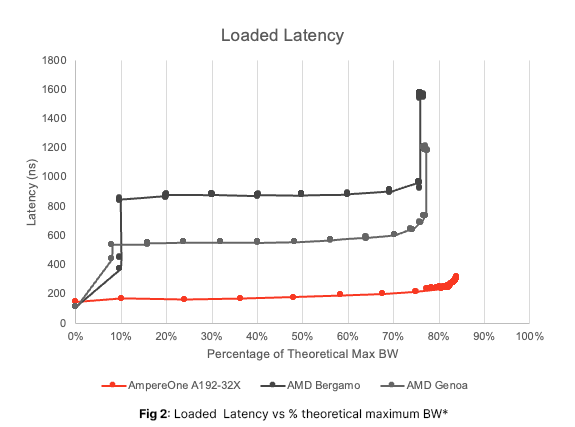
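To make the feedback principle concrete, here is a minimal Python sketch of a requester that adjusts its traffic in response to busyness reports. Ampere does not describe its actual algorithm, so everything here is an assumption for illustration: the four busyness levels, the hypothetical `Requester` class, the outstanding-request "window", and the additive-increase/multiplicative-decrease (AIMD) policy, which is a generic congestion-control scheme borrowed from networking rather than Ampere's method.

```python
from dataclasses import dataclass

# Hypothetical busyness levels reported by a downstream agent (memory
# controller, cache, or coherency engine). The real encoding is not published.
IDLE, LIGHT, BUSY, SATURATED = range(4)


@dataclass
class Requester:
    """A core that limits how many requests it keeps in flight (hypothetical model)."""

    max_window: int = 32  # assumed upper bound on outstanding requests
    min_window: int = 1   # never stop issuing requests entirely
    window: int = 32      # current outstanding-request limit

    def on_feedback(self, busyness: int) -> int:
        """Adjust the in-flight limit from one busyness report, AIMD-style."""
        if busyness >= SATURATED:
            # Multiplicative decrease: back off hard when the target is saturated.
            self.window = max(self.min_window, self.window // 2)
        elif busyness == BUSY:
            # Gentle decrease while the target is busy but not saturated.
            self.window = max(self.min_window, self.window - 1)
        else:
            # Additive increase: probe for more bandwidth when the target is idle or lightly loaded.
            self.window = min(self.max_window, self.window + 1)
        return self.window


core = Requester()
for level in [SATURATED, SATURATED, BUSY, IDLE, IDLE]:
    core.on_feedback(level)
print(core.window)  # prints 9
```

Starting from a full window of 32, two saturated reports halve it to 16 and then 8, a busy report trims it to 7, and two idle reports let it recover to 9. Giving different cores different back-off parameters is one way such a scheme could let a latency-sensitive core and a bandwidth-hungry core react differently to the same signal.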

Hashing, a technique used for congestion management, reduces contention for coherency and DDR traffic, and cores respond dynamically based on their activity. For example, a core running a latency-sensitive application responds differently than a core running a bandwidth-hungry workload. The graph below (Fig 2) shows the loaded latency of AmpereOne processors compared with x86 CPUs. In this experiment, a single latency-sensitive application runs while bandwidth-hungry applications are gradually added.

As the load on the memory subsystem increases, the CPU uses custom feedback on the system state to adjust its traffic and provide fairness, allowing higher utilization while keeping the latency on the AmpereOne® processor (shown in red) flat and low. In contrast, the competing parts (shown in grey) exhibit rising latency as the load increases. The configurations used for the AMD and Ampere CPUs are listed in Appendix 1. Ampere's adaptive traffic management ensures that bandwidth-hungry applications do not interfere with latency-sensitive ones, and the hardware handles this automatically without any software intervention.
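The hashing mentioned above can be illustrated with a generic XOR-folding address hash, a common way to spread cache lines across memory channels or coherency home agents. This is a minimal sketch, not Ampere's hash function, which is not described here: the 64-byte line size, the eight hypothetical targets, and the function names are all assumptions. It compares a naive mapping that uses only the low line-address bits with a hash that folds every address bit into the target index.

```python
LINE_BYTES = 64   # cache-line size assumed for this sketch
NUM_TARGETS = 8   # hypothetical number of channels / home agents (power of two)
TARGET_BITS = NUM_TARGETS.bit_length() - 1  # 3 bits select one of 8 targets


def naive_target(addr: int) -> int:
    """Pick a target from the low line-address bits only."""
    return (addr // LINE_BYTES) % NUM_TARGETS


def hashed_target(addr: int) -> int:
    """XOR-fold all line-address bits, TARGET_BITS at a time, into a target index."""
    line = addr // LINE_BYTES
    target = 0
    while line:
        target ^= line & (NUM_TARGETS - 1)
        line >>= TARGET_BITS
    return target


def histogram(select, addrs):
    """Count how many accesses land on each target under a given mapping."""
    counts = [0] * NUM_TARGETS
    for a in addrs:
        counts[select(a)] += 1
    return counts


# A 512-byte stride (NUM_TARGETS * LINE_BYTES) is a worst case for the naive mapping.
addrs = [i * NUM_TARGETS * LINE_BYTES for i in range(64)]
print(histogram(naive_target, addrs))   # [64, 0, 0, 0, 0, 0, 0, 0]
print(histogram(hashed_target, addrs))  # [8, 8, 8, 8, 8, 8, 8, 8]
```

With the naive mapping, every strided access queues at a single target; the folded hash spreads the same accesses evenly, so no single channel or home agent becomes a hotspot.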

The adaptive traffic management feature is configured automatically by the firmware and is a vital capability for delivering consistent, resilient, and scalable performance in AmpereOne processors.

Appendix 1:

AmpereOne:

• HW: 1 x AmpereOne A192-32X
  - 192c/192t, 3.2 GHz
  - 8 x 64 GiB DDR5 5200 MT/s
• OS: Fedora 38
• Kernel: 6.4.13-200.fc38.aarch64

AMD Genoa:

• HW: 1 x AMD EPYC 9654
  - 96c/192t, 2.4/3.55 GHz
  - 12 x 64 GiB DDR5 4800 MT/s
• OS: Fedora 38
• Kernel: 6.4.13-200.fc38.x86_64

AMD Bergamo:

• HW: 1 x AMD EPYC 9754
  - 128c/256t, 2.25/3.1 GHz
  - 12 x 64 GiB DDR5 4800 MT/s
• OS: Fedora 38
• Kernel: 6.4.13-200.fc38.x86_64

Disclaimer:

All data and information contained herein is for informational purposes only and Ampere reserves the right to change it without notice. This document may contain technical inaccuracies, omissions and typographical errors, and Ampere is under no obligation to update or correct this information. Ampere makes no representations or warranties of any kind, including express or implied guarantees of noninfringement, merchantability, or fitness for a particular purpose, and assumes no liability of any kind. All information is provided “AS IS.” This document is not an offer or a binding commitment by Ampere.

System configurations, components, software versions, and testing environments that differ from those used in Ampere’s tests may result in different measurements than those obtained by Ampere.

©2024 Ampere Computing LLC. All Rights Reserved. Ampere, Ampere Computing, AmpereOne and the Ampere logo are all registered trademarks or trademarks of Ampere Computing LLC or its affiliates. All other product names used in this publication are for identification purposes only and may be trademarks of their respective companies.