DPDK Setup and Tuning Guide on Ampere Processors

How to Build DPDK with Ampere Processors

DPDK (Data Plane Development Kit) is a set of libraries that accelerate packet-processing workloads on a wide variety of CPU architectures, including x86, POWER, and Arm processors. It is licensed under the open-source BSD license.

Pktgen-DPDK is a traffic generator powered by DPDK.

This guide is written for application architects and build engineers who want to build DPDK and set up a test environment on Ampere® Altra® and AmpereOne® processors.

The configuration and instructions below are based on Ampere Altra and also apply to AmpereOne. For DPDK version selection on AmpereOne, see the “DPDK on Ampere® AmpereOne® Processors” section at the end of this guide.

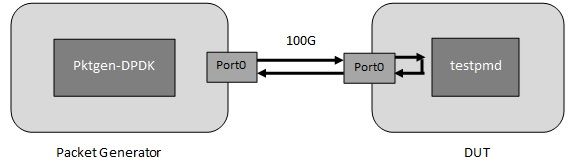

Hardware Configuration for Pktgen-DPDK

The configuration of the hardware is illustrated below.

Packet Generator:

- Ampere Altra Q80-30

- Mellanox ConnectX-6 Dx

- CentOS 8.5

- DPDK 23.03 and Pktgen-DPDK (master branch)

DUT:

- Ampere Altra Max M128-30

- Mellanox ConnectX-5 Ex

- CentOS 8.5

- DPDK 23.03

The packet generator and the DUT are connected back-to-back using a 100G cable.

Installing Prerequisites for Building DPDK

Download MLNX_OFED for the Mellanox NIC and the target OS from Mellanox's website[1], then install it with the following command. The installer reports any packages that are missing from the system. A reboot may be required.

./mlnxofedinstall --add-kernel-support --upstream-libs --dpdk --skip-repo

Install other packages needed for building DPDK.

sudo yum install python3 meson ninja-build python3-pyelftools numactl-devel -y

Install packages needed for building Pktgen-DPDK.

sudo yum install libpcap-devel -y
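Before you build, you can quickly confirm that the tools installed by the commands above are on the PATH. This avoids a failed meson run. The following sketch is not part of the DPDK tooling, and the function name `check_build_tools` is hypothetical. It checks only for commands and for the pyelftools Python module. It does not check the -devel header packages.

```bash
#!/usr/bin/env bash
# Hypothetical helper: report which DPDK/Pktgen-DPDK build prerequisites are missing.
# Extra command names can be passed as arguments. Returns 0 if all are present, 1 otherwise.
check_build_tools() {
    local missing=0 tool
    # Commands provided by the python3, meson and ninja-build packages.
    for tool in python3 meson ninja "$@"; do
        if ! command -v "$tool" >/dev/null 2>&1; then
            echo "missing command: $tool"
            missing=1
        fi
    done
    # python3-pyelftools installs a Python module, not a command.
    if ! python3 -c 'import elftools' >/dev/null 2>&1; then
        echo "missing Python module: elftools (python3-pyelftools)"
        missing=1
    fi
    return "$missing"
}

# Usage: check_build_tools && echo "ready to build DPDK"
```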

Download and Build DPDK

Download the DPDK source code from DPDK website: https://fast.dpdk.org/rel/ and then build using the following commands.

wget https://fast.dpdk.org/rel/dpdk-23.03.tar.gz
tar zxf dpdk-23.03.tar.gz
cd dpdk-23.03
meson setup build
ninja -C build
sudo ninja -C build install

The DPDK libraries and binaries are installed in /usr/local/lib64 and /usr/local/bin.
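On Red Hat-family distributions such as CentOS, /usr/local/lib64 is usually not in the dynamic loader's default search path. The DPDK Linux getting-started guide therefore suggests listing it in a file under /etc/ld.so.conf.d and running `ldconfig`. The following minimal sketch does this idempotently. The helper name `register_libdir` and the fragment name `dpdk.conf` are assumptions made for this example.

```bash
#!/usr/bin/env bash
# Hypothetical helper: make sure a library directory is listed in an
# ld.so.conf.d fragment so the dynamic loader can find the DPDK libraries.
#   $1 = library directory (default /usr/local/lib64)
#   $2 = conf fragment (default /etc/ld.so.conf.d/dpdk.conf, an assumed name)
register_libdir() {
    local libdir="${1:-/usr/local/lib64}"
    local conf="${2:-/etc/ld.so.conf.d/dpdk.conf}"
    # Append the directory only if that exact line is not already present.
    if ! grep -qxF "$libdir" "$conf" 2>/dev/null; then
        echo "$libdir" >> "$conf"
    fi
}

# Usage (as root), then refresh the loader cache and look for a DPDK library:
#   register_libdir /usr/local/lib64 && ldconfig
#   ldconfig -p | grep librte_eal
```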

Download and Build Pktgen-DPDK

This section applies only to the packet generator system. Download the Pktgen-DPDK source code from GitHub. Pktgen-DPDK links against the DPDK libraries installed in the previous section, so select a Pktgen-DPDK version that matches that DPDK version as closely as possible. Then build it with the following commands:

export PKG_CONFIG_PATH=/usr/local/lib64/pkgconfig
git clone https://github.com/pktgen/Pktgen-DPDK.git
cd Pktgen-DPDK
make
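Pktgen-DPDK finds DPDK through pkg-config, so a wrong PKG_CONFIG_PATH is a common cause of build failures. The following sketch checks that `libdpdk` is visible and prints its version before you run `make`. The function name `check_libdpdk` is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical pre-build check: confirm that pkg-config can locate the DPDK
# installed earlier (libdpdk.pc) and print its version.
check_libdpdk() {
    if ! command -v pkg-config >/dev/null 2>&1; then
        echo "pkg-config not found" >&2
        return 1
    fi
    if ! pkg-config --exists libdpdk; then
        echo "libdpdk.pc not found; check PKG_CONFIG_PATH" >&2
        return 1
    fi
    echo "libdpdk $(pkg-config --modversion libdpdk)"
}

# Usage:
#   export PKG_CONFIG_PATH=/usr/local/lib64/pkgconfig
#   check_libdpdk && (cd Pktgen-DPDK && make)
```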

System Health Check and Settings

Before testing, apply the following settings on both the packet generator and the DUT. Some options may differ depending on the operating system (OS).

System Health Check

A system health check is always the first step before any testing. Use common utilities such as dmidecode, lscpu, lshw, lspci, fio, and iperf/iperf3 to check the BIOS version, CPU frequency, memory DIMM population, Ethernet link speed, and so on. Make sure all system components are functional and performing as expected.
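It helps to save a snapshot of these checks with each test run so that results from different runs can be compared. The following sketch is one way to collect such a snapshot. It calls only the tools that are installed and skips the rest. The function names and the 40-line truncation are arbitrary choices for this example. Storage (fio) and network throughput (iperf3) tests are left out because they need a workload and a peer.

```bash
#!/usr/bin/env bash
# Run a command only if it is installed; print at most 40 lines of its output.
run_if_present() {
    if command -v "$1" >/dev/null 2>&1; then
        echo "== $*"
        "$@" 2>&1 | head -n 40
    else
        echo "== $1: not installed, skipped"
    fi
}

# Hypothetical health snapshot; $1 = optional network interface to inspect.
health_snapshot() {
    run_if_present lscpu                       # CPU model, core count, frequency
    run_if_present dmidecode -s bios-version   # BIOS version (needs root)
    run_if_present dmidecode -t memory         # DIMM population (needs root)
    run_if_present lspci                       # NICs and other PCIe devices
    if [ -n "${1:-}" ]; then
        run_if_present ethtool "$1"            # link speed and duplex
    fi
    echo "== hugepages"
    grep -i huge /proc/meminfo 2>/dev/null || echo "/proc/meminfo not available"
}

# Usage (as root, after defining the functions):
#   health_snapshot <interface> > "health-$(hostname).txt"
```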

BIOS Settings

BIOS settings depend on the platform. The following generic options are recommended for DPDK on Ampere Altra family processors.

Advanced->ACPI Settings->Enable ACPI Auto Configuration [Disabled]

Advanced->ACPI Settings->Enable CPPC [Disabled]

Advanced->ACPI Settings->Enable LPI [Disabled]

Chipset->CPU Configuration->ANC mode [Monolithic]

Chipset->CPU Configuration->SLC Replacement Policy [Enhanced Least Recently Used]

Chipset->CPU Configuration->L1/L2 Prefetch [Enabled]

Chipset->CPU Configuration->SLC as L3$ [Disabled]

Please refer to the user manual of the platform for details of BIOS settings.

Hugepage Support

The supported hugepage sizes depend on the kernel's base page size. With a 4K base page size, the supported hugepage sizes are 64K, 2M, 32M, and 1G (1G is recommended). With a 64K base page size, the supported hugepage sizes are 2M, 512M, and 16G (512M or 16G is recommended).
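Before you pick a hugepage size, check the base page size of the running kernel. The following sketch encodes the table from the paragraph above. It does not query the kernel, so use `ls /sys/kernel/mm/hugepages/` to see what the kernel actually exposes. The function name `supported_hugepage_sizes` is hypothetical.

```bash
#!/usr/bin/env bash
# Map the kernel base page size (in bytes) to the hugepage sizes listed in this
# guide for Ampere Altra family (arm64) kernels. The table comes from the text.
supported_hugepage_sizes() {
    case "$1" in
        4096)  echo "64K 2M 32M 1G" ;;   # 4K base pages; 1G recommended
        65536) echo "2M 512M 16G" ;;     # 64K base pages; 512M or 16G recommended
        *)     echo "unknown base page size: $1" >&2; return 1 ;;
    esac
}

# Usage: supported_hugepage_sizes "$(getconf PAGESIZE)"
```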

This document uses a 512M hugepage size. Set the number of hugepages with the following command (as root):

echo 100 > /sys/devices/system/node/node0/hugepages/hugepages-524288kB/nr_hugepages
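The sysfs directory is named after the page size in kB (512M is 524288kB). The following sketch builds that path for any NUMA node and page size. It also computes how much memory a reservation pins: 100 pages × 512M = 50 GiB. The function names are hypothetical. After writing the count, read it back, because the kernel may allocate fewer pages than requested when memory is fragmented.

```bash
#!/usr/bin/env bash
# Build the per-NUMA-node sysfs path for a hugepage size given in MiB.
hugepage_nr_path() {
    local node="$1" size_mb="$2"
    # sysfs names the directory after the page size in kB: 512M -> 524288kB
    echo "/sys/devices/system/node/node${node}/hugepages/hugepages-$((size_mb * 1024))kB/nr_hugepages"
}

# Memory (in GiB, integer division) pinned by <count> pages of <size_mb> MiB.
hugepage_total_gib() {
    local count="$1" size_mb="$2"
    echo $(( count * size_mb / 1024 ))
}

# Usage (as root): reserve 100 x 512M pages on node 0, then verify.
#   echo 100 > "$(hugepage_nr_path 0 512)"
#   cat "$(hugepage_nr_path 0 512)"
```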

Alternatively, set the hugepages through kernel boot options so that the setting persists across reboots:

default_hugepagesz=512M hugepagesz=512M hugepages=100
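On CentOS 8, boot options can be added with `grubby`. After a reboot, /proc/cmdline shows whether they took effect. The following sketch adds a word-exact check so that, for example, `hugepages=10` does not match `hugepages=100`. The function name `has_boot_opt` is hypothetical.

```bash
#!/usr/bin/env bash
# Return 0 if a kernel boot option is present as a whole word in a command
# line string (default: the running kernel's /proc/cmdline).
has_boot_opt() {
    local opt="$1" cmdline="${2:-$(cat /proc/cmdline 2>/dev/null)}"
    # Pad with spaces so the match is exact and cannot hit a longer option.
    case " $cmdline " in
        *" $opt "*) return 0 ;;
        *)          return 1 ;;
    esac
}

# Usage on CentOS 8, followed by a reboot:
#   sudo grubby --update-kernel=ALL --args="default_hugepagesz=512M hugepagesz=512M hugepages=100"
#   has_boot_opt hugepages=100 && echo "hugepage boot options active"
```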

For more details about hugepages, refer to the kernel documentation:

https://www.kernel.org/doc/html/latest/admin-guide/mm/hugetlbpage.html

https://www.kernel.org/doc/html/latest/arm64/hugetlbpage.html

Performance Governor

Skip this step if you set “Enable CPPC” to “Disabled” in the BIOS. Otherwise, set the CPU frequency governor to performance to prevent frequency scaling for power saving:

sudo cpupower frequency-set -g performance
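To confirm that the governor change applied to every CPU, you can count the CPUs that are still on another governor. The following sketch takes the sysfs root as a parameter so it can be tried against a mock tree. It skips CPUs that have no cpufreq directory, which may be the case when CPPC is disabled in the BIOS. The function name is hypothetical.

```bash
#!/usr/bin/env bash
# Count CPUs whose cpufreq governor is not "performance".
# $1 = sysfs root (default /sys). CPUs without cpufreq are skipped.
non_performance_cpus() {
    local root="${1:-/sys}" f gov count=0
    for f in "$root"/devices/system/cpu/cpu[0-9]*/cpufreq/scaling_governor; do
        [ -r "$f" ] || continue          # glob did not match, or no cpufreq
        gov=$(cat "$f")
        [ "$gov" = "performance" ] || count=$((count + 1))
    done
    echo "$count"
}

# Usage: [ "$(non_performance_cpus)" -eq 0 ] && echo "all CPUs use the performance governor"
```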

Kernel Boot Options

Other recommended kernel boot options:

- iommu.passthrough=1: Bypass the IOMMU for DMA

- selinux=0: Disable SELinux at boot time

- isolcpus=xx-yy: Isolate the given set of CPUs from scheduler disturbance

- nohz_full=xx-yy: Stop the scheduler ticks on the given set of CPUs when possible

- rcu_nocbs=xx-yy: Put the given set of CPUs in RCU no-callback mode

- rcu_nocb_poll: Make the RCU callback-offload kthreads poll for callbacks instead of being woken by the offloaded CPUs

For details of these options, refer to the kernel documentation:

https://www.kernel.org/doc/html/latest/admin-guide/kernel-parameters.html
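isolcpus, nohz_full, and rcu_nocbs normally take the same CPU list (the cores DPDK polls on), so it is easy for them to drift apart when edited by hand. The following sketch builds the option string from a single list and rejects malformed lists. The function name `dpdk_isolation_args` is hypothetical. It accepts only plain lists such as 40-79 or 2,4-7, not the extra flag syntax that some of these options allow.

```bash
#!/usr/bin/env bash
# Hypothetical helper: emit the recommended boot options for one CPU list
# so that isolcpus, nohz_full and rcu_nocbs stay consistent.
dpdk_isolation_args() {
    local cpus="$1"
    local re='^[0-9]+(-[0-9]+)?(,[0-9]+(-[0-9]+)?)*$'   # e.g. 40-79 or 2,4-7
    if ! [[ $cpus =~ $re ]]; then
        echo "invalid CPU list: $cpus" >&2
        return 1
    fi
    echo "iommu.passthrough=1 selinux=0 isolcpus=${cpus} nohz_full=${cpus} rcu_nocbs=${cpus} rcu_nocb_poll"
}

# Usage, e.g. for the lcores 40-79 given to testpmd in this guide:
#   sudo grubby --update-kernel=ALL --args="$(dpdk_isolation_args 40-79)"
```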

NIC

Disable pause frames (Ethernet flow control) on the interface used for testing:

ethtool -A <interface> rx off tx off
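`ethtool -a <interface>` prints the current pause settings. The following sketch checks that output for RX and TX off. It assumes the usual `RX:` and `TX:` lines that ethtool prints, so treat it as an illustration and verify it against your ethtool version. The function name is hypothetical.

```bash
#!/usr/bin/env bash
# Return 0 if `ethtool -a` output on stdin shows RX and TX pause both off.
# Assumes lines of the form "RX:  off" and "TX:  off".
pause_frames_off() {
    awk '
        $1 == "RX:" { rx = $2 }
        $1 == "TX:" { tx = $2 }
        END { exit !(rx == "off" && tx == "off") }
    '
}

# Usage: ethtool -a <interface> | pause_frames_off && echo "pause frames disabled"
```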

Set the PCIe Max Read Request Size (MaxReadReq) of the device to 4096 bytes. The command below is an example for the Mellanox NIC; the register offset and value are device-specific.

setpci -s <PCIe Address> 68.w=5950
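In the PCIe Device Control register, Max_Read_Request_Size occupies bits 14:12 and encodes 128 << n bytes. 0x5950 has n = 5, which gives 4096 bytes. The fixed offset 0x68 assumes that the NIC's PCI Express capability starts at 0x60, so that 0x68 is Device Control (capability base + 8). The following sketch is a more portable alternative. It addresses the register with setpci's `CAP_EXP+8.w` name and changes only the MRRS bits, leaving the other Device Control bits as they were. The function names are hypothetical.

```bash
#!/usr/bin/env bash
# Decode Max_Read_Request_Size (bits 14:12, 128 << n bytes) from a
# Device Control value given as hex without a 0x prefix (as setpci prints it).
mrrs_bytes() {
    local val=$((16#$1))
    echo $(( 128 << ((val >> 12) & 7) ))
}

# Return the register value with only the MRRS field set to 4096 bytes (n = 5).
with_mrrs_4096() {
    local val=$((16#$1))
    printf '%04x\n' $(( (val & ~0x7000) | (5 << 12) ))
}

# Usage (as root), read-modify-write so the other bits are preserved:
#   cur=$(setpci -s <PCIe Address> CAP_EXP+8.w)
#   setpci -s <PCIe Address> CAP_EXP+8.w="$(with_mrrs_4096 "$cur")"
#   mrrs_bytes "$(setpci -s <PCIe Address> CAP_EXP+8.w)"
```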

Enable relaxed ordering and CQE compression on the Mellanox NIC:

mst start
mst status
mlxconfig -d <PCIe Address> query | grep -e PCI_WR_ORDERING -e CQE_COMPRESSION
mlxconfig -d <PCIe Address> set PCI_WR_ORDERING=1
mlxconfig -d <PCIe Address> set CQE_COMPRESSION=1
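New mlxconfig settings take effect only after a reboot or firmware reset, so it is worth re-checking them afterwards. The following sketch reads `mlxconfig ... query` output from stdin. It assumes each parameter is printed as `NAME   label(N)`, for example `force_relax(1)`, and extracts N. Check this format against your firmware tools before relying on it. The function names are hypothetical.

```bash
#!/usr/bin/env bash
# Print the numeric value N of one parameter from `mlxconfig ... query` output
# on stdin. Assumes lines of the form "PARAM   label(N)".
mlx_param_value() {
    awk -v key="$1" '$1 == key {
        if (match($2, /\([0-9]+\)$/)) print substr($2, RSTART + 1, RLENGTH - 2)
    }'
}

# Return 0 when both settings used in this guide are 1.
mlx_tuned() {
    local out
    out=$(cat)
    [ "$(printf '%s\n' "$out" | mlx_param_value PCI_WR_ORDERING)" = "1" ] &&
    [ "$(printf '%s\n' "$out" | mlx_param_value CQE_COMPRESSION)" = "1" ]
}

# Usage: mlxconfig -d <PCIe Address> query | mlx_tuned || echo "settings not applied yet"
```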

Optimal settings vary between NICs; refer to the device's user manual for details.

SLC Installation

“SLC (System Level Cache) Installation” lets the IO subsystem cache IO data directly in the SLC. This can reduce latency in the data path and improve the performance of DPDK applications. Enabling this feature on Ampere Altra family processors requires additional utilities and has kernel dependencies. Please contact Ampere support for this feature.

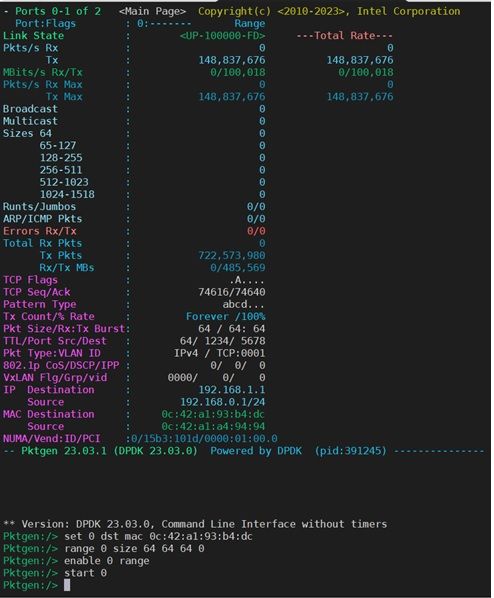

Start Pktgen Application

Start the pktgen application on the packet generator system. In this example, one 100G port is used as the packet transmitter.

export PKG_CONFIG_PATH=/usr/local/lib64/pkgconfig
cd Pktgen-DPDK
./Builddir/app/pktgen --file-prefix pktgen -a 0000:01:00.0 -l 40-59 -n 8 -- -N -T -m "[42-43:44-59].0"
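In the pktgen options, `-m "[42-43:44-59].0"` maps lcores 42-43 to Rx and lcores 44-59 to Tx on port 0. The first lcore of `-l 40-59` acts as pktgen's main (display) core. The following sketch is a small wrapper that keeps these ranges in variables so they can be changed together. The function and variable names are hypothetical, and the defaults simply repeat the command shown above. Pass `--dry-run` to print the command instead of running it.

```bash
#!/usr/bin/env bash
# Hypothetical wrapper that assembles the pktgen command shown above.
# Override PCI, LCORES, RX_CORES or TX_CORES in the environment if needed.
run_pktgen() {
    local pci="${PCI:-0000:01:00.0}"      # NIC port used as transmitter
    local lcores="${LCORES:-40-59}"       # lcores handed to DPDK (-l)
    local rx="${RX_CORES:-42-43}"         # lcores polling Rx on port 0
    local tx="${TX_CORES:-44-59}"         # lcores driving Tx on port 0
    local -a cmd
    cmd=(./Builddir/app/pktgen --file-prefix pktgen -a "$pci" -l "$lcores" -n 8
         -- -N -T -m "[${rx}:${tx}].0")
    if [ "${1:-}" = "--dry-run" ]; then
        echo "${cmd[*]}"      # print the assembled command only
    else
        "${cmd[@]}"           # run from the Pktgen-DPDK directory, as root
    fi
}

# Usage: cd Pktgen-DPDK && run_pktgen --dry-run
```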

Enable range mode from the Pktgen prompt:

Pktgen:/> set 0 dst mac <mac>
Pktgen:/> range 0 dst mac <mac> 00:00:00:00:00:00 00:00:00:00:00:00 00:00:00:00:00:00
Pktgen:/> range 0 size 64 64 64 0
Pktgen:/> enable 0 range
Pktgen:/> start 0
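To repeat a run without retyping the commands, you can save them to a command file and pass it with pktgen's `-f` option, which reads a `.pkt` command file. Confirm with `pktgen --help` for your version. In the following sketch, the helper name `write_range_script` and the file name `range64.pkt` are hypothetical. The MAC address in the usage line is a placeholder for the DUT port's MAC.

```bash
#!/usr/bin/env bash
# Write the interactive range commands above to a pktgen command file.
#   $1 = destination MAC, $2 = output file (default range64.pkt)
write_range_script() {
    local mac="$1" out="${2:-range64.pkt}"
    cat > "$out" <<EOF
set 0 dst mac ${mac}
range 0 dst mac ${mac} 00:00:00:00:00:00 00:00:00:00:00:00 00:00:00:00:00:00
range 0 size 64 64 64 0
enable 0 range
start 0
EOF
}

# Usage: write_range_script aa:bb:cc:dd:ee:ff range64.pkt
#        then add "-f range64.pkt" to the pktgen options after "--"
```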

The screenshot below shows packets being sent at 148.8 Mpps, the line rate of a 100G interface for 64-byte packets.

Table-1 lists the line rate for different Ethernet link speeds and packet sizes.

| Ethernet Link Speed | Packet Size (Bytes) | Line Rate (Mpps) |
|---|---|---|
| 10GbE | 64 | 14.88 |
| 10GbE | 128 | 8.45 |
| 10GbE | 256 | 4.53 |
| 10GbE | 512 | 2.35 |
| 25GbE | 64 | 37.2 |
| 25GbE | 128 | 21.11 |
| 25GbE | 256 | 11.32 |
| 25GbE | 512 | 5.87 |
| 100GbE | 64 | 148.8 |
| 100GbE | 128 | 84.46 |
| 100GbE | 256 | 45.29 |
| 100GbE | 512 | 23.5 |
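The values in Table-1 follow from the standard Ethernet line-rate formula. Each frame (size including FCS) occupies 20 extra bytes on the wire: a 7-byte preamble, a 1-byte start-of-frame delimiter, and a 12-byte inter-frame gap. The packet rate is therefore link_bps / ((size + 20) × 8). The following sketch computes it for other speeds or sizes. The function name is hypothetical.

```bash
#!/usr/bin/env bash
# Theoretical Ethernet line rate in Mpps for a link speed (Gb/s) and a frame
# size in bytes (including FCS). 20 bytes per frame are added for preamble,
# SFD and inter-frame gap.
line_rate_mpps() {
    awk -v gbps="$1" -v size="$2" 'BEGIN {
        printf "%.2f\n", gbps * 1e9 / ((size + 20) * 8) / 1e6
    }'
}

# Usage: line_rate_mpps 100 64    # 64-byte frames on 100GbE
```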

Start testpmd Application

Start the dpdk-testpmd application on the system under test. The following example (CMD-1) uses one forwarding core with 3 Rx queues and 3 Tx queues.

(CMD-1) dpdk-testpmd --file-prefix testpmd -l 40-79 -n 8 -a 0000:01:00.0,mprq_en=1,rxqs_min_mprq=1,rxq_cqe_comp_en=1,rxq_pkt_pad_en=1,txq_inline_max=1024,txq_inline_mpw=1024,txqs_min_inline=1,txq_mpw_en=1 -- -i --rss-ip --nb-cores=1 --burst=128 --txq=3 --rxq=3 --mbcache=512 --rxd=1024 --txd=1024 -a

This example uses data inline mode by enabling Multi-Packet Rx Queue (MPRQ), Enhanced Multi-Packet Write (eMPW), and CQE compression on Mellanox ConnectX-5 and ConnectX-6 NICs. These features save PCIe bandwidth and can improve performance when PCIe back pressure is high. However, they consume additional CPU cycles for data copying and may hurt performance when PCIe back pressure is low. The following example (CMD-2) disables data inline mode:

(CMD-2) dpdk-testpmd --file-prefix testpmd -l 40-79 -n 8 -a 0000:01:00.0,mprq_en=0,rxqs_min_mprq=1,rxq_cqe_comp_en=1,rxq_pkt_pad_en=1,txq_inline_max=0,txq_inline_mpw=0,txqs_min_inline=1,txq_mpw_en=1 -- -i --rss-ip --nb-cores=1 --burst=128 --txq=3 --rxq=3 --mbcache=512 --rxd=1024 --txd=1024 -a
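CMD-1 and CMD-2 differ only in three mlx5 device arguments: `mprq_en`, `txq_inline_max`, and `txq_inline_mpw`. The following sketch generates either set of device arguments from one place so the two runs cannot drift apart in other parameters. The helper name `mlx5_devargs` is hypothetical. It emits the same key=value pairs as CMD-1 and CMD-2, but in a different order, on the assumption that the order of key=value device arguments does not matter.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the mlx5 device arguments of CMD-1 (inline)
# or CMD-2 (noinline) for a PCI address.
mlx5_devargs() {
    local pci="$1" mode="$2"
    # Arguments shared by CMD-1 and CMD-2.
    local common="rxqs_min_mprq=1,rxq_cqe_comp_en=1,rxq_pkt_pad_en=1,txqs_min_inline=1,txq_mpw_en=1"
    case "$mode" in
        inline)   echo "${pci},mprq_en=1,${common},txq_inline_max=1024,txq_inline_mpw=1024" ;;
        noinline) echo "${pci},mprq_en=0,${common},txq_inline_max=0,txq_inline_mpw=0" ;;
        *)        echo "mode must be inline or noinline" >&2; return 1 ;;
    esac
}

# Usage:
#   dpdk-testpmd --file-prefix testpmd -l 40-79 -n 8 -a "$(mlx5_devargs 0000:01:00.0 noinline)" \
#       -- -i --rss-ip --nb-cores=1 --burst=128 --txq=3 --rxq=3 --mbcache=512 --rxd=1024 --txd=1024 -a
```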

The Rx/Tx packet rates of CMD-1 (inline mode) and CMD-2 (non-inline mode) with 1 and 6 cores are compared below:

| Cores | Direction | CMD-1 (inline mode), Mpps | CMD-2 (non-inline mode), Mpps |
|---|---|---|---|
| 1 | Rx | 28.5 | 68.1 |
| 1 | Tx | 28.5 | 63.2 |
| 6 | Rx | 148.8 | 148.8 |
| 6 | Tx | 148.1 | 60.2 |

At lower packet rates, CMD-2 is recommended; at high packet rates, CMD-1 is recommended.

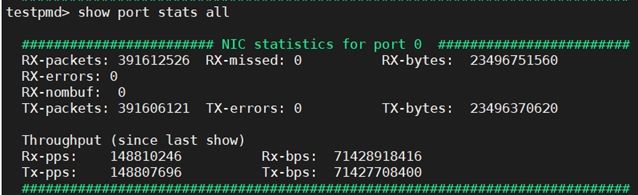

Some useful commands of testpmd:

show port stats all – Display the statistics data of each port

show fwd stats all – Display the forward statistics of each port

clear port stats all – Clear the statistics data of each port

Increase the core count and the number of Tx/Rx queues to reach line rate. The screenshot below shows both Rx-pps and Tx-pps at 148.8 Mpps.

For more details about the dpdk-testpmd command-line options, refer to the Testpmd Application User Guide.

For more details about the poll mode driver (PMD) for Mellanox ConnectX-5 and ConnectX-6, refer to “MLX5 poll mode driver”.

DPDK on Ampere AmpereOne Processors

AmpereOne AC03 is supported from DPDK v23.07;

AmpereOne AC04 is supported from DPDK v24.07.

DPDK v24.07 or later is recommended for the AmpereOne family. The “SLC installation” feature is enabled by default on the AmpereOne family and can improve performance.
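To check whether an installed DPDK meets these minimums, compare its version, for example from `pkg-config --modversion libdpdk`, which prints strings like 24.07.0, against the release listed for the part. The following sketch uses GNU `sort -V` for the comparison. The function names and the AC03/AC04 lookup are illustrative helpers built from the two lines above.

```bash
#!/usr/bin/env bash
# Return 0 if version $1 >= version $2 (e.g. 24.07.0 >= 23.07), via GNU sort -V.
version_ge() {
    [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# Minimum DPDK release per AmpereOne part, as listed in this guide.
min_dpdk_for() {
    case "$1" in
        AC03) echo 23.07 ;;
        AC04) echo 24.07 ;;
        *)    return 1 ;;
    esac
}

# Usage:
#   v=$(pkg-config --modversion libdpdk)
#   version_ge "$v" "$(min_dpdk_for AC04)" && echo "DPDK $v supports AC04"
```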