Deploying VoD on Canonical MicroK8s

3-Node Cluster on Ampere® Altra® Platforms

Overview

MicroK8s is an open-source system for automating deployment, scaling, and management of containerized applications. It provides the functionality of core Kubernetes components in a small footprint, scalable from a single node to a high-availability production cluster.

MicroK8s is a zero-ops, pure-upstream Kubernetes that runs anywhere from developer workstations to production, giving developers a fully featured, conformant, and secure Kubernetes system. It is also designed for local development, IoT appliances, CI/CD, and use at the edge.

The combination of Ampere Altra processors and a Canonical MicroK8s 3-node compact cluster is well suited to the scenarios listed below:

- CDN Edge cloud

- Video service providers

- Digital service providers

- Small and Medium Businesses who develop Cloud Native applications

- 5G User Plane Function (UPF)

This guide contains two sections. The first explains how to deploy Canonical MicroK8s on Ampere Altra platforms, including OpenEBS Mayastor for block storage. The second provides guidelines for deploying Video on Demand (VoD) as a Proof of Concept (PoC) demo on a MicroK8s 3-node compact cluster with Mayastor. The estimated time to complete this tutorial is one hour.

Prerequisites

1. A DNS service such as BIND (named) running on the bastion node

2. Three servers or VMs provisioned with Ubuntu 22.04.1 server (minimal with ssh server)

3. The following executable files on your laptop or workstation

- git

- kubectl version 1.24.x or later (available from https://kubernetes.io/docs/tasks/tools/)

- tar

- docker

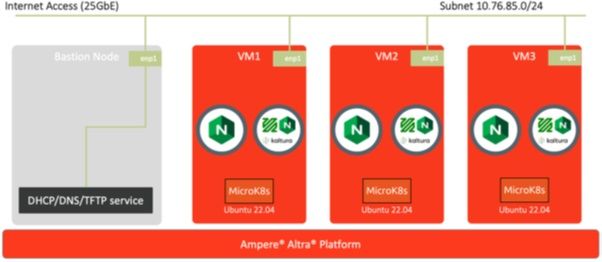

Figure 1: Network Overview of Canonical MicroK8s 3-Node Cluster

Setup Instructions

Deploying Canonical MicroK8s

The following are the steps to deploy Canonical MicroK8s on Ampere Altra platforms.

1. Verify the three Ubuntu 22.04 servers and add their IP addresses to the /etc/hosts file:

echo "10.76.85.90 u2204-1" | sudo tee -a /etc/hosts
echo "10.76.85.91 u2204-2" | sudo tee -a /etc/hosts
echo "10.76.85.92 u2204-3" | sudo tee -a /etc/hosts
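Because `tee -a` appends unconditionally, re-running step 1 would duplicate the entries. The following is a minimal sketch of an idempotent variant; the function name `add_host_entry` and the `HOSTS_FILE` variable are illustrative assumptions, and the example writes to a scratch file rather than to /etc/hosts.

```shell
# add_host_entry FILE IP NAME
# Appends "IP NAME" to FILE only when NAME is not already present,
# so the step can be re-run without creating duplicate lines.
add_host_entry() {
    file=$1 ip=$2 name=$3
    if grep -qE "[[:space:]]${name}([[:space:]]|\$)" "$file" 2>/dev/null; then
        echo "skip: ${name} already in ${file}"
    else
        printf '%s %s\n' "$ip" "$name" >> "$file"
        echo "added: ${ip} ${name}"
    fi
}

# Illustrative run against a scratch file. Point HOSTS_FILE at /etc/hosts
# (with sufficient privileges) once the result looks right.
HOSTS_FILE=${HOSTS_FILE:-$(mktemp)}
add_host_entry "$HOSTS_FILE" 10.76.85.90 u2204-1
add_host_entry "$HOSTS_FILE" 10.76.85.91 u2204-2
add_host_entry "$HOSTS_FILE" 10.76.85.92 u2204-3
```

Calling the function a second time with the same host name leaves the file unchanged.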

2. Based on the requirements for enabling OpenEBS Mayastor clustered storage, update the Ubuntu 22.04 servers using the steps listed below (refer to https://microk8s.io/docs/addon-mayastor):

a. Enable HugePages on each server:

sudo sysctl vm.nr_hugepages=1024
echo 'vm.nr_hugepages=1024' | sudo tee -a /etc/sysctl.conf

b. The nvme_fabrics and nvme_tcp modules are required on all hosts. Install the modules:

sudo apt install linux-modules-extra-$(uname -r)

Then enable them:

sudo modprobe nvme_tcp
echo 'nvme-tcp' | sudo tee -a /etc/modules-load.d/microk8s-mayastor.conf

c. Reboot the server:

sudo reboot
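After the reboot, it can be useful to confirm that the HugePages setting and the kernel module persisted before enabling Mayastor. The sketch below is one way to check, assuming the usual Linux `/proc/meminfo` and `/proc/modules` layouts; the function names are hypothetical, and a module compiled into the kernel would not appear in `/proc/modules`.

```shell
# hugepages_ok MEMINFO_FILE [MIN]
# Succeeds when HugePages_Total in MEMINFO_FILE is at least MIN (default 1024).
hugepages_ok() {
    awk -v min="${2:-1024}" '
        $1 == "HugePages_Total:" { total = $2; found = 1 }
        END { exit !(found && total + 0 >= min + 0) }
    ' "$1"
}

# module_loaded MODULES_FILE NAME
# Succeeds when NAME is listed in the first column of MODULES_FILE.
module_loaded() {
    awk -v m="$2" '$1 == m { found = 1 } END { exit !found }' "$1"
}

# On a real host, check the live kernel interfaces.
if [ -r /proc/meminfo ] && [ -r /proc/modules ]; then
    hugepages_ok /proc/meminfo 1024 && echo "HugePages: OK" || echo "HugePages: below 1024"
    module_loaded /proc/modules nvme_tcp && echo "nvme_tcp: loaded" || echo "nvme_tcp: not loaded"
fi
```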

3. Verify that the available MicroK8s stable channels include 1.24/stable:

$ snap info microk8s | grep stable | head -n5
1.25/stable:        v1.25.2 2022-09-30 (4056) 146MB classic
latest/stable:      v1.25.2 2022-10-07 (4056) 146MB classic
1.26/stable:        --
1.25-strict/stable: v1.25.2 2022-09-30 (4053) 146MB -
1.24/stable:        v1.24.6 2022-09-30 (4031) 198MB classic

4. Install MicroK8s on each server:

$ sudo snap install microk8s --classic --channel=1.24/stable
microk8s (1.24/stable) v1.24.6 from Canonical✓ installed

5. Check whether the MicroK8s instance is running and ensure the datastore is localhost:

$ sudo microk8s status --wait-ready | head -n4
microk8s is running
high-availability: no
  datastore master nodes: 127.0.0.1:19001
  datastore standby nodes: none

6. Add user to MicroK8s group:

$ sudo usermod -a -G microk8s $USER
$ sudo chown -f -R $USER ~/.kube

7. Create an alias for MicroK8s embedded kubectl. We can simply call kubectl after running newgrp microk8s:

$ sudo snap alias microk8s.kubectl kubectl
$ newgrp microk8s

8. Run kubectl get node on each server:

u2204-1:~$ kubectl get node
NAME      STATUS   ROLES    AGE     VERSION
u2204-1   Ready    <none>   3m27s   v1.24.6-2+4d6cc142010b44

u2204-2:~$ kubectl get node
NAME      STATUS   ROLES    AGE     VERSION
u2204-2   Ready    <none>   3m33s   v1.24.6-2+4d6cc142010b44

u2204-3:~$ kubectl get node
NAME      STATUS   ROLES    AGE     VERSION
u2204-3   Ready    <none>   3m43s   v1.24.6-2+4d6cc142010b44

9. Join MicroK8s instances to the cluster. Make sure the firewall is not blocking the ports listed below (refer to https://microk8s.io/docs/services-and-ports):

- TCP: 80, 443, 6443, 16443, 10250, 10255, 25000, 12379, 10257, 10259, 19001, 32000

- UDP: 4789

u2204-1:~$ sudo microk8s add-node
From the node you wish to join to this cluster, run the following:
microk8s join 10.76.85.90:25000/abc319f1f671739bde225deb2a8ee69d/bd48bae5b378

Use the '--worker' flag to join a node as a worker not running the control plane, eg:
microk8s join 10.76.85.90:25000/abc319f1f671739bde225deb2a8ee69d/bd48bae5b378 --worker

If the node you are adding is not reachable through the default interface you can use one of the following:
microk8s join 10.76.85.90:25000/abc319f1f671739bde225deb2a8ee69d/bd48bae5b378
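If a host firewall is active, the ports listed in step 9 must be opened on every node before joining. The sketch below only prints candidate rules and does not apply them. It assumes `ufw` is the firewall in use, which the guide does not state; the function name is illustrative.

```shell
# Ports taken from the list in step 9.
TCP_PORTS="80 443 6443 16443 10250 10255 25000 12379 10257 10259 19001 32000"
UDP_PORTS="4789"

# print_ufw_rules
# Prints (does not execute) one "ufw allow" command per port.
print_ufw_rules() {
    for p in $TCP_PORTS; do echo "sudo ufw allow ${p}/tcp"; done
    for p in $UDP_PORTS; do echo "sudo ufw allow ${p}/udp"; done
}

print_ufw_rules
```

Review the printed commands and run only those that fit your security policy.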

10. Switch to node u2204-2, and join it to the cluster. Wait 20 to 30 seconds:

u2204-2:~$ microk8s join 10.76.85.90:25000/abc319f1f671739bde225deb2a8ee69d/bd48bae5b378
Contacting cluster at 10.76.85.90
Waiting for this node to finish joining the cluster. .. .. .. .

11. Once it completes, check the cluster’s status:

u2204-1:~$ kubectl get node
NAME      STATUS   ROLES    AGE     VERSION
u2204-1   Ready    <none>   7m44s   v1.24.6-2+4d6cc142010b44
u2204-2   Ready    <none>   109s    v1.24.6-2+4d6cc142010b44

12. Repeat step 9 (microk8s add-node on u2204-1) to generate a new token for joining the third node to the cluster.

13. Switch to node u2204-3, and join it to the cluster. Wait 20 to 30 seconds:

u2204-3:~$ microk8s join 10.76.85.90:25000/77a6436adb245400154bde41aeb04bc1/bd48bae5b378
Contacting cluster at 10.76.85.90
Waiting for this node to finish joining the cluster. .. .. ..

14. Check the cluster on node u2204-1:

u2204-1:~$ kubectl get node
NAME      STATUS   ROLES    AGE     VERSION
u2204-1   Ready    <none>   9m14s   v1.24.6-2+4d6cc142010b44
u2204-2   Ready    <none>   3m19s   v1.24.6-2+4d6cc142010b44
u2204-3   Ready    <none>   46s     v1.24.6-2+4d6cc142010b44

15. Run microk8s status. After the third node has been added, ensure that it reports “high-availability” as yes and that the datastore master nodes list contains all the nodes in the cluster:

helm              # (core) Helm 2 - the package manager for Kubernetes
helm3             # (core) Helm 3 - Kubernetes package manager
host-access       # (core) Allow Pods connecting to Host services smoothly
hostpath-storage  # (core) Storage class; allocates storage from host directory
ingress           # (core) Ingress controller for external access
mayastor          # (core) OpenEBS MayaStor
metallb           # (core) Loadbalancer for your Kubernetes cluster
metrics-server    # (core) K8s Metrics Server for API access to service metrics
prometheus        # (core) Prometheus operator for monitoring and logging
rbac              # (core) Role-Based Access Control for authorisation
registry          # (core) Private image registry exposed on localhost:32000
storage           # (core) Alias to hostpath-storage add-on, deprecated
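The high-availability check in step 15 can also be scripted. The following sketch parses `microk8s status` text from standard input; the function names are hypothetical, and the sample text reuses the status output shown later in this guide.

```shell
# ha_enabled: succeeds when the status text on stdin reports
# "high-availability: yes".
ha_enabled() {
    grep -q 'high-availability: yes'
}

# datastore_nodes: prints how many addresses follow
# "datastore master nodes:" in the status text on stdin.
datastore_nodes() {
    awk '/datastore master nodes:/ { print NF - 3 }'
}

# Sample input copied from the status output of a 3-node cluster.
sample='microk8s is running
high-availability: yes
  datastore master nodes: 10.76.85.90:19001 10.76.85.91:19001 10.76.85.92:19001
  datastore standby nodes: none'

printf '%s\n' "$sample" | ha_enabled && echo "HA is enabled"
echo "datastore master nodes: $(printf '%s\n' "$sample" | datastore_nodes)"
```

On a cluster node, the same functions can be fed live data, for example `microk8s status | ha_enabled`.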

16. Enable the dns, helm3, and ingress add-ons:

u2204-1:~$ microk8s enable dns helm3 ingress
Infer repository core for addon dns
Infer repository core for addon helm3
Infer repository core for addon ingress
Enabling DNS
Applying manifest
serviceaccount/coredns created
configmap/coredns created
deployment.apps/coredns created
service/kube-dns created
clusterrole.rbac.authorization.k8s.io/coredns created
clusterrolebinding.rbac.authorization.k8s.io/coredns created
Restarting kubelet
Adding argument --cluster-domain to nodes.
Configuring node 10.76.85.91
Configuring node 10.76.85.92
Adding argument --cluster-dns to nodes.
Configuring node 10.76.85.91
Configuring node 10.76.85.92
Restarting nodes.
Configuring node 10.76.85.91
Configuring node 10.76.85.92
DNS is enabled
Enabling Helm 3
Fetching helm version v3.8.0.
  % Total    % Received % Xferd  Average Speed   Time    Time     Time  Current
                                 Dload  Upload   Total   Spent    Left  Speed
100 11.7M  100 11.7M    0     0  3195k      0  0:00:03  0:00:03 --:--:-- 3195k
Helm 3 is enabled
Enabling Ingress
ingressclass.networking.k8s.io/public created
namespace/ingress created
serviceaccount/nginx-ingress-microk8s-serviceaccount created
clusterrole.rbac.authorization.k8s.io/nginx-ingress-microk8s-clusterrole created
role.rbac.authorization.k8s.io/nginx-ingress-microk8s-role created
clusterrolebinding.rbac.authorization.k8s.io/nginx-ingress-microk8s created
rolebinding.rbac.authorization.k8s.io/nginx-ingress-microk8s created
configmap/nginx-load-balancer-microk8s-conf created
configmap/nginx-ingress-tcp-microk8s-conf created
configmap/nginx-ingress-udp-microk8s-conf created
daemonset.apps/nginx-ingress-microk8s-controller created
Ingress is enabled

17. Enable the MetalLB add-on with the hosts’ IP address range (10.76.85.90-10.76.85.92):

u2204-1:~$ microk8s enable metallb:10.76.85.90-10.76.85.92
Infer repository core for addon metallb
Enabling MetalLB
Applying Metallb manifest
namespace/metallb-system created
secret/memberlist created
Warning: policy/v1beta1 PodSecurityPolicy is deprecated in v1.21+, unavailable in v1.25+
podsecuritypolicy.policy/controller created
podsecuritypolicy.policy/speaker created
serviceaccount/controller created
serviceaccount/speaker created
clusterrole.rbac.authorization.k8s.io/metallb-system:controller created
clusterrole.rbac.authorization.k8s.io/metallb-system:speaker created
role.rbac.authorization.k8s.io/config-watcher created
role.rbac.authorization.k8s.io/pod-lister created
clusterrolebinding.rbac.authorization.k8s.io/metallb-system:controller created
clusterrolebinding.rbac.authorization.k8s.io/metallb-system:speaker created
rolebinding.rbac.authorization.k8s.io/config-watcher created
rolebinding.rbac.authorization.k8s.io/pod-lister created
Warning: spec.template.spec.nodeSelector[beta.kubernetes.io/os]: deprecated since v1.14; use "kubernetes.io/os" instead
daemonset.apps/speaker created
deployment.apps/controller created
configmap/config created
MetalLB is enabled

18. Enable the Mayastor add-on with local storage and set the default pool size to 20 GB:

u2204-1:~$ microk8s enable core/mayastor --default-pool-size 20G
Checking for HugePages (>= 1024)...
Checking for HugePages (>= 1024)... OK
Checking for nvme_tcp module...
Checking for nvme_tcp module... OK
Checking for addon core/dns...
Checking for addon core/dns... OK
Checking for addon core/helm3...
Checking for addon core/helm3... OK
namespace/mayastor created
Default image size set to 20G
WARNING: Kubernetes configuration file is group-readable. This is insecure. Location: /var/snap/microk8s/4031/credentials/client.config
Getting updates for unmanaged Helm repositories...
...Successfully got an update from the "https://raw.githubusercontent.com/canonical/mayastor/develop/chart" chart repository
...Successfully got an update from the "https://raw.githubusercontent.com/canonical/mayastor-control-plane/develop/chart" chart repository
...Successfully got an update from the "https://raw.githubusercontent.com/canonical/etcd-operator/master/chart" chart repository
Saving 3 charts
Downloading etcd-operator from repo https://raw.githubusercontent.com/canonical/etcd-operator/master/chart
Downloading mayastor from repo https://raw.githubusercontent.com/canonical/mayastor/develop/chart
Downloading mayastor-control-plane from repo https://raw.githubusercontent.com/canonical/mayastor-control-plane/develop/chart
Deleting outdated charts
WARNING: Kubernetes configuration file is group-readable. This is insecure. Location: /var/snap/microk8s/4031/credentials/client.config
NAME: mayastor
LAST DEPLOYED: Wed Oct 12 19:24:54 2022
NAMESPACE: mayastor
STATUS: deployed
REVISION: 1
TEST SUITE: None
=============================================================
Mayastor has been installed and will be available shortly.
Mayastor will run for all nodes in your MicroK8s cluster by default. Use the
'microk8s.io/mayastor=disable' label to disable any node. For example:
microk8s.kubectl label node u2204-1 microk8s.io/mayastor=disable

19. Check the status of the mayastor namespace. Some pods are still initializing at first; repeat the check until all pods are Running:

u2204-1:~$ kubectl get all -n mayastor
NAME                                          READY   STATUS            RESTARTS      AGE
pod/csi-controller-b5b975dc8-kc96n            0/3     Init:0/1          0             75s
pod/etcd-operator-mayastor-869bd676c6-l92l9   1/1     Running           0             75s
pod/mayastor-cgfg5                            0/1     Init:0/3          0             75s
pod/mayastor-csi-hqzg8                        2/2     Running           0             75s
pod/mayastor-csi-grspd                        2/2     Running           0             75s
pod/mayastor-csi-5g6xn                        2/2     Running           0             75s
pod/etcd-jvqgv5r2m7                           1/1     Running           0             65s
pod/etcd-z5r6g7vcm4                           1/1     Running           0             40s
pod/core-agents-c9b664764-2r8pc               1/1     Running           2 (23s ago)   75s
pod/etcd-mhp2mzv6qg                           0/1     PodInitializing   0             8s
pod/rest-75c66999bd-v2zgg                     0/1     Init:1/2          0             75s
pod/mayastor-blctg                            0/1     Init:1/3          0             75s
pod/msp-operator-6b67c55f48-4mpvt             0/1     Init:1/2          0             75s
pod/mayastor-fq648                            0/1     Init:1/3          0             75s

NAME                  TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)             AGE
service/core          ClusterIP   None             <none>        50051/TCP           75s
service/rest          ClusterIP   10.152.183.106   <none>        8080/TCP,8081/TCP   75s
service/etcd-client   ClusterIP   10.152.183.135   <none>        2379/TCP            65s
service/etcd          ClusterIP   None             <none>        2379/TCP,2380/TCP   65s

NAME                          DESIRED   CURRENT   READY   UP-TO-DATE   AVAILABLE   NODE SELECTOR   AGE
daemonset.apps/mayastor       3         3         0       3            0           <none>          75s
daemonset.apps/mayastor-csi   3         3         3       3            3           <none>          75s

NAME                                     READY   UP-TO-DATE   AVAILABLE   AGE
deployment.apps/csi-controller           0/1     1            0           75s
deployment.apps/msp-operator             0/1     1            0           75s
deployment.apps/rest                     0/1     1            0           75s
deployment.apps/etcd-operator-mayastor   1/1     1            1           75s
deployment.apps/core-agents              1/1     1            1           75s

NAME                                                DESIRED   CURRENT   READY   AGE
replicaset.apps/csi-controller-b5b975dc8            1         1         0       75s
replicaset.apps/msp-operator-6b67c55f48             1         1         0       75s
replicaset.apps/rest-75c66999bd                     1         1         0       75s
replicaset.apps/etcd-operator-mayastor-869bd676c6   1         1         1       75s
replicaset.apps/core-agents-c9b664764               1         1         1       75s

u2204-1:~$ kubectl get pod -n mayastor -owide
NAME                                      READY   STATUS    RESTARTS      AGE    IP             NODE      NOMINATED NODE   READINESS GATES
etcd-operator-mayastor-869bd676c6-l92l9   1/1     Running   0             119s   10.1.107.196   u2204-1   <none>           <none>
mayastor-csi-hqzg8                        2/2     Running   0             119s   10.76.85.92    u2204-3   <none>           <none>
mayastor-csi-grspd                        2/2     Running   0             119s   10.76.85.91    u2204-2   <none>           <none>
mayastor-csi-5g6xn                        2/2     Running   0             119s   10.76.85.90    u2204-1   <none>           <none>
etcd-jvqgv5r2m7                           1/1     Running   0             109s   10.1.254.132   u2204-3   <none>           <none>
etcd-z5r6g7vcm4                           1/1     Running   0             84s    10.1.107.197   u2204-1   <none>           <none>
core-agents-c9b664764-2r8pc               1/1     Running   2 (67s ago)   119s   10.1.182.67    u2204-2   <none>           <none>
etcd-mhp2mzv6qg                           1/1     Running   0             52s    10.1.182.68    u2204-2   <none>           <none>
msp-operator-6b67c55f48-4mpvt             1/1     Running   0             119s   10.1.107.195   u2204-1   <none>           <none>
rest-75c66999bd-v2zgg                     1/1     Running   0             119s   10.1.254.131   u2204-3   <none>           <none>
mayastor-fq648                            1/1     Running   0             119s   10.76.85.90    u2204-1   <none>           <none>
mayastor-blctg                            1/1     Running   0             119s   10.76.85.92    u2204-3   <none>           <none>
mayastor-cgfg5                            1/1     Running   0             119s   10.76.85.91    u2204-2   <none>           <none>
csi-controller-b5b975dc8-kc96n            3/3     Running   0             119s   10.76.85.92    u2204-3   <none>           <none>
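Rather than reading the pod table by eye, the pods that are not yet ready can be filtered out. This is a minimal sketch with a hypothetical helper name; it treats any pod whose READY column is not n/n, or whose STATUS is not Running, as not ready, so a Completed job pod would also be listed.

```shell
# not_ready_pods: reads `kubectl get pod --no-headers` output on stdin and
# prints the name of every pod that is not fully ready and Running.
not_ready_pods() {
    awk '{
        split($2, r, "/")
        if (r[1] != r[2] || $3 != "Running") print $1
    }'
}

# Sample rows taken from the first listing in step 19.
not_ready_pods <<'EOF'
csi-controller-b5b975dc8-kc96n            0/3   Init:0/1   0   75s
etcd-operator-mayastor-869bd676c6-l92l9   1/1   Running    0   75s
mayastor-csi-hqzg8                        2/2   Running    0   75s
EOF
# prints: csi-controller-b5b975dc8-kc96n
```

Against a live cluster, the input would come from `kubectl get pod -n mayastor --no-headers | not_ready_pods`; an empty result means every pod is ready.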

20. Edit the Nginx ingress controller DaemonSet:

u2204-1:~$ kubectl edit ds -n ingress nginx-ingress-microk8s-controller
## the original
--publish-status-address=127.0.0.1
## replace 127.0.0.1 with the hosts' IP addresses
--publish-status-address=10.76.85.90,10.76.85.91,10.76.85.92
daemonset.apps/nginx-ingress-microk8s-controller edited
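The comma-separated address list in step 20 covers the same hosts as the range passed to MetalLB in step 17. As an illustration, the list can be derived from the range; the helper name `expand_ip_range` is hypothetical, and the sketch assumes both ends of the range share their first three octets.

```shell
# expand_ip_range START-END
# Prints the addresses from START to END as a comma-separated list.
# Assumption: START and END differ only in the last octet.
expand_ip_range() {
    start=${1%-*}
    end=${1#*-}
    prefix=${start%.*}
    i=${start##*.}
    last=${end##*.}
    out=""
    while [ "$i" -le "$last" ]; do
        out="${out:+$out,}${prefix}.${i}"
        i=$((i + 1))
    done
    echo "$out"
}

echo "--publish-status-address=$(expand_ip_range 10.76.85.90-10.76.85.92)"
# prints: --publish-status-address=10.76.85.90,10.76.85.91,10.76.85.92
```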

21. Run microk8s status to verify the add-ons’ status:

u2204-1:~$ microk8s status
microk8s is running
high-availability: yes
  datastore master nodes: 10.76.85.90:19001 10.76.85.91:19001 10.76.85.92:19001
  datastore standby nodes: none
addons:
  enabled:
    dns                  # (core) CoreDNS
    ha-cluster           # (core) Configure high availability on the current node
    helm3                # (core) Helm 3 - Kubernetes package manager
    ingress              # (core) Ingress controller for external access
    mayastor             # (core) OpenEBS MayaStor
    metallb              # (core) Loadbalancer for your Kubernetes cluster
  disabled:
    community            # (core) The community addons repository
    dashboard            # (core) The Kubernetes dashboard
    helm                 # (core) Helm 2 - the package manager for Kubernetes
    host-access          # (core) Allow Pods connecting to Host services smoothly
    hostpath-storage     # (core) Storage class; allocates storage from host directory
    metrics-server       # (core) K8s Metrics Server for API access to service metrics
    prometheus           # (core) Prometheus operator for monitoring and logging
    rbac                 # (core) Role-Based Access Control for authorisation
    registry             # (core) Private image registry exposed on localhost:32000
    storage              # (core) Alias to hostpath-storage add-on, deprecated

22. Check StorageClass:

u2204-1:~$ kubectl get sc
NAME         PROVISIONER               RECLAIMPOLICY   VOLUMEBINDINGMODE      ALLOWVOLUMEEXPANSION   AGE
mayastor-3   io.openebs.csi-mayastor   Delete          WaitForFirstConsumer   false                  3m58s
mayastor     io.openebs.csi-mayastor   Delete          WaitForFirstConsumer   false                  3m58s

23. Get Mayastor pool information from the cluster:

u2204-1:~$ kubectl get mayastorpool -n mayastor
NAME                    NODE      STATUS   CAPACITY      USED   AVAILABLE
microk8s-u2204-3-pool   u2204-3   Online   21449670656   0      21449670656
microk8s-u2204-1-pool   u2204-1   Online   21449670656   0      21449670656
microk8s-u2204-2-pool   u2204-2   Online   21449670656   0      21449670656

24. Add the second drive, /dev/nvme1n1, on each node as a Mayastor pool:

u2204-1:~$ sudo snap run --shell microk8s -c '
$SNAP_COMMON/addons/core/addons/mayastor/pools.py add --node u2204-1 --device /dev/nvme1n1
'
mayastorpool.openebs.io/pool-u2204-1-nvme1n1 created

u2204-1:~$ sudo snap run --shell microk8s -c '
$SNAP_COMMON/addons/core/addons/mayastor/pools.py add --node u2204-2 --device /dev/nvme1n1
'
mayastorpool.openebs.io/pool-u2204-2-nvme1n1 created

u2204-1:~$ sudo snap run --shell microk8s -c '
$SNAP_COMMON/addons/core/addons/mayastor/pools.py add --node u2204-3 --device /dev/nvme1n1
'
mayastorpool.openebs.io/pool-u2204-3-nvme1n1 created

25. Get Mayastor pool information:

u2204-1:~$ kubectl get mayastorpool -n mayastor
NAME                    NODE      STATUS   CAPACITY         USED   AVAILABLE
microk8s-u2204-3-pool   u2204-3   Online   21449670656      0      21449670656
microk8s-u2204-1-pool   u2204-1   Online   21449670656      0      21449670656
microk8s-u2204-2-pool   u2204-2   Online   21449670656      0      21449670656
pool-u2204-1-nvme1n1    u2204-1   Online   10983949362790   0      10983949362790
pool-u2204-2-nvme1n1    u2204-2   Online   10983949362790   0      10983949362790
pool-u2204-3-nvme1n1    u2204-3   Online   10983949362790   0      10983949362790
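The CAPACITY column is reported in bytes, which is hard to compare with the 20 GB default pool size at a glance. The sketch below converts the column to GiB; the function name is an assumption, and the sample rows are copied from the listing in step 25.

```shell
# pool_capacity_gib: reads `kubectl get mayastorpool --no-headers` output on
# stdin and prints each pool name with its CAPACITY column converted to GiB.
pool_capacity_gib() {
    awk '{ printf "%s %.2f GiB\n", $1, $4 / (1024 * 1024 * 1024) }'
}

pool_capacity_gib <<'EOF'
microk8s-u2204-1-pool u2204-1 Online 21449670656 0 21449670656
pool-u2204-1-nvme1n1 u2204-1 Online 10983949362790 0 10983949362790
EOF
```

The default pool works out to just under 20 GiB, which is consistent with the 20G image size requested in step 18.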

26. Generate a YAML file, test-pod-with-pvc.yaml, that defines a Persistent Volume Claim and an Nginx pod that mounts it:

u2204-1:~$ cat << EOF > test-pod-with-pvc.yaml
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: test-pvc
spec:
  storageClassName: mayastor
  accessModes: [ReadWriteOnce]
  resources: { requests: { storage: 5Gi } }
---
apiVersion: v1
kind: Pod
metadata:
  name: test-nginx
spec:
  volumes:
    - name: pvc
      persistentVolumeClaim:
        claimName: test-pvc
  containers:
    - name: nginx
      image: nginx
      ports:
        - containerPort: 80
      volumeMounts:
        - name: pvc
          mountPath: /usr/share/nginx/html
EOF

27. Create a Persistent Volume Claim (PVC) on Mayastor and a pod with Nginx container:

u2204-1:~$ kubectl apply -f test-pod-with-pvc.yaml
persistentvolumeclaim/test-pvc created
pod/test-nginx created

u2204-1:~$ kubectl get pod,pvc
NAME             READY   STATUS              RESTARTS   AGE
pod/test-nginx   0/1     ContainerCreating   0          12s

NAME                             STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS   AGE
persistentvolumeclaim/test-pvc   Bound    pvc-919bc814-757f-4c20-8b7b-06e3da7f95e7   5Gi        RWO            mayastor       12s

u2204-1:~$ kubectl get pod,pvc
NAME             READY   STATUS    RESTARTS   AGE
pod/test-nginx   1/1     Running   0          17s

NAME                             STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS   AGE
persistentvolumeclaim/test-pvc   Bound    pvc-919bc814-757f-4c20-8b7b-06e3da7f95e7   5Gi        RWO            mayastor       17s

28. Run kubectl exec -it pod/test-nginx -- sh to access Nginx container in pod/test-nginx to verify the PVC being bound with Nginx container inside the pod:

u2204-1:~$ kubectl exec -it pod/test-nginx -- sh
# cd /usr/share/nginx/html
# ls -al
total 24
drwxr-xr-x 3 root root  4096 Oct 19 04:01 .
drwxr-xr-x 3 root root  4096 Oct  5 03:25 ..
drwx------ 2 root root 16384 Oct 19 04:01 lost+found
# mount | grep nginx
/dev/nvme2n1 on /usr/share/nginx/html type ext4 (rw,relatime,stripe=32)
# lsblk | grep nvme2n1
nvme2n1 259:6 0 5G 0 disk /usr/share/nginx/html

29. Delete the PVC and Nginx container:

u2204-1:~$ kubectl delete -f test-pod-with-pvc.yaml
pod/test-nginx deleted
persistentvolumeclaim/test-pvc deleted

If you prefer to use the kubectl command on your local host, the following command outputs the kubeconfig file from MicroK8s:

% ssh u2204-1 microk8s config
apiVersion: v1
clusters:
- cluster:
    certificate-authority-data: LX1tLS0CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSUREekNDQWZlZ0F3SUJBZ0lVY29oYWd0TmlnK3l6d2EvVkVoUTVmSi9YY0NFd0RRWUpLb1pJaHZjTkFRRUwKQlFBd0Z6RVZNQk1HQTFVRUF3d01NVEF1TVRVeUxqRTRNeTR4TUI0WERUSXlNVEF4TWpFNE5ESXpNMW9YRFRNeQpNVEF3T1RFNE5ESXpNMW93RnpFVk1CTUdBMVVFQXd3TU1UQXVNVFV5TGpFNE15NHhNSUlCSWpBTkJna3Foa2lHCjl3MEJBUUVGQUFPQ0FROEFNSUlCQ2dLQ0FRRUF4NXR4SU9MN2VVeVp2NlhmbmNkSVg5cnIvUGNNbGk4ZnZ1QU0KL2FQZTFOaGNuVlpxWnN3T3NyUnY2aHlDNm9PM1psTUVPQVhqTjlkOWwxMkxaWU9ueklYaHVRRWdOenZWTnJSNAp0M0NKQ0NYand0N2V6elI0NXQxTWMvSVhEbnVVSHFrcS80eWg1UTlkNkNjNVJQYTB2amFrWExCS2l2amxsNm1ECi9OTlJEUUMrYUsxU1Rwam1ZSGI1WDEyMk5wVlcraEp5bEwyblF6VC9ocStIaFc1bUZ1VnUrY1BrWThKTUdZeFUKKytsM2pJRzZTTTlsQTU4U0xhQ1loU042ZUFGc1JPUGdVeHRCZ2hGTnk5Yno4bWdtQTRGS21QTWZVbFcvSlFycAprMi9keTE5Ry9YSWhJNFZ5eW80VHd2Z01Jak5TMDhJcllOSFJKQmd2dmhpL0Jobndld0lEQVFBQm8xTXdVVEFkCkJnTlZIUTRFRmdRVSs1cU9xQkZqeHk3RG1SMkduNmpsUTNRV0FoTXdId1lEVlIwakJCZ3dGb0FVKzVxT3FCRmoKeHk3RG1SMkduNmpsUTNRV0FoTXdEd1lEVlIwVEFRSC9CQVV3QXdFQi96QU5CZ2txaGtpRzl3MEJBUXNGQUFPQwpBUUVBQytUYVIwWmZzdDRZZTdwMU9HZzhUeUkyMm5zelZzUFZmMEpOWjduWGJFUXQ1RkhsMVBFVXdsT2hGV25JCmpOV2tHaTlHbHMvb2NWUGlqSDY0ZTNERjhpQ1RFZmJNYkRrY3QwTURWMk56T1JnRUJNODlwRXJ0R3lYVXFSS2YKZE42U2o1WVRLWTRwcGk3Ykp6cVJ0bWNOWklFWWdMemZYKzhNS1NzVDQrNStEa0dzYUdFcVRnL2QvNDMvUEk1UQpsekNnbnJEVndmbmVjVUdQZDJ4NzhFNWxWSjYvUG51NnBuZGFqWjc1MWZqRjY2dEE2Uys3WjluczdCSmZ2UDNjCnQ2MWE5NjdiaUg1Znl5Z3ZJZTJNa0N3RldEbmxyTThRdVdjVGJzbFpmNUpsMjdEZXpncDVJWHJhNVpSQnFuQksKNHJvVWtCTUNIbzRKeGxHdmkxMytIaUtla3c9PQotLS0tLUVORCBDRVJUSUZJQ0FURS0tLS0tCv==
    server: https://10.76.85.90:16443
  name: microk8s-cluster
contexts:
- context:
    cluster: microk8s-cluster
    user: admin
  name: microk8s
current-context: microk8s
kind: Config
preferences: {}
users:
- name: admin
  user:
    token: XVNmZjFkWFppZ3VkdmRkeWE5bVpMeWVrLy9LWEhtb2dtaVdkTWtxMkZCMD1K

Save the output to a local file, copy it to ~/.kube/config, and verify access to the cluster:

% ssh u2204-1 microk8s config > kubeconfig
% mkdir -p ~/.kube
% cp kubeconfig ~/.kube/config
% export KUBECONFIG=~/.kube/config
% kubectl get nodes
NAME      STATUS   ROLES    AGE     VERSION
u2204-1   Ready    <none>   1h54m   v1.24.6-2+4d6cc142010b44
u2204-2   Ready    <none>   1h32m   v1.24.6-2+4d6cc142010b44
u2204-3   Ready    <none>   1h25m   v1.24.6-2+4d6cc142010b44
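Because the kubeconfig contains an admin token, it is worth restricting the copied file to its owner. The following sketch wraps the copy in a small helper; `install_kubeconfig` is a hypothetical name, not part of MicroK8s or kubectl.

```shell
# install_kubeconfig SRC [DEST]
# Copies SRC to DEST (default: ~/.kube/config), creating the target
# directory if needed and making the file readable by its owner only.
install_kubeconfig() {
    src=$1
    dest=${2:-$HOME/.kube/config}
    mkdir -p "$(dirname "$dest")" &&
    cp "$src" "$dest" &&
    chmod 600 "$dest"
}

# Example (not run here):
#   ssh u2204-1 microk8s config > kubeconfig
#   install_kubeconfig kubeconfig
```

Note that the helper overwrites any existing file at the destination, so back up a kubeconfig you still need.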

Deploying Video on Demand Proof of Concept Demo on MicroK8s

The following are the steps to deploy VoD PoC demo on MicroK8s on Ampere Altra platforms.

1. Access the target MicroK8s cluster with the kubeconfig from your local host, or directly on a MicroK8s server:

% kubectl get nodes -owide
NAME      STATUS   ROLES    AGE     VERSION                    INTERNAL-IP   EXTERNAL-IP   OS-IMAGE             KERNEL-VERSION      CONTAINER-RUNTIME
u2204-2   Ready    <none>   1h56m   v1.24.6-2+4d6cc142010b44   10.76.85.91   <none>        Ubuntu 22.04.1 LTS   5.15.0-50-generic   containerd://1.5.13
u2204-3   Ready    <none>   1h34m   v1.24.6-2+4d6cc142010b44   10.76.85.92   <none>        Ubuntu 22.04.1 LTS   5.15.0-50-generic   containerd://1.5.13
u2204-1   Ready    <none>   1h27m   v1.24.6-2+4d6cc142010b44   10.76.85.90   <none>        Ubuntu 22.04.1 LTS   5.15.0-50-generic   containerd://1.5.13

2. Obtain the source code from the two GitHub repos:

- https://github.com/AmpereComputing/nginx-hello-container

- https://github.com/AmpereComputing/nginx-vod-module-container

$ git clone https://github.com/AmpereComputing/nginx-hello-container
$ git clone https://github.com/AmpereComputing/nginx-vod-module-container

3. Deploy Nginx web server container in StatefulSet with its PVC template, service, and ingress:

$ kubectl create -f nginx-hello-container/MicroK8s/nginx-front-app.yaml

4. Deploy Nginx VoD container in StatefulSet with its PVC template, service, and ingress:

$ kubectl create -f nginx-vod-module-container/MicroK8s/nginx-vod-app.yaml

5. Get the deployment status in the vod-poc namespace:

% kubectl get pod,pvc -n vod-poc -owide
NAME                    READY   STATUS    RESTARTS   AGE     IP             NODE      NOMINATED NODE   READINESS GATES
pod/nginx-front-app-0   1/1     Running   0          2h13m   10.1.182.74    u2204-2   <none>           <none>
pod/nginx-front-app-1   1/1     Running   0          2h12m   10.1.254.138   u2204-3   <none>           <none>
pod/nginx-front-app-2   1/1     Running   0          2h10m   10.1.107.206   u2204-1   <none>           <none>
pod/nginx-vod-app-0     1/1     Running   0          1h58m   10.1.182.75    u2204-2   <none>           <none>
pod/nginx-vod-app-1     1/1     Running   0          1h57m   10.1.107.207   u2204-1   <none>           <none>
pod/nginx-vod-app-2     1/1     Running   0          1h56m   10.1.254.139   u2204-3   <none>           <none>

NAME                                                          STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS   AGE     VOLUMEMODE
persistentvolumeclaim/nginx-front-app-pvc-nginx-front-app-0   Bound    pvc-e88f082a-9a69-437d-be6c-b381867d155e   10Gi       RWO            mayastor       2h11m   Filesystem
persistentvolumeclaim/nginx-front-app-pvc-nginx-front-app-1   Bound    pvc-94a55190-0c2c-4be0-99a9-0c227cf51af9   10Gi       RWO            mayastor       2h10m   Filesystem
persistentvolumeclaim/nginx-front-app-pvc-nginx-front-app-2   Bound    pvc-4bdd9ace-d727-4972-aa08-a93d41f60e94   10Gi       RWO            mayastor       2h10m   Filesystem
persistentvolumeclaim/nginx-vod-app-pvc-nginx-vod-app-0       Bound    pvc-f80b47aa-17df-435e-9591-ebcd046b19fc   20Gi       RWO            mayastor       1h57m   Filesystem
persistentvolumeclaim/nginx-vod-app-pvc-nginx-vod-app-1       Bound    pvc-21b23a40-ccdb-44c5-9f33-5b2a4e2efa28   20Gi       RWO            mayastor       1h56m   Filesystem
persistentvolumeclaim/nginx-vod-app-pvc-nginx-vod-app-2       Bound    pvc-00841aea-062d-42a8-b983-e256c4a2fa0f   20Gi       RWO            mayastor       1h55m   Filesystem

% kubectl get svc,ingress -n vod-poc -owide
NAME                          TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)    AGE     SELECTOR
service/nginx-front-app-svc   ClusterIP   10.152.183.71   <none>        8080/TCP   2h18m   app=nginx-front-app
service/nginx-vod-app-svc     ClusterIP   10.152.183.80   <none>        8080/TCP   2h18m   app=nginx-vod-app

NAME                                                CLASS    HOSTS                       ADDRESS                               PORTS   AGE
ingress.networking.k8s.io/nginx-front-app-ingress   <none>   demo.microk8s.hhii.ampere   10.76.85.90,10.76.85.91,10.76.85.92   80      2h18m
ingress.networking.k8s.io/nginx-vod-app-ingress     <none>   vod.microk8s.hhii.ampere    10.76.85.90,10.76.85.91,10.76.85.92   80      2h18m

6. Next, we need to run an Nginx web server on the bastion node to host video and web bundle files for VoD PoC demo on MicroK8s.

7. Create the first tarball from the pre-transcoded video files and the subtitle files (with the .vtt file extension) under vod-demo, and the second tarball for nginx-front-app from the HTML, CSS, JavaScript, and other files under nginx-front-demo:

$ tar -zcvf vod-demo.tgz vod-demo
$ tar -zcvf nginx-front-demo.tgz nginx-front-demo

8. Create a sub-directory such as nginx-html, move vod-demo.tgz and nginx-front-demo.tgz into it, and then run the Nginx web server container from within that directory:

% mkdir nginx-html
% mv vod-demo.tgz nginx-front-demo.tgz nginx-html
% cd nginx-html
% docker run -d --rm -p 8080:8080 --name nginx-html --user 1001 -v $PWD:/usr/share/nginx/html docker.io/mrdojojo/nginx-hello-app:1.1-arm64

9. Return to the MicroK8s cluster to deploy the bundle files to the VoD pods:

a. For the nginx-front-app pods, run kubectl exec against nginx-front-app-0 to access the Nginx container in the pod:

% kubectl -n vod-poc exec -it pod/nginx-front-app-0 -- sh
/ # cd /usr/share/nginx/html/
/usr/share/nginx/html # wget http://[Bastion node's IP address]:8080/nginx-front-demo.tgz
/usr/share/nginx/html # tar zxvf nginx-front-demo.tgz
/usr/share/nginx/html # sed -i "s,http://\[vod-demo\]/,http://vod.microk8s.hhii.ampere/,g" *.html
/usr/share/nginx/html # rm -rf nginx-front-demo.tgz

b. Repeat step 9.a for the other two pods, nginx-front-app-1 and nginx-front-app-2

c. For the nginx-vod-app pods, run kubectl exec against nginx-vod-app-0 to access the Nginx container in the pod:

% kubectl -n vod-poc exec -it pod/nginx-vod-app-0 -- sh
/ # cd /opt/static/videos/
/opt/static/videos # wget http://[Bastion node's IP address]:8080/vod-demo.tgz
/opt/static/videos # tar zxvf vod-demo.tgz
/opt/static/videos # mv vod-demo/* .
/opt/static/videos # rm -rf vod-demo

d. Repeat step 9.c for the other two pods, nginx-vod-app-1 and nginx-vod-app-2
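Repeating steps 9.a and 9.c by hand for three replicas of each app is error-prone. As a rough sketch, the download-and-unpack part can be generated as one non-interactive command per pod. The helper name is hypothetical, `BASTION_IP` is a placeholder that must be replaced with the bastion node's address, and the commands are only printed, not executed. The `sed` and `mv` steps from 9.a and 9.c are intentionally left out and still need to be run.

```shell
# print_unpack_cmds APP DEST_DIR TARBALL BASTION
# Prints (does not run) one kubectl exec command for each replica 0..2 that
# downloads TARBALL from the bastion web server and unpacks it in DEST_DIR.
print_unpack_cmds() {
    app=$1 dir=$2 tarball=$3 bastion=$4
    for i in 0 1 2; do
        echo "kubectl -n vod-poc exec pod/${app}-${i} -- sh -c 'cd ${dir} && wget http://${bastion}:8080/${tarball} && tar zxvf ${tarball} && rm -f ${tarball}'"
    done
}

# Placeholder address from the documentation range; substitute your own.
BASTION_IP=${BASTION_IP:-192.0.2.10}
print_unpack_cmds nginx-front-app /usr/share/nginx/html nginx-front-demo.tgz "$BASTION_IP"
print_unpack_cmds nginx-vod-app /opt/static/videos vod-demo.tgz "$BASTION_IP"
```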

10. Publish the A records for both domain names to the DNS server:

10.76.85.90 demo.microk8s.hhii.ampere A 10min
10.76.85.91 demo.microk8s.hhii.ampere A 10min
10.76.85.92 demo.microk8s.hhii.ampere A 10min
10.76.85.90 vod.microk8s.hhii.ampere A 10min
10.76.85.91 vod.microk8s.hhii.ampere A 10min
10.76.85.92 vod.microk8s.hhii.ampere A 10min
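If the bastion node runs BIND, as suggested in the prerequisites, the records from step 10 could be written as zone-file entries. The sketch below prints them in the common `name TTL IN A address` form. It assumes that "10min" denotes a 600-second TTL and that the zone accepts fully qualified names; the function name is illustrative.

```shell
# a_records NAME TTL IP...
# Prints one zone-file style A record per IP address.
a_records() {
    name=$1 ttl=$2
    shift 2
    for ip in "$@"; do
        printf '%s. %s IN A %s\n' "$name" "$ttl" "$ip"
    done
}

a_records demo.microk8s.hhii.ampere 600 10.76.85.90 10.76.85.91 10.76.85.92
a_records vod.microk8s.hhii.ampere 600 10.76.85.90 10.76.85.91 10.76.85.92
```

Publishing three A records per name lets clients reach the ingress controller on any of the three nodes.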

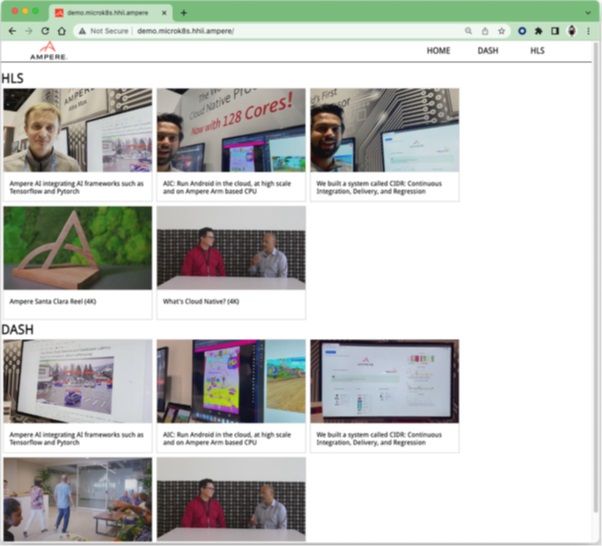

11. Open a browser to access http://demo.microk8s.hhii.ampere for testing VoD PoC demo on MicroK8s.

Figure 2: VoD PoC Demo on MicroK8s in a Browser

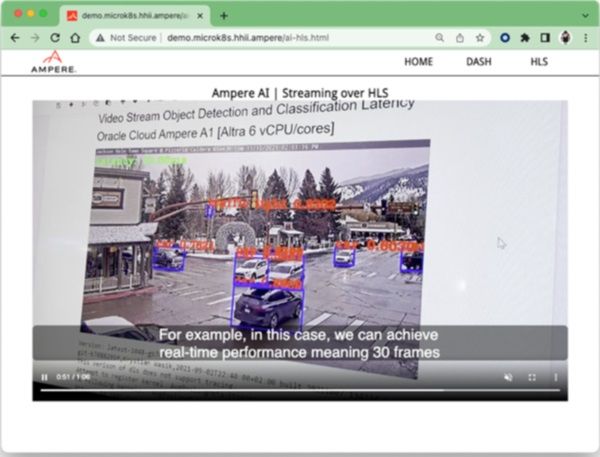

12. Click one of the rectangles. The browser will launch the HTML5 video player for streaming the video.

Figure 3: Video Streaming Playbacks on HTML5 Video Player

13. The VoD PoC demo on MicroK8s is now ready.

Figure 4: VoD Workloads on Canonical MicroK8s 3-Node Cluster

Revision History

| ISSUE | DATE | DESCRIPTION |
|---|---|---|
| 1.00 | November 7, 2022 | Initial release. |

Disclaimer

November 7, 2022

Ampere Computing reserves the right to change or discontinue this product without notice.

While the information contained herein is believed to be accurate, such information is preliminary, and should not be relied upon for accuracy or completeness, and no representations or warranties of accuracy or completeness are made. The information contained in this document is subject to change or withdrawal at any time without notice and is being provided on an “AS IS” basis without warranty or indemnity of any kind, whether express or implied, including without limitation, the implied warranties of non-infringement, merchantability, or fitness for a particular purpose. Any products, services, or programs discussed in this document are sold or licensed under Ampere Computing’s standard terms and conditions, copies of which may be obtained from your local Ampere Computing representative. Nothing in this document shall operate as an express or implied license or indemnity under the intellectual property rights of Ampere Computing or third parties.

Without limiting the generality of the foregoing, any performance data contained in this document was determined in a specific or controlled environment and not submitted to any formal Ampere Computing test. Therefore, the results obtained in other operating environments may vary significantly. Under no circumstances will Ampere Computing be liable for any damages whatsoever arising out of or resulting from any use of the document or the information contained herein.

Ampere Computing
4655 Great America Parkway, Santa Clara, CA 95054
Phone: (669) 770-3700
https://www.amperecomputing.com

Ampere Computing reserves the right to make changes to its products, its datasheets, or related documentation, without notice and warrants its products solely pursuant to its terms and conditions of sale, only to substantially comply with the latest available datasheet.

Ampere, Ampere Computing, the Ampere Computing and ‘A’ logos, and Altra are registered trademarks of Ampere Computing. Arm is a registered trademark of Arm Limited (or its subsidiaries) in the US and/or elsewhere. All other trademarks are the property of their respective holders. Copyright © 2022 Ampere Computing. All rights reserved.