Deploying VOD on SUSE K3s 3-Node Clusters on Ampere® Altra® Family Platforms

Overview

This demo runs open source video-on-demand (VOD) services on K3s, a lightweight Kubernetes implementation, to demonstrate the cloud native characteristics of Altra and Altra Max processors for video services. The demo features:

- K3s – A Cloud Native Computing Foundation (CNCF) sandbox project that delivers a lightweight certified Kubernetes distribution with add-on functions and services.

- Three-node cluster – The fewest nodes required for a High Availability (HA) cluster.

- Longhorn – An official CNCF project that delivers a cloud-native distributed storage platform for Kubernetes that can run anywhere. In this demo it stores the metadata, videos (mp4, mov, or avi), subtitles (for example, in Web Video Text Tracks (WebVTT) format), generated playlists, and segment files for the VOD services.

- Nginx Ingress Controller – A load balancer for Kubernetes that works with an external load balancer; in this demo, the external load balancer is the Domain Name System (DNS). The controller serves as a reverse proxy, distributing application traffic across multiple servers.

- Two pods:

  - Nginx VOD module container as the backend for streaming video over the HTTP Live Streaming (HLS) and Moving Picture Experts Group-Dynamic Adaptive Streaming over HTTP (MPEG-DASH) protocols

  - Nginx WebApp container for serving HTML content as the YouTube-like front end

Prerequisites

- A DNS service such as BIND (named) that supports a Fully Qualified Domain Name (FQDN, such as k3s.hhii.amp) and acts as the external load balancer

- A Rancher K3s cluster with Longhorn block storage service (refer to Deploying SUSE K3s 3-Node Cluster with Rancher on Ampere Altra Platforms).

- Executable files on your laptop or workstation:

  - kubectl version 1.23.x or later (https://kubernetes.io/docs/tasks/tools/)

  - git

  - tar

  - docker
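The tool prerequisites above can be checked before starting. The following is a minimal sketch, not part of the original demo; the function name `missing_tools` is hypothetical. It only confirms that each executable is on the PATH and does not verify the kubectl version.

```shell
# Print the name of every required tool that is not on PATH.
# Returns non-zero if at least one tool is missing.
missing_tools() {
    rc=0
    for tool in "$@"; do
        if ! command -v "$tool" >/dev/null 2>&1; then
            echo "$tool"
            rc=1
        fi
    done
    return "$rc"
}

# The four executables named in the prerequisites list.
if missing=$(missing_tools kubectl git tar docker); then
    echo "all prerequisite tools found"
else
    echo "missing tools:" $missing
fi
```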

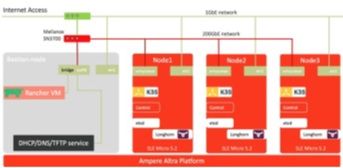

Figure 1: Network Overview of SUSE Rancher K3s Three-Node Cluster

Setup Instructions

Deploy VOD PoC on Rancher K3s

1. Log in to the Rancher portal with your credentials and choose the target K3s cluster (k3s-poc1).

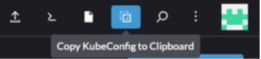

2. Click Copy KubeConfig to Clipboard in the top-right function bar to copy the kubeconfig.

3. Save the content as a file named config in **~/.kube** in your home directory, then run kubectl get nodes to test the connection.

    $ cd ~/.kube
    $ cat << EOF > config
    ### paste the content here and type EOF by the end ###
    EOF
    $ kubectl get nodes
    NAME                 STATUS   ROLES                       AGE   VERSION
    node1.k3s.hhii.amp   Ready    control-plane,etcd,master   12h   v1.23.6+k3s1
    node2.k3s.hhii.amp   Ready    control-plane,etcd,master   12h   v1.23.6+k3s1
    node3.k3s.hhii.amp   Ready    control-plane,etcd,master   12h   v1.23.6+k3s1

4. If you cannot save the file in ~/.kube, save it as **k3s.kubeconfig** in your working directory and export it as KUBECONFIG with the full file path:

    $ cat << EOF > k3s.kubeconfig
    ### paste the content here and type EOF by the end ###
    EOF
    $ export KUBECONFIG=$PWD/k3s.kubeconfig
    $ kubectl get nodes
    NAME                 STATUS   ROLES                       AGE   VERSION
    node1.k3s.hhii.amp   Ready    control-plane,etcd,master   12h   v1.23.6+k3s1
    node2.k3s.hhii.amp   Ready    control-plane,etcd,master   12h   v1.23.6+k3s1
    node3.k3s.hhii.amp   Ready    control-plane,etcd,master   12h   v1.23.6+k3s1
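Because the kubeconfig holds cluster credentials, it can help to save it with owner-only permissions and export it by absolute path so that kubectl finds it from any directory. The following is a minimal sketch, assuming a POSIX shell; `save_kubeconfig` is a hypothetical helper, not part of Rancher or kubectl.

```shell
# Write kubeconfig content from stdin to a file readable only by its owner,
# then print the absolute path so it can be exported as KUBECONFIG.
save_kubeconfig() {
    target=$1
    # Resolve the directory part to an absolute path.
    dir=$(cd "$(dirname "$target")" && pwd) || return 1
    path="$dir/$(basename "$target")"
    # Create the file with a restrictive umask, then enforce mode 600
    # in case the file already existed with wider permissions.
    ( umask 077 && cat > "$path" ) || return 1
    chmod 600 "$path"
    echo "$path"
}
```

For example, after pasting the clipboard content into a file such as `pasted.yaml` (a hypothetical name), running `export KUBECONFIG=$(save_kubeconfig k3s.kubeconfig < pasted.yaml)` sets the variable to the full path of the saved file.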

5. Obtain the source code from these GitHub repos:

- https://github.com/AmpereComputing/nginx-hello-container

- https://github.com/AmpereComputing/nginx-vod-module-container

    $ git clone https://github.com/AmpereComputing/nginx-hello-container
    $ git clone https://github.com/AmpereComputing/nginx-vod-module-container

6. Deploy the Nginx web server container as a StatefulSet with its PersistentVolumeClaim (PVC) template, service, and ingress:

$ kubectl create -f nginx-hello-container/SUSE-Rancher-K3s/nginx-front-app.yaml

7. Deploy the VOD container as a StatefulSet with its PVC template, service, and ingress:

$ kubectl create -f nginx-vod-module-container/SUSE-Rancher-K3s/nginx-vod-app.yaml

8. Get the deployment status:

    $ kubectl get pvc
    NAME                                    STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS   AGE
    nginx-front-app-pvc-nginx-front-app-0   Bound    pvc-698a3c95-3334-4b83-85ef-8b8f22d5a519   10Gi       RWO            longhorn       3m33s
    nginx-front-app-pvc-nginx-front-app-1   Bound    pvc-420f57d8-ab02-4a20-8a0b-5423376d03fa   10Gi       RWO            longhorn       3m53s
    nginx-front-app-pvc-nginx-front-app-2   Bound    pvc-d59a1d82-baca-4dac-a588-8a54e1b95817   10Gi       RWO            longhorn       3m23s
    nginx-vod-app-pvc-nginx-vod-app-0       Bound    pvc-5c8a37d9-60c5-49c1-b7dc-8e77001f51ef   10Gi       RWO            longhorn       2m33s
    nginx-vod-app-pvc-nginx-vod-app-1       Bound    pvc-069c663e-9fea-46f2-a438-8eb224f477e2   10Gi       RWO            longhorn       2m13s
    nginx-vod-app-pvc-nginx-vod-app-2       Bound    pvc-94477b6f-8290-484a-9e21-205f92115a88   10Gi       RWO            longhorn       2m43s
    $ kubectl get statefulset
    NAME              READY   AGE
    nginx-front-app   3/3     6m57s
    nginx-vod-app     3/3     5m10s
    $ kubectl get service
    NAME                  TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)    AGE
    kubernetes            ClusterIP   10.43.0.1       <none>        443/TCP    72d
    nginx-front-app-svc   ClusterIP   10.43.22.1      <none>        8080/TCP   12m
    nginx-vod-app-svc     ClusterIP   10.43.192.145   <none>        8080/TCP   11m
    $ kubectl get ingress
    NAME                      CLASS    HOSTS               ADDRESS                                  PORTS   AGE
    nginx-front-app-ingress   <none>   demo.k3s.hhii.amp   10.76.85.101,10.76.85.102,10.76.85.103   80      13m
    nginx-vod-app-ingress     <none>   vod.k3s.hhii.amp    10.76.85.101,10.76.85.102,10.76.85.103   80      12m
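Instead of polling the status by hand, a script can wait for the rollout to finish. The following is a minimal sketch, assuming the StatefulSet names shown in the output above and the RollingUpdate update strategy, which `kubectl rollout status` requires for StatefulSets; `wait_for_statefulsets` is a hypothetical helper name.

```shell
# Block until each named StatefulSet has finished rolling out.
# Usage: wait_for_statefulsets TIMEOUT NAME [NAME...]
# Returns non-zero as soon as one rollout fails or times out.
wait_for_statefulsets() {
    timeout=$1
    shift
    for name in "$@"; do
        kubectl rollout status "statefulset/$name" --timeout="$timeout" || return 1
    done
}
```

For example, `wait_for_statefulsets 300s nginx-front-app nginx-vod-app` returns once both StatefulSets report all replicas ready, or fails after five minutes per StatefulSet.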

9. Run an Nginx web server on a bastion node to host video and web bundle files for the VOD PoC demo on K3s.

10. Prepare two tarballs: one with the pre-transcoded video files and the subtitle files (filename extension vtt) under vod-demo, and a second one for nginx-front-app with the HTML, CSS, JavaScript, and other web files under nginx-front-demo:

    $ tar -zcvf vod-demo.tgz vod-demo
    $ tar -zcvf nginx-front-demo.tgz nginx-front-demo
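The later unpacking steps move files out of a vod-demo or nginx-front-demo directory, so each tarball needs to extract into exactly that top-level directory. The following is a minimal sketch of a pre-upload check; `check_tarball` is a hypothetical helper, and it relies only on `tar -tzf` and awk.

```shell
# Succeed only if the gzip-compressed tarball is non-empty and every entry
# sits under the expected top-level directory.
# Usage: check_tarball ARCHIVE TOPDIR
check_tarball() {
    archive=$1
    topdir=$2
    tar -tzf "$archive" | awk -v top="$topdir" '
        { sub(/^\.\//, "") }   # tolerate entries stored as ./name
        $0 != top && $0 != top "/" && index($0, top "/") != 1 { bad = 1 }
        END { exit (NR == 0 || bad) }'
}
```

For example, `check_tarball vod-demo.tgz vod-demo && check_tarball nginx-front-demo.tgz nginx-front-demo` passes only when both archives have the expected layout.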

11. Create a subdirectory, such as nginx-html, move vod-demo.tgz and nginx-front-demo.tgz into it, and run the Nginx web server container with that directory mounted:

    $ mkdir nginx-html
    $ mv vod-demo.tgz nginx-front-demo.tgz nginx-html
    $ cd nginx-html
    $ podman run -d --rm -p 8080:8080 --name nginx-html --user 1001 -v $PWD:/usr/share/nginx/html docker.io/mrdojojo/nginx-hello-app:1.1-arm64

12. Go back to the Rancher portal to deploy those bundle files to the VOD pods.

a. Access the Rancher portal, click the K3s cluster, click Workload > Pods, and change to the corresponding namespace.

b. Click the three-dot icon at the right of the nginx-front-app-[0-2] pod and choose Execute Shell. The portal displays a console for accessing the container.

c. When the console is ready, run these commands:

    $ cd /usr/share/nginx/html/
    $ wget http://[Bastion node's IP address]:8080/nginx-front-demo.tgz
    $ tar zxvf nginx-front-demo.tgz
    $ mv nginx-front-demo/* .
    $ rm -rf nginx-front-demo

d. Update the URL in each video player HTML file:

    $ sed -i "s,http://\[vod-demo\]/,http://vod.k3s.hhii.amp/,g" *.html

e. Click the three-dot icon at the right of the nginx-vod-app-[0-2] pod and choose Execute Shell. The portal displays a console for accessing the container.

f. When the VOD container console is ready, run these commands:

    $ cd /opt/static/videos/
    $ wget http://[Bastion node's IP address]:8080/vod-demo.tgz
    $ tar zxvf vod-demo.tgz
    $ mv vod-demo/* .
    $ rm -rf vod-demo

g. The VOD PoC demo is ready for testing.
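Each replica has its own PVC, so the download and unpack commands have to run once per pod. As an alternative to the portal console, the same commands can be scripted with `kubectl exec`. The following is a minimal sketch, assuming the pod names follow the StatefulSet pattern NAME-0 to NAME-2, that the current kubectl context points at the right namespace, and that the containers provide sh, wget, and tar as used in the console steps; `deploy_bundle` is a hypothetical helper.

```shell
# Fetch and unpack BUNDLE.tgz inside every replica of a StatefulSet.
# Usage: deploy_bundle STATEFULSET REPLICAS DEST_DIR BASE_URL BUNDLE
deploy_bundle() {
    sts=$1
    replicas=$2
    dest=$3
    base_url=$4
    bundle=$5
    i=0
    while [ "$i" -lt "$replicas" ]; do
        # Same command sequence as the console steps, run in pod STS-i.
        kubectl exec "$sts-$i" -- sh -c \
            "cd '$dest' && wget '$base_url/$bundle.tgz' && tar zxf '$bundle.tgz' && mv '$bundle'/* . && rm -rf '$bundle'" \
            || return 1
        i=$((i + 1))
    done
}
```

For example, `deploy_bundle nginx-vod-app 3 /opt/static/videos http://<bastion-ip>:8080 vod-demo` covers the VOD pods, where `<bastion-ip>` is a placeholder for the bastion node's address. The sed URL update for the front-end pods is not included in this sketch and still has to be run in each nginx-front-app pod.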

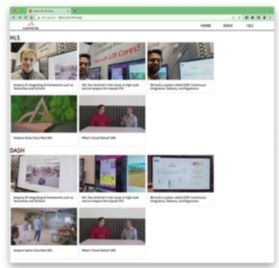

13. To access the demo web app, open the ingress page (K3s cluster > Service Discovery > Ingress) and click the URL for nginx-front-app-ingress in the Target column. The demo opens in a new browser tab.

14. Open a browser to access the Nginx WebApp to test the VOD PoC demo.

15. Click one of the rectangles. The browser opens the HTML5 video player for streaming video.

16. The VOD PoC demo on the SUSE K3s three-node cluster is ready!
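Besides the browser test, each ingress address can be probed from the command line, which also shows whether all three nodes answer for a given host name independently of DNS. The following is a minimal sketch, assuming curl is installed on the workstation and using the host names and addresses from the `kubectl get ingress` output earlier; `probe_ingress` is a hypothetical helper, and the expected status code depends on the content deployed.

```shell
# Request "/" from every ingress address with the given Host header and
# print one "ADDRESS STATUS" line per address (000 when the request fails).
# Usage: probe_ingress HOSTNAME ADDRESS [ADDRESS...]
probe_ingress() {
    host=$1
    shift
    for addr in "$@"; do
        code=$(curl -s -o /dev/null -m 5 -w '%{http_code}' \
            -H "Host: $host" "http://$addr/") || code=000
        echo "$addr $code"
    done
}
```

For example, `probe_ingress demo.k3s.hhii.amp 10.76.85.101 10.76.85.102 10.76.85.103` prints the HTTP status returned by each node for the front-end host.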

Revision History

| ISSUE | DATE | DESCRIPTION |
|---|---|---|
| 0.50 | November 7, 2022 | Initial release. |

Disclaimer

November 7, 2022 Ampere Computing reserves the right to change or discontinue this product without notice. While the information contained herein is believed to be accurate, such information is preliminary, and should not be relied upon for accuracy or completeness, and no representations or warranties of accuracy or completeness are made. The information contained in this document is subject to change or withdrawal at any time without notice and is being provided on an “AS IS” basis without warranty or indemnity of any kind, whether express or implied, including without limitation, the implied warranties of non-infringement, merchantability, or fitness for a particular purpose. Any products, services, or programs discussed in this document are sold or licensed under Ampere Computing’s standard terms and conditions, copies of which may be obtained from your local Ampere Computing representative. Nothing in this document shall operate as an express or implied license or indemnity under the intellectual property rights of Ampere Computing or third parties. Without limiting the generality of the foregoing, any performance data contained in this document was determined in a specific or controlled environment and not submitted to any formal Ampere Computing test. Therefore, the results obtained in other operating environments may vary significantly. Under no circumstances will Ampere Computing be liable for any damages whatsoever arising out of or resulting from any use of the document or the information contained herein.

Ampere Computing reserves the right to make changes to its products, its datasheets, or related documentation, without notice and warrants its products solely pursuant to its terms and conditions of sale, only to substantially comply with the latest available datasheet. Ampere, Ampere Computing, the Ampere Computing and ‘A’ logos, and Altra are registered trademarks of Ampere Computing. Arm is a registered trademark of Arm Limited (or its subsidiaries) in the US and/or elsewhere. All other trademarks are the property of their respective holders. Copyright © 2022 Ampere Computing. All rights reserved.