Building and Testing AArch64 Containers with Ampere® Altra® and GitHub Actions

In our last article, we walked you through the simple steps to use an Ampere Altra instance on Oracle Cloud Infrastructure to add AArch64 to your build matrix for GitHub projects. In this article, we will look at building and distributing an AArch64 container with your program pre-installed.

Building a container

The first thing we need is a Dockerfile. Since the whole point of creating a container is to have a fast, minimal environment for our small application, I chose Alpine Linux as the base image. It is a tiny base image, only a few megabytes, and is popular in container development. Our initial Dockerfile is very minimal. It starts from the Alpine image and copies the “fizzbuzz” executable, built in our existing build environment from part 1, into the container. It then sets that executable as the entry point of the container (that is, the program that runs automatically when the container starts).

```dockerfile
FROM alpine:latest

WORKDIR /root/

COPY ./fizzbuzz ./

ENTRYPOINT ["./fizzbuzz"]
```

For this tutorial, I used Podman, but the commands work the same way with Docker. Those of you who have worked with containers for a while can probably see where this is going...

```
[dneary@fedora fizzbuzz-c]$ podman build -f ./Dockerfile -t fizzbuzz:1
STEP 1/4: FROM alpine:latest
STEP 2/4: WORKDIR /root/
--> Using cache a6bfe2eb0767b7966a2e25e3f1fdd638b5a74aaf8abf2abd12f54a8f20fdf9be
--> a6bfe2eb076
STEP 3/4: COPY ./fizzbuzz ./
--> 772088bc011
STEP 4/4: ENTRYPOINT ["./fizzbuzz"]
COMMIT fizzbuzz:1
--> 4afe789ccb9
Successfully tagged localhost/fizzbuzz:1
4afe789ccb91e3ce11907a81f0b0ebbe559b65b10d9e50ff86da8a610670434d
```

Looks like that worked! And when we run our container...

```
[dneary@fedora fizzbuzz-c]$ podman run fizzbuzz:1
{"msg":"exec container process (missing dynamic library?) `/root//./fizzbuzz`: No such file or directory","level":"error","time":"2022-07-11T23:36:34.000019606Z"}
```

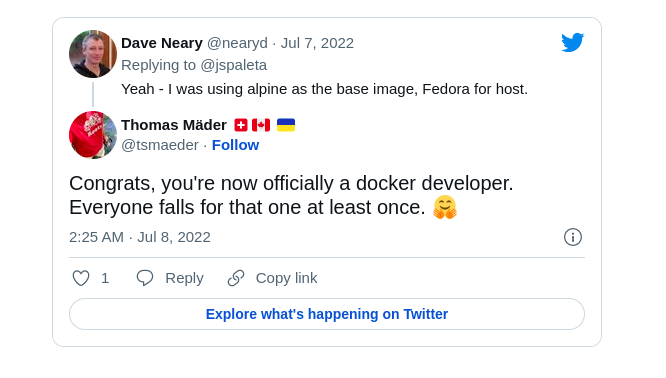

Oops. What happened? It turns out that making this mistake is a rite of passage in container development.

The issue is that a binary built on a Fedora host is dynamically linked against glibc. Alpine Linux, by contrast, is based on BusyBox and uses musl, a small libc implementation designed for everything from embedded systems to desktops and servers. Our binary asks the kernel to start it with glibc's dynamic loader, and that loader does not exist in the Alpine image. That is why the error says “No such file or directory” even though the fizzbuzz file itself is present.

We have a few options to rectify this:

1. Statically link our binary on the host, and copy in a self-contained binary that embeds a static version of glibc.
2. Add a compatibility layer to Alpine that enables it to run binaries dynamically linked against glibc.
3. Build our application in an Alpine Linux container. This third option is the best.

Our new Dockerfile takes the third approach. It installs the “build-base” package on Alpine, then copies our C file and Makefile into the container and compiles fizzbuzz there.

```dockerfile
FROM alpine:latest

WORKDIR /build/

# Install C compiler and Make
RUN apk --no-cache add build-base

COPY fizzbuzz.c Makefile ./
RUN make clean && make all

ENTRYPOINT ["./fizzbuzz"]
```

It’s still simple, and it works! When we build a new container and run it with Podman, we get the familiar FizzBuzz output we expect. However, when we run “podman images fizzbuzz,” we see a problem:

| REPOSITORY | TAG | IMAGE ID | CREATED | SIZE |
|---|---|---|---|---|
| localhost/fizzbuzz | 2 | a5725263caa8 | 3 minutes ago | 192 MB |
| localhost/fizzbuzz | 1 | 4afe789ccb91 | 19 minutes ago | 5.84 MB |

Optimizing for size

Our small, lightweight container image just grew from under 6MB to 192MB! It’s not that surprising, considering that we just installed GNU Make and the entire C and C++ development stack, but still! Is there a way to copy our newly built executable back into a small image?

Indeed there is. We use a multi-stage build, where a single Dockerfile contains several FROM stages and a later stage can copy files out of an earlier one. We name our build stage “builder” (with “AS builder”), and then copy our executable from it into a pristine Alpine image in the final stage. The Dockerfile now looks marginally more complicated.

```dockerfile
FROM alpine:latest AS builder
WORKDIR /build/
# Install C compiler and Make
RUN apk --no-cache add build-base
COPY fizzbuzz.c Makefile ./
RUN make clean && make all

FROM alpine:latest AS app
WORKDIR /root/
# Add our executable from the builder stage
COPY --from=builder /build/fizzbuzz ./
ENTRYPOINT ["./fizzbuzz"]
```

But our problem of image size is nicely resolved! After moving to a multi-stage build, our container image is back to a manageable 5.84MB.

We are now ready to start building on our custom runner in a GitHub Actions workflow. We will use the Docker stack that is preinstalled on the GitHub-hosted “ubuntu-latest” runner, and Podman on our self-hosted AlmaLinux instance on OCI.

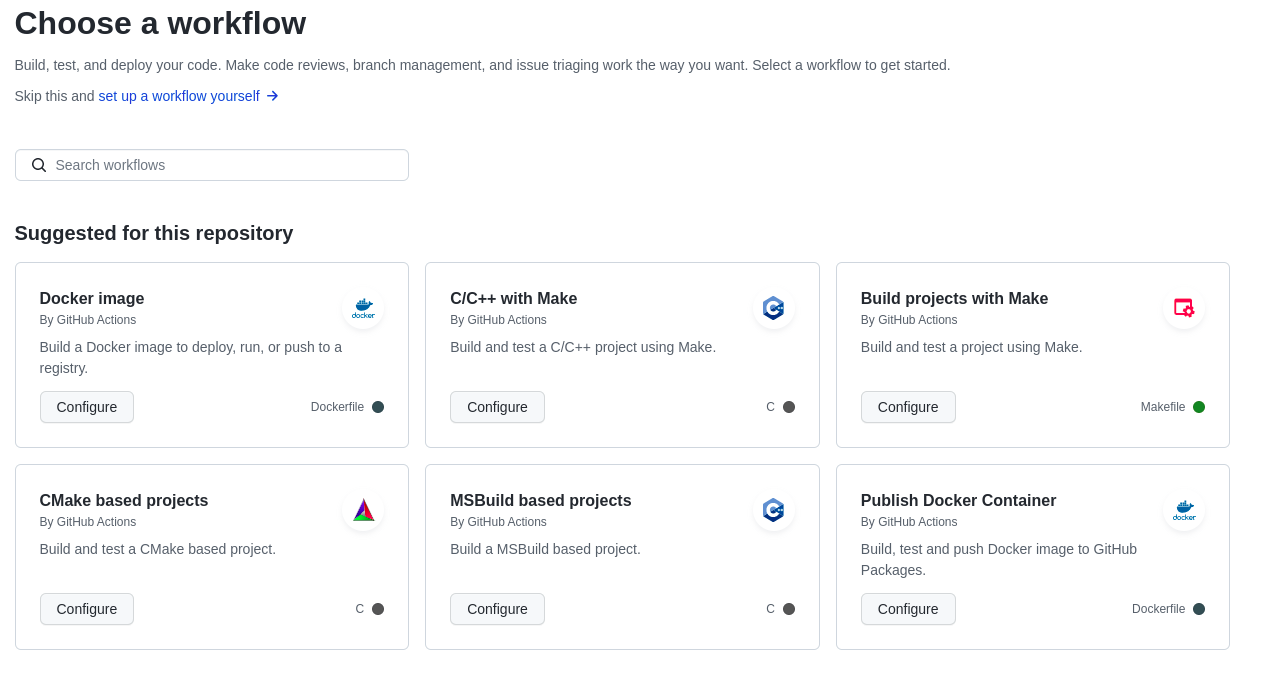

Once we have added a Dockerfile to the repository, GitHub Actions helpfully suggests a “build a Docker container” action when we choose “Add a new workflow”.

Our own Docker image workflow looks like this. There is quite a lot of duplication in the new workflow file, because we are using a different toolchain on each runner. Unfortunately, there is no easy way to create a build matrix which indicates which container runtime and builder to use for different hosts, while sharing the rest of the actions.

```yaml
name: Docker Image CI

on:
  push:
    branches: [ "master" ]
  pull_request:
    branches: [ "master" ]

jobs:

  # x86-64 build on a GitHub-hosted runner, using the preinstalled Docker
  x86-fizzbuzz-container:
    runs-on: ubuntu-latest
    steps:
    - uses: actions/checkout@v3
    - name: Build the Docker image
      run: docker build . --file Dockerfile --tag fizzbuzz:latest --tag fizzbuzz:${{ github.sha }}
    - name: Run the Docker image
      run: docker run fizzbuzz

  # AArch64 build on the self-hosted Ampere Altra runner, using Buildah and Podman
  aarch64-fizzbuzz-container:
    runs-on: [self-hosted, linux, ARM64]
    steps:
    - uses: actions/checkout@v3
    - name: Build the Docker image with Buildah
      uses: redhat-actions/buildah-build@v2
      with:
        image: fizzbuzz
        tags: latest ${{ github.sha }}
        containerfiles: |
          ./Dockerfile
    - name: Run the Docker image
      run: podman run fizzbuzz
```

After installing the Podman and Buildah tools on our runner, we finally have our container building and running natively on two architectures and two Linux distributions: x86-64 on Ubuntu and AArch64 on AlmaLinux. This happens after every push and pull request to the master branch! If you have made it this far, congratulations! There are a few next steps we could take to improve this further, such as:

- Mounting the project source directory inside your container before building

- Running a set of smoke tests to verify that the last commit has not broken anything

- Automatically uploading containers to a container registry after building them

- Potentially building a multi-arch container

However, building out a CI pipeline further is beyond the scope of this article. Building out CI and CD on top of Ampere processors will be the topic of a future article series.

Many thanks to the Podman community, the very comprehensive GitHub Actions documentation, and the fine container development folks from Twitter for your assistance with some beginner questions.

We’re unlocking the creativity of the development community and matchmaking it with our world-class processors. We invite you to learn more about our developer efforts, find best practices, insights, and tools at:

https://amperecomputing.com/developers

To keep up to date with news and events, and to hear about our latest content as it is published, sign up to our developer newsletter.