Accelerating the Cloud

Part 3: Transitioning to Cloud Native Pre-Flight Checklist

As we showed in Part 2 of this series, redeploying applications to a cloud native compute platform is generally a relatively straightforward process. For example, Momento described their redeployment experience as “meaningfully less work than we anticipated. Pelikan worked instantly on the T2A (Google’s Ampere-based cloud native platform) and we used our existing tuning processes to optimize it.”

Of course, applications can be complex, with many components and dependencies. The greater the complexity, the more issues that can arise. From this perspective, Momento’s redeployment experience of Pelikan Cache to Ampere cloud native processors offers many insights. The company had a complex architecture in place, and they wanted to automate everything they could. The redeployment process gave them an opportunity to achieve this.

Applications Suitable for Cloud Native Processing

The first consideration is to determine how your application can benefit from redeployment on a cloud native compute platform. Most cloud applications are well-suited for cloud native processing. To understand which applications can benefit most from a cloud native approach, we take a closer look at the Ampere cloud native processor architecture.

To achieve higher processing efficiency and lower power dissipation, Ampere took a different approach to designing our cores: we focused on the actual compute needs of cloud native applications in terms of performance, power, and functionality, and avoided integrating legacy processor functionality that had been added for non-cloud use cases. For example, the Scalable Vector Extension (SVE) is useful when an application has to process lots of 3D graphics or perform specific types of HPC processing, but it comes with a power and core density trade-off. For applications that require SVE, such as Android gaming in the cloud, a cloud service provider might choose to pair Ampere processors with GPUs to accelerate 3D performance.

For cloud native workloads, the reduced power consumption and increased core density of Ampere cores mean that applications run with higher performance while consuming less power and dissipating less heat. In short, a cloud native compute platform will likely provide superior performance, greater power efficiency, and higher compute density at a lower operating cost for most applications.

Where Ampere excels is with microservice-based applications that have numerous independent components. Such applications can benefit significantly from the availability of more cores, and Ampere offers high core density of 128 cores on a single IC and up to 256 cores in a 1U chassis with two sockets.

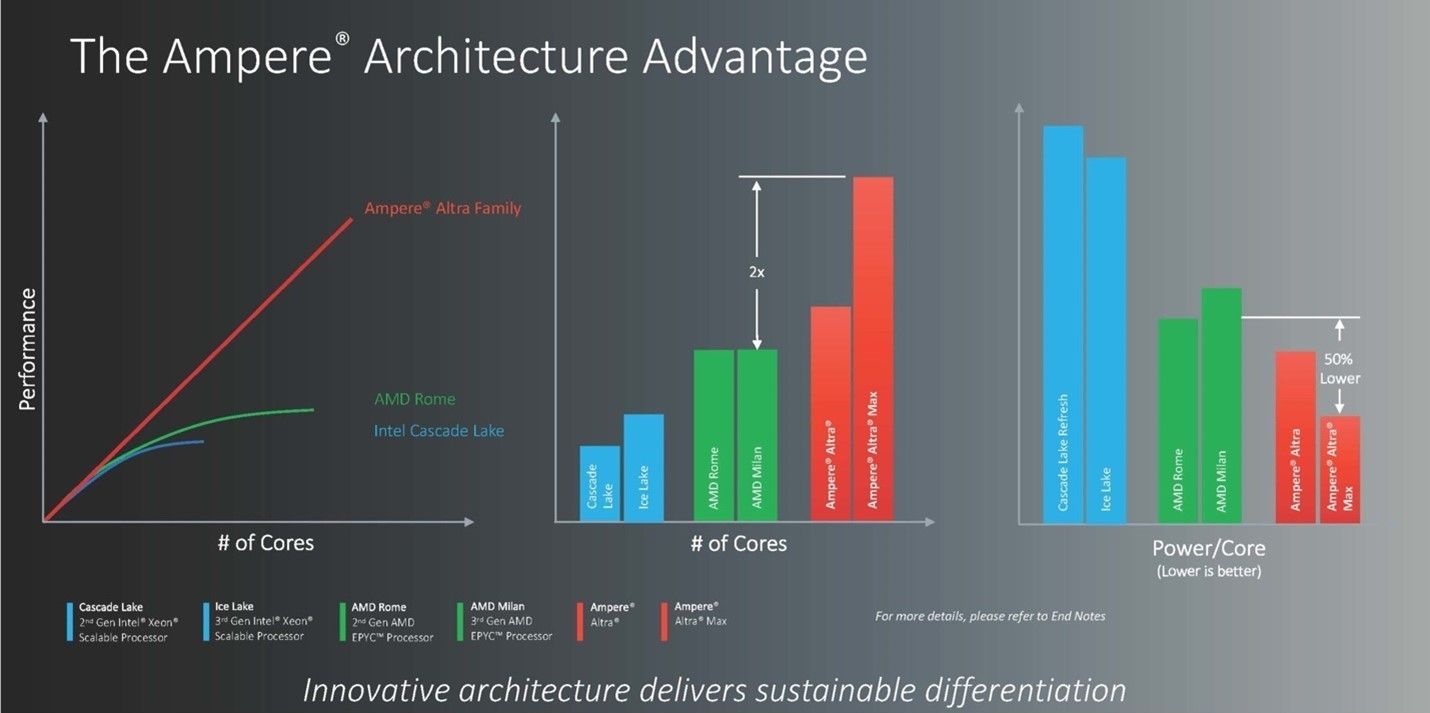

In fact, the benefits of Ampere are most visible when you scale horizontally (i.e., load balance across many instances). Because Ampere scales linearly with load, each core you add provides a direct benefit. Compare this to x86 architectures, where the benefit of each new core added quickly diminishes (see Figure 1).

Figure 1: Because Ampere scales linearly with load, each core added provides a direct benefit. Compare this to x86 architectures where the benefit of each added core quickly diminishes.

Proprietary Dependencies

Part of the challenge in redeploying applications is identifying proprietary dependencies. Any point in the software supply chain that uses binary files or dedicated x86-based packages will require attention. Many of these dependencies can be located by searching for code with “x86” in the filename. The substitution process is typically easy to complete: replace the x86 package with the appropriate Arm ISA-based version or, if you have access to the source code, recompile the available package for the Ampere cloud native platform.
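The filename search described above can be sketched in a few lines of Python. This is a minimal illustration, not a complete audit tool: the function name `find_x86_named_files` is hypothetical, and matching `amd64`, `i386`, and `i686` in addition to `x86` is our assumption about other common naming conventions.

```python
import os
import re

# Substrings that commonly signal an x86-specific artifact. Only "x86" comes
# from the text above; the other markers are illustrative assumptions.
X86_PATTERN = re.compile(r"x86|amd64|i[36]86", re.IGNORECASE)


def find_x86_named_files(root):
    """Return sorted paths (relative to root) whose file name mentions x86."""
    matches = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            if X86_PATTERN.search(name):
                full_path = os.path.join(dirpath, name)
                matches.append(os.path.relpath(full_path, root))
    return sorted(matches)
```

Calling `find_x86_named_files(".")` from the top of a source tree or artifact directory gives a first list of candidates to review. A filename match only flags a file for attention; binaries without an architecture marker in their name still need to be checked by other means.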

Some dependencies raise performance concerns but not functional concerns. Consider a framework for machine learning that uses code optimized for an x86 platform. The framework will still run on a cloud native platform, just not as efficiently as it would on an x86-based platform. The fix is simple: identify an equivalent version of the framework optimized for the Arm ISA, such as those included in Ampere AI.

Finally, there are ecosystem dependencies. Some commercial software your application depends upon, such as the Oracle database, may not be available as an Arm ISA-based version. If this is the case, the application may not be an appropriate candidate for redeployment until such versions are available. Workarounds for dependencies like this, such as replacing them with a cloud native-friendly alternative, might be possible, but could require significant changes to your application.

Some dependencies are outside of application code, such as scripts (e.g., playbooks in Ansible, recipes in Chef, and so on). If your scripts assume a particular package name or architecture, you may need to change them when deploying to a cloud native compute platform. Most changes like this are straightforward, and a detailed review of scripts will reveal most such issues. Take care in adjusting for naming assumptions the development team may have made over the years.
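As one illustration of removing a hard-coded architecture assumption, a deployment helper can derive the package name from the machine it runs on instead of embedding an x86 suffix. The sketch below is hypothetical: the `package_filename` helper, the `ARCH_LABELS` table, and the Debian-style `name_version_arch.deb` naming are assumptions chosen for the example, so adapt them to your own packaging conventions.

```python
import platform

# Map machine names reported by the OS to the architecture labels used in
# package names. The labels follow Debian-style naming, which is an assumption
# for this example; other distributions use different labels.
ARCH_LABELS = {
    "x86_64": "amd64",
    "amd64": "amd64",
    "aarch64": "arm64",
    "arm64": "arm64",
}


def package_filename(name, version, machine=None):
    """Build a package file name for the given (or current) architecture."""
    machine = (machine or platform.machine()).lower()
    if machine not in ARCH_LABELS:
        # Fail loudly rather than silently falling back to an x86 package.
        raise ValueError(f"unsupported architecture: {machine}")
    return f"{name}_{version}_{ARCH_LABELS[machine]}.deb"
```

With this approach, `package_filename("mytool", "1.2.0")` resolves to the Arm package on an Ampere instance and to the x86 package elsewhere, so the same script works on both platforms. The same idea applies inside Ansible or Chef, which expose the target architecture through their own facts and attributes.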

The reality is that these issues are generally easy to deal with. You just need to be thorough in identifying and dealing with them. However, before evaluating the cost to address such dependencies, it makes sense to consider the concept of technical debt.

Technical Debt

In the Forbes article, Technical Debt: A Hard-to-Measure Obstacle to Digital Transformation, technical debt is defined as, “the accumulation of relatively quick fixes to systems, or heavy-but-misguided investments, which may be money sinks in the long run.” Quick fixes keep systems going, but eventually the technical debt accrued becomes too high to ignore. Over time, technical debt increases the cost of change in a software system, in the same way that limescale build-up in a coffee machine will eventually degrade its performance.

For example, when Momento redeployed Pelikan Cache to the Ampere cloud native processor, they had logging and monitoring code in place that relied on open-source code that was 15 years old. The code worked, so it was never updated. However, as the tools changed over time, the code needed to be recompiled. There was a certain amount of work required to maintain backwards compatibility, creating dependencies on the old code. Over the years, all these dependencies add up. And at some point, when maintaining these dependencies becomes too complex and too costly, you’ll have to transition to new code. The technical debt gets called in, so to speak.

When redeploying applications to a cloud native compute platform, it's important to understand your current technical debt and how it drives your decisions. Years of maintaining and accommodating legacy code accumulate technical debt that makes redeployment more complex. However, this isn’t a cost of redeployment, per se. Even if you decide not to redeploy to another platform, someday you’re going to have to make up for all these quick fixes and other decisions to delay updating code. You just haven’t had to yet.

How real is technical debt? According to a study by McKinsey (see Forbes article), 30% of CIOs in the study estimated that more than 20% of their technical budget for new products was actually diverted to resolving issues related to technical debt.

Redeployment is a great opportunity to take care of some of the technical debt your applications have acquired over the years. Imagine recovering a portion of the “20%” your company diverts to resolving technical debt. While this can add time to the redeployment process, taking care of technical debt has the longer-term benefit of reducing the complexity of managing and maintaining code. For example, rather than carry over dependencies, you can “reset” many of them by transitioning code to your current development environment. It’s an investment that can pay immediate dividends by simplifying your development cycle.

Anton Akhtyamov, Product Manager at Plesk, describes his experience with redeployment. “We had some limitations right after the porting. Plesk is a big platform where a lot of additional modules/extensions can be installed. Some were not supported by Arm, such as Dr. Web and Kaspersky Antivirus. Certain extensions were not available either. However, the majority of our extensions were already supported using packages rebuilt for Arm by vendors. We also have our own backend code (mainly C++), but as we already previously adapted it from x86 to support x86-64, we just rebuilt our packages without any critical issues.”

For two more examples of real-world redeployment to a cloud native platform, see Porting Takua to Arm and OpenMandriva on Ampere Altra.

In Part 4 of this series, we’ll dive into what kind of results you can expect when redeploying applications to a cloud native compute platform.