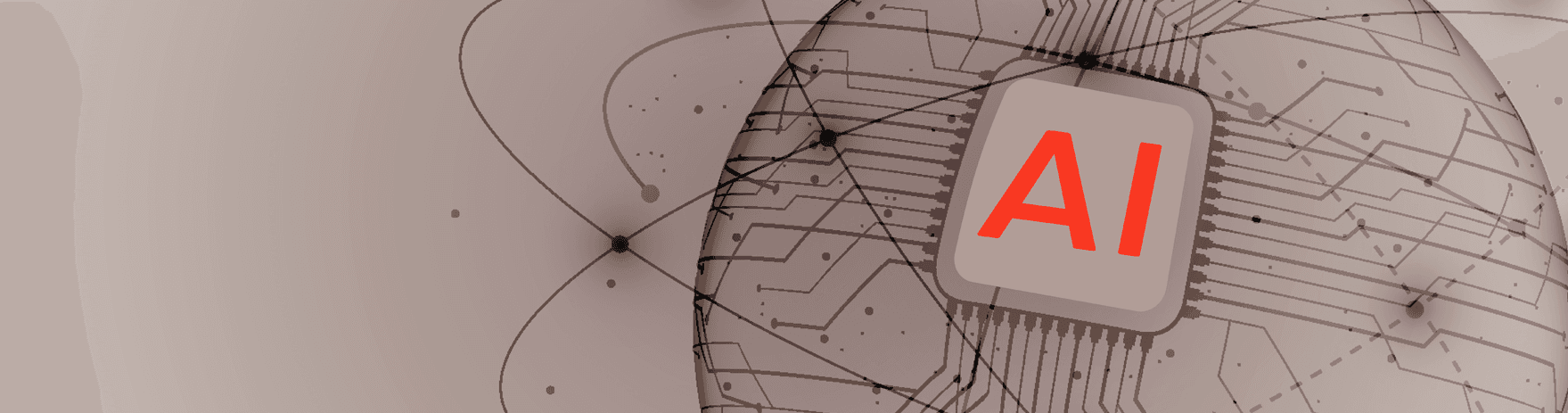

4 Steps to Lowering the Cost of AI Deployment

AI adoption is accelerating, but the economics aren’t keeping up. Service providers face razor-thin margins, and small to medium-sized businesses are being suffocated by the cost of services and hardware. Nearly three-quarters (71%) of businesses are failing to monetize AI effectively, even though almost 90% consider it mission critical.

The problem? The computational and energy demands of AI are driving up infrastructure costs at an unsustainable rate. As a result, the focus is shifting from building the biggest models to running them efficiently. This guide offers four strategies to optimize AI infrastructure costs without sacrificing performance:

1. Define service-level requirements.

2. Use smaller models where possible.

3. Deploy models strategically across CPUs and accelerators.

4. Maximize efficiency through CPU virtualization and containerization.

The Scale Problem

Large language models (LLMs) with hundreds of billions or even trillions of parameters represent AI’s frontier. But their immense size makes them difficult and costly to run. A single LLM can exceed the memory capacity of even advanced GPUs, requiring distribution across clusters—a process called model parallelism. While effective, it drives up costs through networking overhead, synchronization, and energy use.

The solution is not just more compute, but smarter deployment.

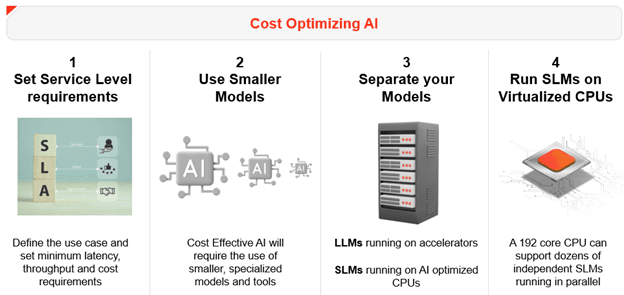

Step 1: Define Your Needs

Before considering hardware, clearly define your service-level requirements. For many common gen AI use cases, the service level will be based on required Tokens Per Second (TPS), but there can be others as well. These metrics are the foundation for all your infrastructure decisions. Here's what you need to consider:

- Real-time vs. Batch: Does your application require immediate, real-time responses, such as a live chatbot or a fraud detection system? Or can you afford to process data in batches, perhaps once an hour or overnight, like with document analysis or image processing? The difference in hardware requirements and costs between these two scenarios can be massive.

- Latency Tolerance: What is an acceptable delay for your users or downstream systems? Is it a few milliseconds, a few seconds, or even several hours? Human interaction for example does not require sub millisecond latencies. Don't over-provision for near-instant responses if your use case doesn't demand it.

- Peak vs. Average Load: Avoid provisioning for your absolute peak usage if those spikes are infrequent and short-lived. Instead, understand your average load and plan for scalable infrastructure that can handle temporary bursts without requiring constant, expensive over-provisioning.

Example: Increasing a chatbot from 5 TPS (human reading speed) to 25 TPS can drive costs up 30x, with little user benefit. Right-sizing requirements avoids unnecessary spending.

Step 2: Use Smaller Models for Bigger Impact

The “bigger is better” mindset is fading (see previous blog: LLMs: Bigger is Not Always Better). Thanks to quantization, distillation, and efficient architectures, smaller models can deliver strong performance at a fraction of the cost.

Using the smallest model that still meets your requirements allows deployment on more affordable hardware, making AI accessible without supercomputers or massive GPU clusters. The goal is to find the balance between performance and cost, and often, the most efficient solution can come in smaller packages.

Step 3: Deploy Compute Strategically for Agentic AI

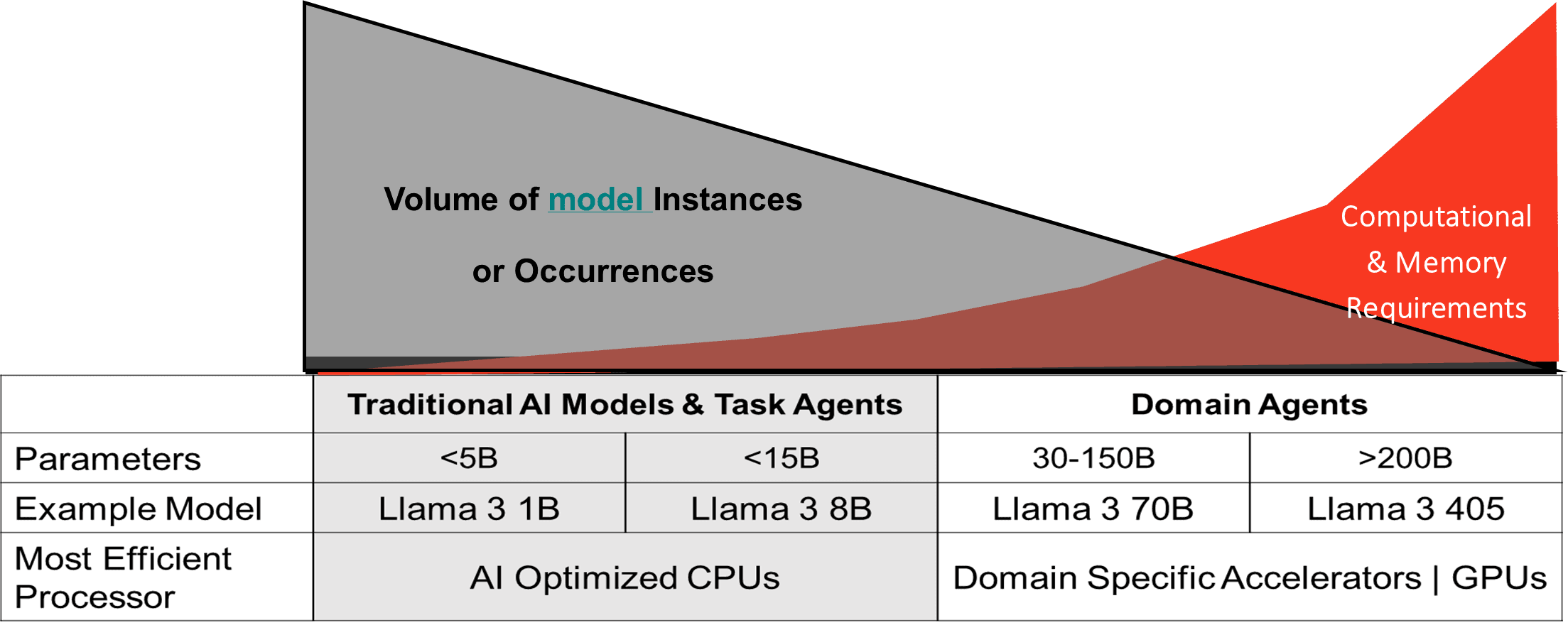

AI is evolving into systems of cooperating agents—domain models supported by specialized task models. This hierarchical approach allows for greater efficiency and includes:

- Domain Agents (LLMs), which handle broad reasoning and planning.

- Task Models (SLMs), which are optimized for narrow jobs like classification, retrieval, or code generation.

This split supports a strategic hardware mix, rather than making an “either/or” choice between CPUs and specialized AI accelerators:

- Run smaller models on CPUs via virtualization and containers for scale and cost savings.

- Reserve accelerators for large models requiring the lowest latency and highest throughput.

This ensures expensive accelerators are used efficiently with LLM workloads rather than sitting idle and driving up costs. For a list of Ampere®’s domain specific accelerator partners, please visit the AI Platform Alliance.

The following chart gives a rough paradigm for selecting the most appropriate and more importantly – cost optimized – hardware selected by the size of the model parameter count.

Step 4: Leverage CPU Virtualization and Containerization to Maximize Efficiency and Your AI Budget

For many inference workloads, CPUs are emerging as highly effective, especially when running smaller models in parallel.

Here’s why:

- Virtualization/Containerization*: CPUs excel at virtualization and containerization, enabling multiple secure workloads on one physical chip. This significantly improves resource utilization, security and efficiency, which greatly reduces the cost of running many models simultaneously.

- Core Count*: High-core CPUs, like AmpereOne® M with 192 cores, allow massive concurrency without noisy-neighbor effects that can reduce performance.

- Mature Open Ecosystem: Decades of open-source hypervisor and virtualization development make CPUs a robust and cost-effective choice.

Once considered impractical, running genAI inference on virtualized CPUs is now viable thanks to smaller, more efficient models and higher core counts. This means you can now effectively run these workloads on virtualized CPU cores, unlocking significant cost savings and greater flexibility.

Building a Sustainable and Cost-Effective AI Future

Optimizing your AI infrastructure is no longer an afterthought—it's a critical component of a sustainable and scalable AI strategy which unlocks business value for your enterprise, service or use case. By 1. defining precise service levels, 2. using smaller, efficient models, 3. deploying with a hierarchical agentic approach, and 4. leveraging CPU virtualization, you can reduce costs, increase flexibility, and build a future-proof AI strategy.

To learn more about running AI inference on Ampere CPUs, visit our AI solutions website.