Sustainable Computing, GPU-Free AI Inference and Cloud Native Service Evolution: A CloudFest 2024 Wrap-up

CloudFest ’24 delivered an even bigger, more engaging live event experience for its 20th anniversary, with more than 8,000 attendees. This was Ampere’s third year at CloudFest, the premier cloud industry event in the European region and beyond, and from our perspective themes both old and new dominated the event. The customers and partners we visited are all shaped by one or more of the following forces at play in the cloud and the region at large:

- The global race to net zero adds pressure to building a more sustainable computing infrastructure

- AI build-out in both service and compute infrastructure is stretching every budget (money, power, space, etc.)

- Cloud service modernization (what we call cloud native evolution) is more important than ever to deliver competitive services on a worldwide scale and stage while maintaining security and data sovereignty

Building a sustainable cloud future

Three years ago, customers were curious about the possibility of saving energy in computing if it helped their bottom line while possibly netting ESG gains. There was some natural skepticism about whether an Ampere compute installation could deliver the efficiency we promised. This year, with over 25 regional customers up and running, the verdict is in: The savings in energy and money are real and, in many cases, produce better-than-expected returns. Now the objective is to scale: More workloads, more racks, more locations, all while consuming less.

In this year’s keynote, our Chief Product Officer, Jeff Wittich, addressed this trend and doubled down on our promise to deliver more sustainable compute in the age of AI. The Ampere value proposition is expanding with the launch of our new AmpereOne processors, which continue our more-for-less promise. Jeff highlighted the efficiency advantages our customers will continue to see with this new line of products, as measured in performance per rack and specifically with AI inference workloads.

The service expansion theme was underscored as Scaleway joined us on stage for the second year in a row, this year announcing the beta availability of “Kapsule”, a managed service for Kubernetes running on Ampere servers. Adrienne Jan, Scaleway's Chief Product Officer, heralded the deployment of Ampere servers with Kubernetes as marking a "new era in the European technology landscape." She emphasized the eco-efficiency of Ampere processors and the pivotal role of cloud native managed servers in enhancing Scaleway's COP-Arm instances. This combination, alongside existing services on legacy x86 systems, unlocks unprecedented efficiency.

GPU-free AI inference

Jeff's keynote highlighted Ampere processors as a sustainable alternative to GPUs for AI inference services, emphasizing their performance and power efficiency for general-purpose workloads. He noted that with approximately 85% of AI workloads in data centers or on the edge being inference-oriented, the majority of AI deployments can avoid the high costs and energy demands of traditional GPUs, thanks to Ampere's ability to deliver AI inference more economically and with lower energy consumption.

Continuing on these themes, the panel session, “Emerald City Silicon: Controlling Data Center Power Use in a Resource-hungry World”, offered some powerful takeaways:

- Data centers are being stretched thin and are increasingly unwelcome neighbors in power-challenged geographies

- The GPU-infused AI bow wave will break the system under current course and speed

- Cooling the compute infrastructure in many geographies is getting more complicated and increasingly exotic

- There are more responsible options available (such as Ampere CPUs), but the established technology giants are contra-motivated (and architecturally challenged) to offer power efficiency in the current industry climate

- As technologically inclined global citizens, we are all responsible for prioritizing power efficiency over a great many other considerations when building out the next generation of cloud services

You can catch the replay of our keynote, “Sustainable Computing for the Age of AI”, panel talks, and other Ampere activities at our CloudFest event page.

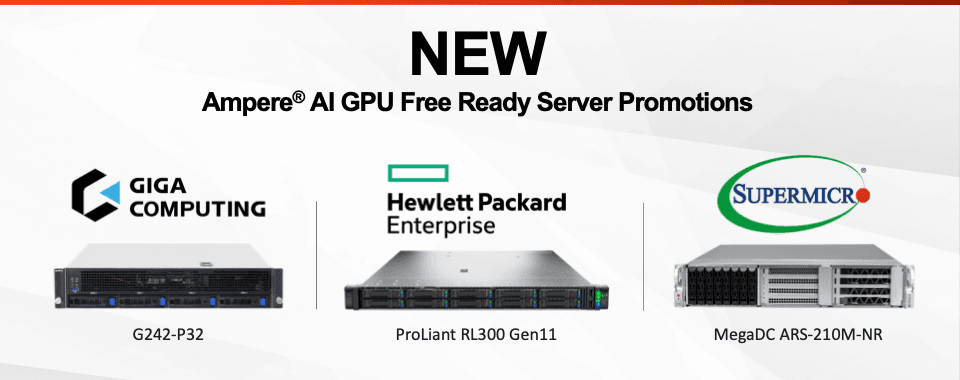

New partner platform announced

CloudFest served as the platform for unveiling a full range of GPU-free AI inference-ready servers by Ampere's partners. HPE debuted its RL300-based AI inference server, joining Supermicro and Giga Computing's existing lineup. These offerings can deliver 2-3 times more efficient inference compared to similar x86 platforms across various AI models. This efficiency is achieved using Ampere-optimized, prepackaged open frameworks, including PyTorch, TensorFlow, and ONNX.

These integrated platforms address growing industry demand for performance, flexibility, and environmentally responsible solutions for on-premises customers. In addition, Ampere instances available through a new cadre of regional and global cloud services partners offer up to 4x performance per dollar for real-time and batch inference workloads while maintaining exceptional power efficiency for the ESG-conscious cloud customer.

Ampere ecosystem poised for growth of sustainable cloud native services

The Ampere partner ecosystem is experiencing robust growth. At CloudFest, we were pleased to welcome more than 24 partners, showcasing service and system availability for our European and global customers directly from our booth.

After engaging with numerous service providers, MSPs, and software vendors at this year's event, we believe the prospects for developers embracing cloud native processing have never been more promising, with gains in performance, efficiency, and cost-effectiveness across cloud native services.

Additionally, the growth in managed Kubernetes services beyond major hyperscale providers is remarkable. Various boutique and start-up MSPs are now offering multi-architecture support, integrating Arm64 platforms with the traditional x86 base through managed Kubernetes services on a variety of cloud providers.

This evolution towards a multi-cloud, multi-architecture environment paves the way for a sustainable and heterogeneous service infrastructure, enabling the selection of the most suitable tools for specific needs.

Finally, Broadcom's adjustment of the VMware licensing schedule has significantly benefited cloud native communities globally. This change serves as a powerful incentive for providers to expedite their transition to cloud native software and hardware services build-out.

Ampere stands out as the ideal platform for these emerging services: you won't find a denser container or VM server platform anywhere. Compared to legacy x86 racks, Ampere racks achieve 3-4 times greater container density. This advantage stems from their higher core count and more efficient power usage, which let an Ampere installation host more containers within the same rack power budget.
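To make the reasoning behind that density comparison concrete, here is a minimal Python sketch of how core count and per-server power draw combine under a fixed rack power budget. Everything in it is an assumption for illustration: the `ServerProfile` type, the function names, and every number are hypothetical placeholders chosen for round arithmetic, not measured or published figures for any real server.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ServerProfile:
    """Hypothetical server description; all values are placeholders."""
    name: str
    cores: int      # physical cores per server
    watts: float    # sustained power draw per server, in watts


def servers_per_rack(rack_power_budget_w: float, server: ServerProfile) -> int:
    """Servers that fit in a rack when power, not space, is the limit."""
    return int(rack_power_budget_w // server.watts)


def containers_per_rack(rack_power_budget_w: float, server: ServerProfile,
                        cores_per_container: int = 1) -> int:
    """Containers per rack if each container is pinned to whole cores."""
    containers_per_server = server.cores // cores_per_container
    return servers_per_rack(rack_power_budget_w, server) * containers_per_server


if __name__ == "__main__":
    # Placeholder inputs only; substitute your own measured values.
    budget_w = 12_000.0
    profiles = [
        ServerProfile("hypothetical-high-core-count", cores=128, watts=400.0),
        ServerProfile("hypothetical-legacy", cores=64, watts=600.0),
    ]
    for profile in profiles:
        print(profile.name, containers_per_rack(budget_w, profile))
```

The point of the sketch is the shape of the calculation rather than its output: density per rack is the product of servers per power budget and containers per server, so gains in core count and in power efficiency multiply. Real comparisons would also need to account for memory, I/O, and per-container performance, which this sketch ignores.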

For developers: $300 in bare metal cloud credits!

Developers interested in experiencing Ampere servers can take advantage of a limited-time offer. Our partner, phoenixNAP, is running an exclusive promotion that provides credits for Bare Metal Cloud services, specifically using the HPE ProLiant RL300.

This opportunity allows developers to evaluate the impressive capabilities of these GPU-free AI inferencing servers. Experience firsthand their superior performance, which comes at a fraction of the power and cost of traditional GPU-equipped systems. This is your chance to achieve more efficient inferencing at a lower cost.

For more information:

Visit the Ampere CloudFest event page

Visit the HPE Partner page

Take advantage of Bare Metal Cloud credits with phoenixNAP

Sign up to our Developer Program

All data and information contained in or disclosed by this document are for informational purposes only and are subject to change. This document may contain technical inaccuracies, omissions and typographical errors, and Ampere is under no obligation to update or otherwise correct this information. Ampere makes no representations or warranties of any kind, including express or implied guarantees of noninfringement, merchantability or fitness for a particular purpose, regarding the information contained in this document and assumes no liability of any kind. Ampere is not responsible for any errors or omissions in this information or for the results obtained from the use of this information. All information in this presentation is provided “as is”, with no guarantee of completeness, accuracy, or timeliness.

© 2024 Ampere Computing LLC. All rights reserved. Ampere®, Ampere® Computing, Altra® and the Ampere® logo are all trademarks of Ampere Computing LLC or its affiliates.
