Azure Efficiency Solution Brief

Run Cloud Native Applications on the Azure Cloud with Ampere Altra VMs

Last year, Ampere announced the general availability of cloud instances Dpsv5 and Epsv5 featuring the Ampere Altra processors on Azure infrastructure—addressing the ever-expanding needs of customers developing and deploying cost-effective, scale-out, and cloud-native workloads. The Azure Ampere VMs have up to 64 virtual CPU cores, 8 GB of memory per core, 40 Gbps of networking bandwidth, and local, attachable storage. Microsoft describes them as “engineered to efficiently run scale-out, cloud-native diverse set of Linux workloads,” including open-source databases, Java, .NET, in-memory applications, big data analytics, web, gaming, and media servers. Ampere and Microsoft have developed a robust ecosystem of partners, enabling developers to get the most out of Ampere’s Azure Instances through software porting, optimization, and developer outreach.

Developers and software engineers face many challenges while trying out new VMs, onboarding workloads to a new architecture, or running new cloud native applications as microservices on the cloud. For workloads at scale, one of the primary challenges is effectively orchestrating multi-tenant VM instances in a performance-aware and cost-effective manner. Seamless architectural transition, scalability, uninterrupted user experience, and cloud service costs are some of the other demands that cloud customers, developers, and infrastructure engineers face.

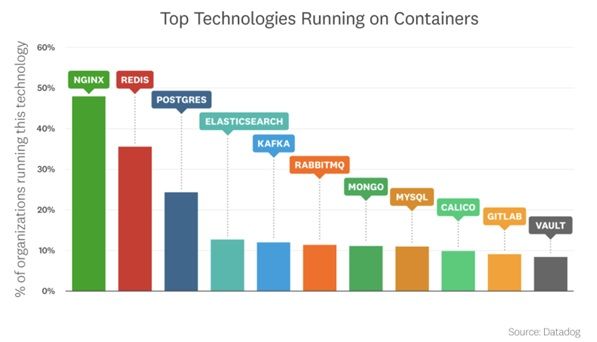

Modern engineering teams are increasingly expanding their use of containers and container-based microservice applications. The most popular off-the-shelf container images, as reported in Datadog research, highlight the breadth of applications used in common service installations in today’s cloud computing.

Since support for Arm from the open-source community has grown exponentially over the last few years, there is a tremendous variety of new software and services available.

Key applications with validated Arm-based versions include:

- Redis

- NGINX

- Postgres

- Kafka

- Elasticsearch

The applications listed above have also been benchmarked on Ampere VMs relative to Intel and AMD-based instances for performance and price-performance on Azure Cloud infrastructure.

Cloud Native Workloads and Ampere’s Leadership Performance

Cloud Native Processors enable cloud service providers (CSPs) to increase compute density in the datacenter with a compelling performance advantage, while enabling developers to reduce day-to-day operating costs and accelerate VM performance. For the wide spectrum of general-purpose workloads running in cloud-native environments, such as web services, databases, in-memory caching, and video services, Ampere VMs built on energy-efficient Cloud Native Processors demonstrate scalable, predictable, high performance and significantly improved price-performance.

Ampere VMs Optimized for a Variety of Solution Domains

A good example of a typical cloud native service that benefits from Ampere-based instances is a web service. Web services cover a broad class of applications that run as clusters of interrelated microservices. Each microservice performs one small part of the work required to produce results or content for users of the web-based service, and a complex web query can involve thousands or tens of thousands of processes. Ampere’s Cloud Native Processors, software, and Dps/Eps VMs combine to provide high performance and efficiency for web services.

The five typical service layers of such an application are:

- Web front end

- Key value store, object cache

- Search

- Backend database

- Messaging

The Ampere team has optimized and benchmarked all the web service layers that make up a cloud native application. The table below provides references to the workload briefs and tuning guides for further reading.

| Web Tier | Application | Workload Brief | Tuning Guide |
|---|---|---|---|
| Front end | NGINX | NGINX Brief | NGINX Tuning Guide |
| Search engine | Elasticsearch | Elasticsearch Brief | |
| Key-value store and caching tier | Redis | Redis Brief | |
| Back end | PostgreSQL | | PostgreSQL Tuning Guide |
| Messaging | Kafka | | |

In-Depth Performance Analysis of Key Workloads

NGINX: NGINX is one of the most popular web servers used in the cloud. In our tests, the Dpsv5 VMs demonstrated up to 30% better price-performance than comparable Dsv5 and Dasv5 VMs, and 20% higher throughput under a stringent SLA of 1 ms p99 latency.
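To make the p99 criterion concrete, the following is a minimal sketch of how a 99th-percentile latency check against a 1 ms SLA could be computed. It assumes the nearest-rank percentile definition, and the latency samples are hypothetical values chosen for illustration, not measurements from these tests.

```python
import math


def percentile(samples, pct):
    """Nearest-rank percentile: the smallest sample such that at least
    pct percent of all samples are less than or equal to it."""
    if not samples:
        raise ValueError("samples must not be empty")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)  # 1-based rank
    return ordered[max(rank, 1) - 1]


def meets_sla(latencies_ms, sla_ms=1.0, pct=99):
    """True when the pct-th percentile latency is within the SLA."""
    return percentile(latencies_ms, pct) <= sla_ms


# Hypothetical request latencies in milliseconds (illustrative only).
latencies_ms = [0.4] * 985 + [0.9] * 10 + [2.5] * 5

print("p99 latency (ms):", percentile(latencies_ms, 99))
print("meets 1 ms SLA:", meets_sla(latencies_ms))
```

Note that a handful of slow requests (here, the 0.5% at 2.5 ms) does not violate a p99 SLA, which is why throughput is reported at a fixed percentile rather than at the maximum latency.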

To achieve 1M requests per second with NGINX at a p99 latency of 1 ms, you need up to one-third fewer Dpsv5 virtual machines than Dsv5 or Dasv5 VMs. The result is $1.7M in annual cloud cost savings with no loss in performance.
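The VM-count comparison can be sketched as a simple sizing calculation. The 1M requests-per-second target comes from the text; the per-VM throughput figures below are hypothetical placeholders, chosen only to show the shape of the arithmetic.

```python
import math

TARGET_RPS = 1_000_000  # target from the text

# Hypothetical sustained requests/second per VM at the latency SLA.
# These are NOT measured values.
RPS_PER_VM = {"Dpsv5": 60_000, "Dsv5": 40_000}


def vms_needed(target_rps, rps_per_vm):
    """Smallest whole number of VMs whose combined throughput meets the target."""
    return math.ceil(target_rps / rps_per_vm)


counts = {vm: vms_needed(TARGET_RPS, rps) for vm, rps in RPS_PER_VM.items()}
fewer = 1 - counts["Dpsv5"] / counts["Dsv5"]

for vm, n in counts.items():
    print(f"{vm}: {n} VMs")
print(f"Dpsv5 needs {fewer:.0%} fewer VMs than Dsv5 under these assumptions")
```

Multiplying each VM count by the instance's hourly price and the hours in a year would turn the count difference into an annual cost difference.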

Elasticsearch: Elasticsearch is commonly deployed in the cloud because of its scalability, ease of use, and extensibility. It can be deployed seamlessly on hundreds of nodes, which makes it a popular choice for microservice-based applications. Dpsv5 Ampere VMs deliver 28% better price-performance than Dsv5 VMs and 26% better than Dasv5 VMs.
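The brief does not spell out its price-performance formula. A common convention, assumed here, is throughput divided by instance price, with the advantage expressed as a percentage over a baseline. The throughput and price inputs below are hypothetical.

```python
def price_performance(throughput, hourly_price_usd):
    """Throughput delivered per dollar per hour (assumed definition)."""
    if hourly_price_usd <= 0:
        raise ValueError("hourly_price_usd must be positive")
    return throughput / hourly_price_usd


def advantage_pct(candidate, baseline):
    """Percentage by which the candidate's price-performance exceeds the baseline's."""
    return (candidate / baseline - 1) * 100


# Hypothetical inputs: equal throughput, different hourly prices.
candidate = price_performance(throughput=1000, hourly_price_usd=0.50)
baseline = price_performance(throughput=1000, hourly_price_usd=0.64)

print(f"price-performance advantage: {advantage_pct(candidate, baseline):.1f}%")
```

Under this definition an advantage can come from higher throughput, a lower price, or both, so the two factors should be reported separately when they matter individually.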

AI inference: MLPerf ResNet-50 v1.5 in the single-stream and offline scenarios represents the most common use cases for image classification in the data center, reflecting latency-sensitive operation (one frame or image at a time) and bulk-throughput operation (as many images as can be returned in parallel), respectively. We ran both benchmarks using TensorFlow 2.7 with Ampere AI container images on the D16ps v5 VM using the fp16 data format. The legacy x86 VMs were run using TensorFlow 2.7 with DNNL and the fp32 data format; model accuracies with the two formats are nearly identical. The comparison shows that Ampere AI technology yields over 2x the performance of the legacy x86 VMs and an even more compelling 2x to 3x price-performance advantage.

To understand the cloud savings available with Azure Ampere VMs for a composite web service, consider an enterprise customer whose production volume of cloud IaaS compute averages 100K vCPUs per year. The monthly spend ranges from $1.5M to $3.3M, and the annual spend ranges from $18M to $36M, as explained in Note 1.

Note 1: The range is based on the payment model, from pay-as-you-go (the highest cost) to a three-year contract with a discount of roughly 50%. The cost covers baseline IaaS compute only and excludes additional or extended features such as storage, network acceleration, and memory optimization.

It was assumed that Intel, considering its scale, would incur the highest IT cost of $36M, and this was taken as the baseline for downstream calculations. Yearly spend and throughput were calculated for each of the five prioritized workloads using weights of 30% (NGINX), 30% (Redis), 20% (Postgres), 10% (Elasticsearch), and 10% (Kafka). Throughput was then normalized across all three silicon providers (Intel, AMD, and Ampere) to back-calculate the IT investment each would need for each workload. The per-workload investments were summed to give the total IT spend for AMD and for Ampere. Up to 30% fewer Ampere VMs per year than x86 VMs are required to deliver the same throughput.

The analysis concluded that, for normalized throughput (requests per second) across the three silicon providers, Ampere Altra processor-based VMs can reduce IT spend by approximately $11M relative to Intel Ice Lake-based VMs and by $7M relative to AMD Milan-based VMs.
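The weighted calculation described above can be sketched as follows. The $36M baseline and the workload weights come from the text. The relative price-performance ratios are hypothetical placeholders (the brief does not publish the per-workload values used), and the function name is illustrative, so the printed totals will not reproduce the brief's $11M and $7M figures.

```python
BASELINE_SPEND_USD = 36_000_000  # Intel baseline from the text

# Workload weights from the text; they sum to 1.0.
WEIGHTS = {
    "NGINX": 0.30,
    "Redis": 0.30,
    "Postgres": 0.20,
    "Elasticsearch": 0.10,
    "Kafka": 0.10,
}

# Hypothetical throughput-per-dollar ratios versus the baseline
# (1.25 means 25% more throughput for the same spend).
RELATIVE_PRICE_PERF = {
    "NGINX": 1.30,
    "Redis": 1.25,
    "Postgres": 1.20,
    "Elasticsearch": 1.28,
    "Kafka": 1.25,
}


def spend_for_equal_throughput(baseline_spend, weights, relative_price_perf):
    """Total spend needed to match the baseline's throughput on every workload."""
    total = 0.0
    for workload, weight in weights.items():
        baseline_workload_spend = baseline_spend * weight
        # Better price-performance means proportionally less spend
        # for the same throughput.
        total += baseline_workload_spend / relative_price_perf[workload]
    return total


spend = spend_for_equal_throughput(BASELINE_SPEND_USD, WEIGHTS, RELATIVE_PRICE_PERF)
print(f"spend at equal throughput: ${spend / 1e6:.1f}M")
print(f"savings vs. baseline:      ${(BASELINE_SPEND_USD - spend) / 1e6:.1f}M")
```

Because each workload is normalized separately before summing, a large advantage on a heavily weighted workload such as NGINX or Redis moves the total far more than the same advantage on Kafka or Elasticsearch.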

Testing Predictability

The cloud computing paradigm typically implies multitenancy. Your services will run, at least in part, on virtual machines, and those VMs are likely to share compute resources with applications run by other customers. As a result, your application performance may vary over time as other “neighbors” consume shared resources (noisy neighbor syndrome).

To understand the effects of noisy neighbors, Redis throughput was monitored under load on two different VMs, a Dpsv5 and a Dsv5. A fixed number of noisy neighbors, in the form of video encoding tasks (a popular cloud workload), were introduced periodically. On the Dsv5 instances, the noisy neighbors caused a drop in performance due to the effects of Simultaneous Multithreading (SMT) and Turbo frequencies. In contrast, Redis running on the Dpsv5 instances maintained the same level of throughput.
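One way to quantify the effect described above is to compare mean throughput during quiet intervals with mean throughput while the noisy neighbors are active. The sketch below assumes throughput has already been sampled per interval; all sample values and labels are hypothetical, not results from this test.

```python
import statistics


def throughput_drop_pct(quiet_samples, noisy_samples):
    """Percentage drop in mean throughput when noisy neighbors are active."""
    quiet = statistics.mean(quiet_samples)
    noisy = statistics.mean(noisy_samples)
    return (quiet - noisy) / quiet * 100


# Hypothetical Redis throughput samples in thousands of operations per second.
samples = {
    "VM with stable throughput": {
        "quiet": [200, 201, 199],
        "noisy": [200, 199, 201],
    },
    "VM affected by neighbors": {
        "quiet": [200, 202, 198],
        "noisy": [170, 168, 172],
    },
}

for label, s in samples.items():
    drop = throughput_drop_pct(s["quiet"], s["noisy"])
    print(f"{label}: {drop:.1f}% drop")
```

A drop close to zero across repeated neighbor bursts is what "predictable" means in this context; the standard deviation of the samples can be reported alongside it to show jitter.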

Testing Scalability

In theory, cloud resources are meant to be used to their fullest for the sake of efficiency. In the real world, however, the unpredictable nature of internet traffic makes right-sizing your infrastructure challenging. Should you run your applications at 60% of maximum capacity and overpay for your service, or should you run them close to maximum capacity and risk violating your SLAs? Cloud native instances help resolve these trade-offs. In this example, containers running x264 were scaled up and the frames per second processed by each container were measured. On a virtual machine built on legacy architecture, per-container frames per second did not scale linearly as more containers were added. Conversely, the frames per second processed by every x264 container on a Microsoft Azure Dpsv5 VM based on Ampere Altra scaled linearly. This linear scalability allows developers to scale their microservices with confidence that the compute will deliver performance at every level of demand.
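Linear scaling in this sense means that per-container frames per second stay roughly constant as containers are added, so aggregate throughput grows in proportion to the container count. The sketch below expresses that as a scaling-efficiency ratio; the frame rates are hypothetical and only illustrate the two behaviors described.

```python
def scaling_efficiency(fps_per_container):
    """Per-container fps at each container count, relative to the smallest count.

    A value of 1.0 means perfectly linear scaling; lower values mean each
    container slows down as more containers share the VM.
    """
    base_count = min(fps_per_container)
    base_fps = fps_per_container[base_count]
    return {n: fps_per_container[n] / base_fps for n in sorted(fps_per_container)}


# Hypothetical measurements: container count -> average fps per container.
linear_vm = {1: 30.0, 4: 30.0, 8: 30.0, 16: 30.0}
nonlinear_vm = {1: 36.0, 4: 36.0, 8: 27.0, 16: 18.0}

print("linear:    ", scaling_efficiency(linear_vm))
print("non-linear:", scaling_efficiency(nonlinear_vm))
```

An efficiency that stays near 1.0 up to full vCPU utilization lets capacity planning use a simple multiplication instead of a measured curve.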

The results below show media encoding output on Ampere VMs and demonstrate the predictability and scalability of Ampere VMs.

Software Migration

The cloud native paradigm is designed to be, among other things, multi-architecture friendly. Software ported to Arm64 can therefore coexist with legacy code and alternative architectures. Many applications abstract the underlying architecture behind a runtime layer, so in most cases development is “write once, run anywhere.” Most open-source applications for the Arm64 architecture are readily available either as Docker containers or through software repositories. Applications such as the in-memory cache Redis, the database PostgreSQL, and Big Data software stacks like Spark run on Ampere Altra virtual machines with little to no source code changes, on any cloud service provider.
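As a small illustration of multi-architecture awareness, a deployment script can detect the host CPU architecture and select the matching container platform string (for example, the value passed to `docker pull --platform`). The sketch below uses Python's standard `platform` module; the mapping table and function name are illustrative and cover only the two architectures discussed in this brief.

```python
import platform

# Machine names reported by common operating systems, mapped to the
# container platform strings used for multi-architecture images.
ARCH_TO_CONTAINER_PLATFORM = {
    "aarch64": "linux/arm64",  # Linux on Arm64, e.g. Ampere Altra
    "arm64": "linux/arm64",    # macOS on Arm64
    "x86_64": "linux/amd64",   # Linux/macOS on x86-64
    "amd64": "linux/amd64",    # Windows reports AMD64
}


def container_platform(machine=None):
    """Return the container platform for a machine name (default: this host)."""
    name = (machine or platform.machine()).lower()
    try:
        return ARCH_TO_CONTAINER_PLATFORM[name]
    except KeyError:
        raise ValueError(f"unsupported architecture: {name!r}") from None


print(container_platform("aarch64"))
print(container_platform("x86_64"))
```

Calling `container_platform()` with no argument uses the architecture of the machine running the script. With multi-architecture images, the container runtime normally makes this selection automatically, so an explicit check like this is mainly useful in build and test tooling.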

The extensive ecosystem of applications run in daily regression testing further demonstrates the strength of the software and tools available on Azure. Ampere has tested and verified a set of 130+ images on the Dpsv5 platform through daily regressions. These include common software packages found in many cloud-native stacks, which shows the maturity of the ecosystem on the Dpsv5 instances. See https://amperecomputing.com/solutions/azure?referrer=azure for the containerized applications, packaged for easy deployment and delivery via Docker Hub or other image repositories.

For step-by-step directions on moving your workloads to Ampere VMs, refer to the tuning guides at https://amperecomputing.com/tuning-guides and the developer access program at https://amperecomputing.com/developers

Conclusion

Ampere VMs are 1.44x more compute efficient than AMD VMs and 1.32x more than Intel VMs, where compute efficiency is defined as the number of VMs needed when throughput is normalized. Ampere VMs are also up to 1.26x faster (lower latency) than AMD and Intel VMs for a composite web-service application. With IT cost savings of up to $11M relative to Intel and $7M relative to AMD, Ampere VMs also lead on price-performance for cloud native workloads.

About Ampere

Built for sustainable cloud computing, Ampere Computing’s Cloud Native Processors feature a single-threaded, multiple core design that’s scalable, powerful, and efficient.

Additional Information

Evaluate your software readiness via Ampere Ready Software: https://amperecomputing.com/solution

Assess your stack readiness using workload briefs: https://amperecomputing.com/developers

Let us know what you’d like us to add: developer@amperecomputing.com