Data Analytics - Hadoop

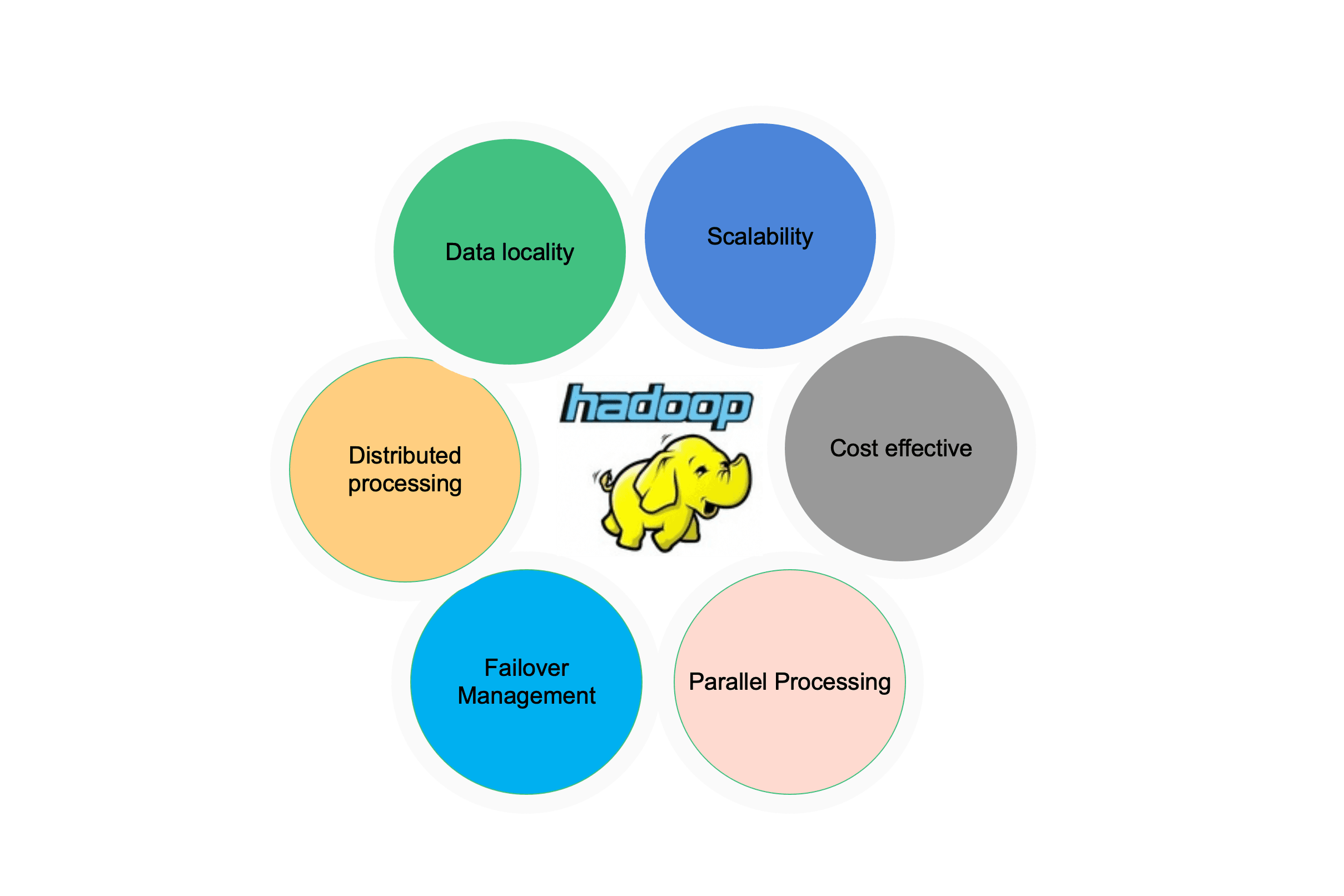

Features

Hadoop clusters multiple computers to analyze data in parallel. It consists of four main modules: HDFS, YARN, MapReduce, and Hadoop Common.

Applications collect data in various formats and send it to the cluster, where HDFS stores it as chunks spread across the data nodes. The NameNode holds the metadata for all of these chunks. A MapReduce job then runs against this data in HDFS across the data nodes. All of these tasks are computationally intensive: the data has to be pulled from HDFS, which demands high-performance storage; the work has to be coordinated across different computers, which demands a high-speed network; and thousands of tasks have to be processed quickly by fast processors before reducers aggregate the results into the final output.
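The map, group, and reduce flow described above can be illustrated with a small single-process sketch. This is not Hadoop's API: the function names (`map_phase`, `shuffle`, `reduce_phase`, `word_count`) and the sample chunks are hypothetical, and a real job would run the map tasks in parallel on the data nodes that hold each chunk.

```python
from collections import defaultdict


def map_phase(chunk):
    """Emit a (word, 1) pair for every word in one chunk of text."""
    return [(word.lower(), 1) for word in chunk.split()]


def shuffle(pairs):
    """Group all emitted values by key, as happens between map and reduce."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(grouped):
    """Aggregate the values for each key into a final count."""
    return {key: sum(values) for key, values in grouped.items()}


def word_count(chunks):
    """Run map over every chunk, then shuffle and reduce the results."""
    pairs = []
    for chunk in chunks:  # in a cluster, each chunk would be mapped on its own node
        pairs.extend(map_phase(chunk))
    return reduce_phase(shuffle(pairs))


# Hypothetical chunks standing in for blocks of a file stored in HDFS.
chunks = ["hadoop stores data", "hadoop processes data in parallel"]
counts = word_count(chunks)
print(counts)
```

The sketch keeps the three stages separate on purpose: the map step needs only its own chunk, which is what lets the cluster spread it across many machines, while the reduce step only sees values already grouped by key.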

Why Hadoop?

Scalability

Hadoop can process vast amounts of data and can be scaled easily by adding more nodes to the cluster.

Data Protection

Replication in Hadoop's HDFS keeps stored data robust and highly available.
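As a rough illustration of why replication keeps data available, the sketch below places each chunk on several nodes and checks what survives when one node fails. The round-robin placement, the node names, and the helper functions are assumptions made for this example; HDFS uses its own rack-aware placement policy. The replication factor of 3 matches the HDFS default.

```python
def place_replicas(num_blocks, nodes, replication=3):
    """Assign each block to `replication` distinct nodes, round-robin (illustrative only)."""
    if replication > len(nodes):
        raise ValueError("replication factor cannot exceed the number of nodes")
    return {
        block: [nodes[(block + i) % len(nodes)] for i in range(replication)]
        for block in range(num_blocks)
    }


def surviving_replicas(placement, failed):
    """Return, for each block, the replicas that are not on a failed node."""
    return {
        block: [node for node in holders if node not in failed]
        for block, holders in placement.items()
    }


# Hypothetical four-node cluster storing six blocks.
nodes = ["node1", "node2", "node3", "node4"]
placement = place_replicas(6, nodes)

# Simulate the loss of a single machine.
after_failure = surviving_replicas(placement, failed={"node2"})

# Every block still has at least one live copy, so the data stays readable.
all_available = all(after_failure.values())
print(all_available)
```

With three copies of each block on distinct nodes, losing one machine leaves at least two copies of every block in this sketch.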

Fault tolerance

The Hadoop ecosystem is designed under the assumption that hardware failures are inevitable, whether on individual machines or on whole racks of servers. The software framework is designed to handle such failures and maintain business continuity.

Cost control

Hadoop delivers compute and storage on more affordable commodity hardware.

Open source innovation

Hadoop is an open-source software framework backed by global open-source communities. The strength of the open-source community brings faster development and bug fixes.