Building a Petabyte Ceph Cluster with Micron, Supermicro and Ampere

Continuing our efforts from last year, we’ve extended our testing and evaluation of Ceph storage performance. Our white paper showed significant efficiency advantages of Ampere® Altra®-based systems (up to 80 cores) over common x86 platforms, along with interoperability testing of Arm64 and x86 nodes. In this post, we expand on that work and present lab performance results for the Ampere Altra Max M128-30 (128 single-threaded cores, 3.0GHz frequency).

Ceph is a popular open-source storage choice for cloud environments, OpenStack, Kubernetes, and other container-based platforms. It is a software-defined storage solution that addresses block, file, and object storage for your organization, offering different interfaces to cater to various storage needs within a single cluster. This can eliminate the need for multiple storage solutions or specialized hardware, thereby helping reduce costs and management overhead. We see customer demand for Ceph because it pairs scalability with reliability and fault tolerance, especially in the age of AI compute, where data demands are growing exponentially across object, block and file data types.

Generally, when we test storage workloads such as Ceph, our goal is to maximize throughput within constraints such as multiple architectures (scale-up, scale-out), multiple access methods such as block and file, erasure coding, etc. These workloads are typical of what our customers run in their own environments.

For this effort, we partnered with Supermicro and Micron to deliver a scale-up POC. This means delivering maximum storage capacity across a minimal number of storage nodes (with many data drives in each) while aiming to minimize power consumption. Scale-out architectures, on the other hand, generally use a large number of storage nodes, each holding a smaller number of disks, to increase performance. A scale-out architecture typically increases BOM, network, power and space costs.

Test setup and methodology

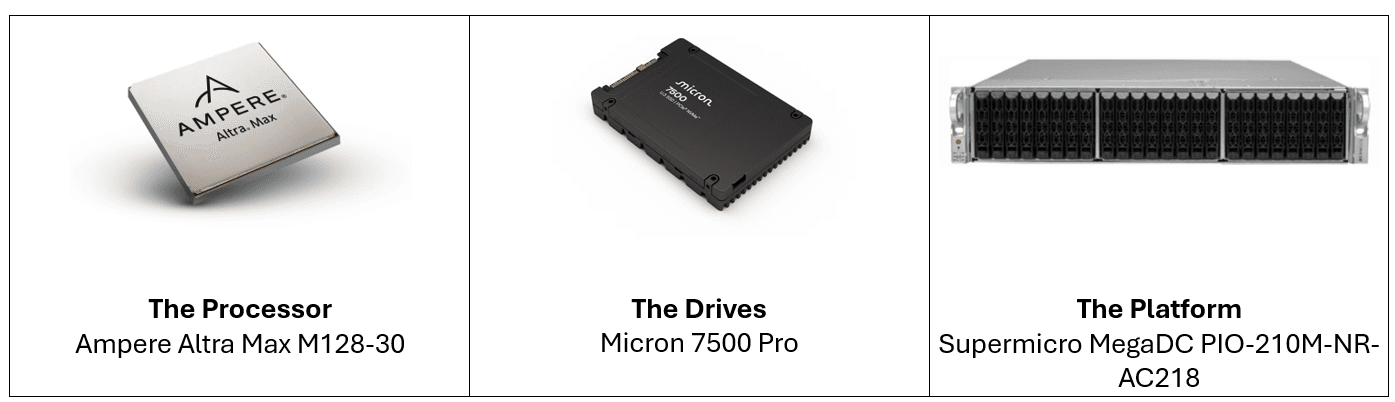

We deployed three storage nodes to minimize blast radius and to demonstrate linear performance scaling. By partnering with Supermicro and Micron, we can deliver these results on data center grade hardware designed for performance, minimal power consumption and high reliability. The three components described below stood out with the best performance.

The Supermicro MegaDC ARS-210M-NR base model is modified as an OEM configuration to handle as many as 24 individual U.3 storage drives. Micron’s 7500 Pro SSD continues to be a popular choice for those looking for a balance of performance, capacity, and price. It is well suited for workload domains ranging from AI to traditional databases, content delivery, web services and much more. Ampere’s M128-30 processor with 128 cores allows for massively parallel processing, a key ingredient in building scale-up architectures like this one.

The 3-node Ceph server cluster BOM contained:

- Nodes: 3 x Supermicro ARS-210M-NR

- CPU: 3 x Ampere Altra Max M128-30 (128 cores, 3.0GHz)

- Memory: 8 x 32GB DDR4-3200 per node

- Storage: 24 x Micron 7500 PRO 15360GB U.3 SSD per node ; 1 x 1TB M.2 OS drive per node

- Network: 1 x AOC-A100G-m2CM per node (1 x 100GbE private and 1 x 100GbE public Ethernet connection)

- OS: Ubuntu 24.04.1

- Ceph Version: Squid 19.2.0
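
The raw capacity implied by this BOM can be worked out directly from the drive count and drive size. The short sketch below does that arithmetic in decimal units; it covers raw capacity only, since usable capacity depends on the replication or erasure-coding settings of the pools, which are not stated here.

```python
# Raw capacity and memory implied by the BOM (decimal units,
# before any replication or erasure-coding overhead).
NODES = 3
DRIVES_PER_NODE = 24
DRIVE_GB = 15_360        # Micron 7500 PRO capacity as listed in the BOM
DIMMS_PER_NODE = 8
DIMM_GB = 32

total_drives = NODES * DRIVES_PER_NODE
raw_tb = total_drives * DRIVE_GB / 1_000
raw_pb = raw_tb / 1_000
memory_per_node_gb = DIMMS_PER_NODE * DIMM_GB

print(f"{total_drives} data drives, {raw_tb:,.2f} TB raw (~{raw_pb:.2f} PB)")
print(f"{memory_per_node_gb} GB memory per node")
```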

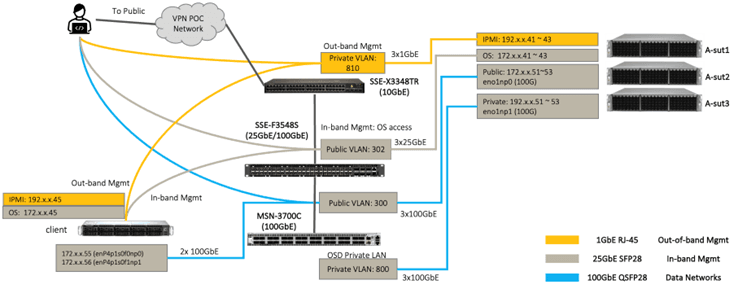

In total, the configuration featured 72 storage drives across the cluster (OS drives excluded) and roughly 1 petabyte of total capacity (72 x 15.36TB, or about 1.1PB raw). The network topology including client and switch devices in this lab setup was as follows:

A-sut1, A-sut2 and A-sut3 (“A-sut” refers to “Ampere system under test”) represent the three Supermicro nodes respectively.

Test methodology

We chose to measure Ceph performance in terms of Read and Write IOPS (input/output operations per second) using the FIO tool with its RBD engine. Writes are typically more demanding due to locking, whereas Reads are more performant because multiple processes can read data in parallel. A storage architecture is considered "performant" when it can handle increasing reads and writes in a linear manner, while maintaining acceptable latency levels.

We chose block storage for these tests because Ceph block storage is widely used in applications such as databases, virtual machines, Kubernetes CSI, and others. More importantly, it is commonly used by several of our customers.
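
As a rough illustration of what an FIO run against RBD can look like, the sketch below renders a job file for fio's `rbd` ioengine with one job section per image. The option names (`ioengine`, `clientname`, `pool`, `rbdname`, `rw`, `bs`, `iodepth`, `time_based`, `runtime`, `group_reporting`) are standard fio options, but every value here is a placeholder: the pool name, image names, client name, random access pattern, 4k block size, and runtime are assumptions, not the settings used in the lab. The helper `render_fio_rbd_job` is hypothetical.

```python
def render_fio_rbd_job(images, rw="randwrite", iodepth=32, bs="4k",
                       pool="rbd", clientname="admin", runtime_s=300):
    """Render fio job-file text with one job section per RBD image.

    All defaults are illustrative placeholders, not the lab's settings.
    """
    lines = [
        "[global]",
        "ioengine=rbd",            # fio's librbd-based engine
        f"clientname={clientname}",
        f"pool={pool}",
        f"rw={rw}",
        f"bs={bs}",
        f"iodepth={iodepth}",      # queue depth per job
        "time_based=1",
        f"runtime={runtime_s}",
        "group_reporting=1",
    ]
    for image in images:
        # One job per pre-created RBD image.
        lines += ["", f"[{image}]", f"rbdname={image}"]
    return "\n".join(lines) + "\n"


# Hypothetical image names; the images must already exist in the pool.
images = [f"bench-img-{i:02d}" for i in range(4)]
job_text = render_fio_rbd_job(images, rw="randread", iodepth=16)
print(job_text)
```

Saving the printed text to a file and passing it to `fio` would run all jobs in parallel; scaling the test then amounts to changing the number of images and the `iodepth` value.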

Test results

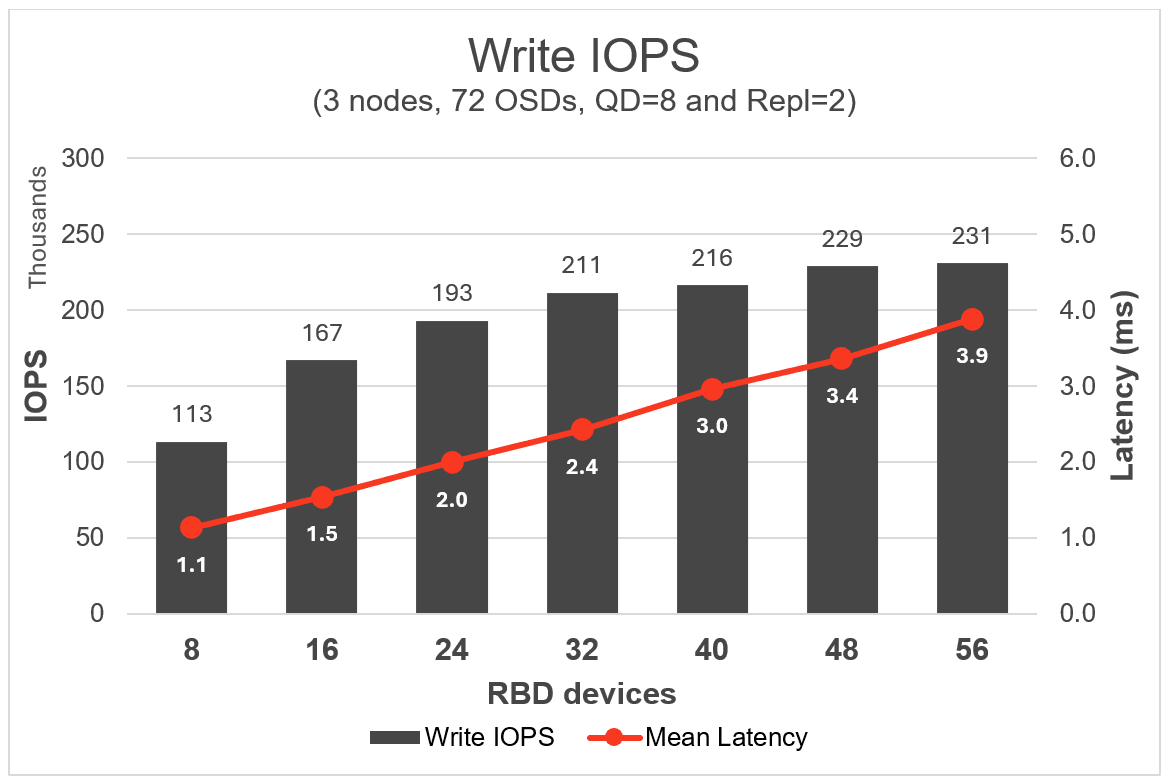

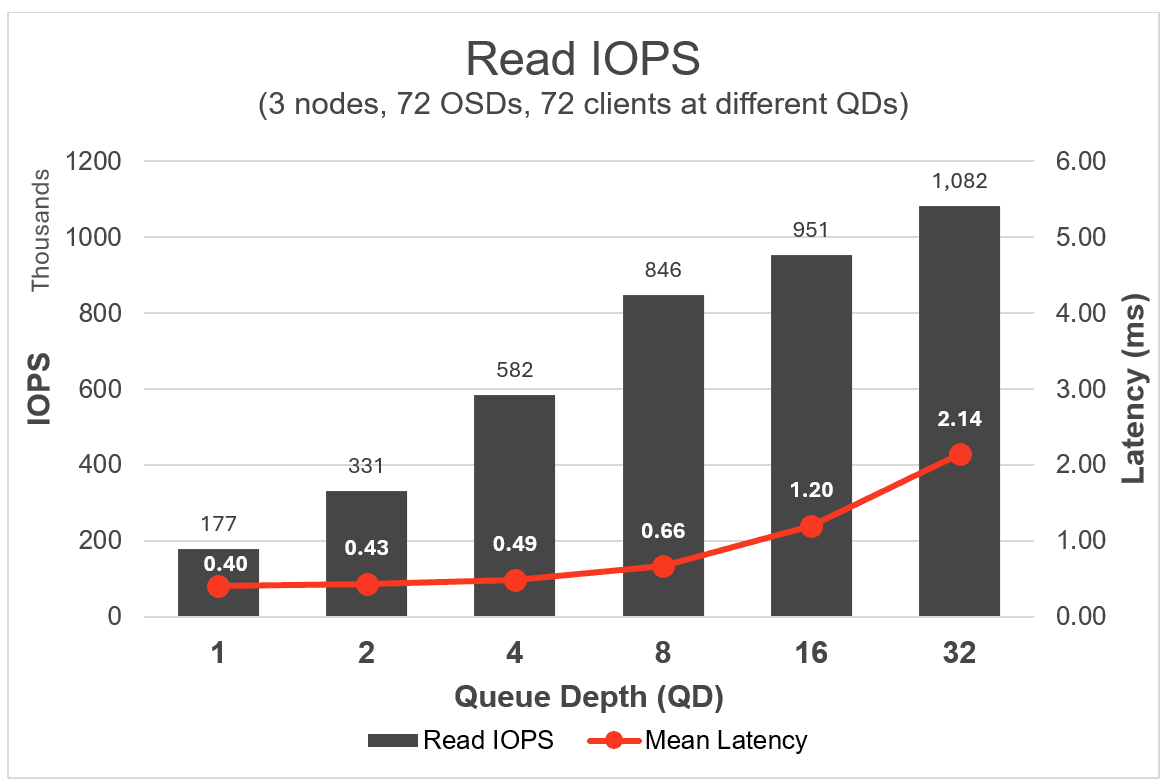

During the performance testing phase, we scaled both the Write IOPS and Read IOPS tests with a focus on showcasing linear and predictable performance scaling. Ampere’s design philosophy is to produce processors with linear, predictable, and power-efficient capabilities for various workloads, including storage.

RBD (RADOS Block Device) LUNs (Logical Unit Numbers, i.e. data drives) were pre-populated with data, and Read and Write FIO tests were conducted on those devices. The device count and QD (Queue Depth, i.e. the number of discrete I/O operations queued up for a device) were incrementally increased to determine the optimal IOPS and latency. The tests aimed to keep latencies below 4 milliseconds, as this is a performance threshold used by several of our storage customers and may be considered industry accepted.
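
The selection rule described above (raise device count or QD step by step, then keep the best result that stays under the latency ceiling) can be sketched as follows. The helper `best_under_limit` and the sweep data are hypothetical: the numbers are round placeholders chosen only to show the logic and are not lab measurements.

```python
LATENCY_LIMIT_MS = 4.0  # latency ceiling stated in the text


def best_under_limit(samples, limit_ms=LATENCY_LIMIT_MS):
    """Return the highest-IOPS sample whose mean latency is below limit_ms.

    Returns None when no sample meets the latency limit.
    """
    eligible = [s for s in samples if s["mean_latency_ms"] < limit_ms]
    return max(eligible, key=lambda s: s["iops"], default=None)


# Placeholder sweep data, NOT lab measurements: IOPS flattens out
# while latency keeps climbing as the setting (QD or device count) grows.
placeholder_sweep = [
    {"setting": 1, "iops": 10_000, "mean_latency_ms": 0.5},
    {"setting": 2, "iops": 19_000, "mean_latency_ms": 1.0},
    {"setting": 4, "iops": 30_000, "mean_latency_ms": 3.0},
    {"setting": 8, "iops": 31_000, "mean_latency_ms": 5.0},
]

choice = best_under_limit(placeholder_sweep)
print(choice)
```

In this placeholder sweep the last step adds only a small IOPS gain while pushing latency past the ceiling, so the rule settles on the step before it.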

Write Tests

- We achieved approximately 230k write IOPS with a mean latency of 3.5ms.

- Increasing beyond 56 RBD devices drove write latencies beyond 4ms and delivered only a marginal IOPS increase from the cluster.

- Ampere recommends an RBD device count of 48 or 56 for a balanced trade-off between latency and throughput.

Read Tests

- We achieved 1.1M read IOPS with a mean latency of 2.1ms from this cluster.

- Increasing QD or device (disk drive) count beyond 32 drove latencies beyond 4ms and delivered only a marginal IOPS increase from the cluster.

- Ampere recommends a QD of 16 or 32 for a balanced trade-off between latency and throughput.
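
One way to sanity-check the headline figures is Little's law, which relates mean concurrency to throughput and mean latency (I/Os in flight ≈ IOPS × latency). The sketch below applies it to the write and read numbers quoted above. The per-drive figure is a simple average of client-visible IOPS over the 72 data drives; it ignores any replication or erasure-coding overhead on the backend, which is not described here.

```python
def in_flight_ios(iops, mean_latency_ms):
    """Little's law: mean concurrency = throughput x mean latency."""
    return iops * mean_latency_ms / 1000.0


DATA_DRIVES = 72  # 3 nodes x 24 drives per node

# Headline results as quoted in the text (the write figure is approximate).
reported = {
    "write": {"iops": 230_000, "mean_latency_ms": 3.5},
    "read": {"iops": 1_100_000, "mean_latency_ms": 2.1},
}

summary = {}
for name, r in reported.items():
    summary[name] = {
        "in_flight": in_flight_ios(r["iops"], r["mean_latency_ms"]),
        "client_iops_per_drive": r["iops"] / DATA_DRIVES,
    }
    print(f"{name}: ~{summary[name]['in_flight']:.0f} I/Os in flight, "
          f"~{summary[name]['client_iops_per_drive']:,.0f} client IOPS per drive")
```

Under these assumptions the write result corresponds to roughly 805 I/Os in flight across the cluster and the read result to roughly 2,310, which gives a feel for the total client concurrency needed to reach those IOPS levels at the stated latencies.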

Depending on your use case, scale-up storage architectures might be the right approach to hit your sweet spot of total capacity, acceptable latency and throughput. Partnering with Supermicro and Micron has allowed Ampere to provide flexible platforms to build Ceph clusters that meet your needs. For more information visit us at amperecomputing.com. Our Storage Solutions page provides further insight into Ceph and other workloads. We invite you to learn more about our developer efforts, find best practices, insights, and give us feedback at: https://developer.amperecomputing.com. When you’re ready to chat, please contact the Ampere Sales team here.

Footnotes

All data and information contained herein is for informational purposes only and Ampere reserves the right to change it without notice. This document may contain technical inaccuracies, omissions and typographical errors, and Ampere is under no obligation to update or correct this information. Ampere makes no representations or warranties of any kind, including express or implied guarantees of noninfringement, merchantability, or fitness for a particular purpose, and assumes no liability of any kind. All information is provided “AS IS.” This document is not an offer or a binding commitment by Ampere.

System configurations, components, software versions, and testing environments that differ from those used in Ampere’s tests may result in different measurements than those obtained by Ampere.

©2024 Ampere Computing LLC. All Rights Reserved. Ampere, Ampere Computing, AmpereOne and the Ampere logo are all registered trademarks or trademarks of Ampere Computing LLC or its affiliates. All other product names used in this publication are for identification purposes only and may be trademarks of their respective companies.