3 Reasons Expensive Power is the Biggest Data Center Challenge

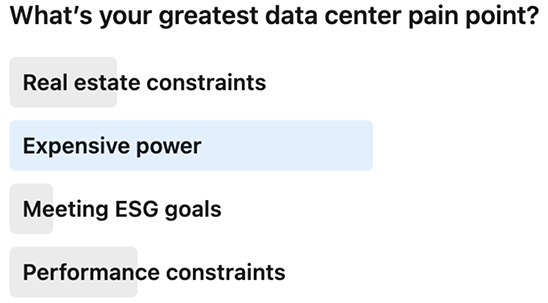

What are the greatest challenges for data center operators? According to our recent poll, respondents overwhelmingly chose expensive power as their number one concern, followed by other performance and efficiency concerns. The top three pain points were:

- Expensive power

- Performance constraints

- Real estate constraints

Limited performance and limited real estate nearly tied in polls conducted on two social media platforms, with performance constraints barely edging out real estate. Interestingly, real estate constraints have been linked to access to power and may be another consequence of power-hungry hardware in data centers. Regardless, given the current economic and energy climate, it’s no surprise that power efficiency is top of mind for every data center operator and that expensive power was by far the most common response.

What’s your greatest data center pain point? Respondents replied that expensive power is their number one data center challenge

"…electricity costs for industrial or commercial purposes. These rate increases have not been even across markets, with some markets experiencing little to no change from last year to others that have seen YoY price growth exceed 40 to 50%."

If you’re experiencing a YoY price increase even close to 40%, finding solutions has become job one. Some popular remedies for rising power costs are expensive to implement, while others are only marginally effective. These common practices include:

- Implementation of Cloud Native practices like containerization

- Consolidation of infrastructure

- Power management tool upgrades

- Innovative cooling technologies and techniques

- Energy efficiency practices

While each of these measures has its merits, none of them addresses the core of the problem: power-hungry servers built on ever larger, hotter, and more resource-consuming CPUs. Here are three reasons why continuing to build data center infrastructure on legacy x86 architecture will keep “expensive power” at the top of your list of data center pain points:

- Performance of legacy CPUs is increasingly power inefficient

- Cooling is a growing operational cost

- Offsetting greenhouse gases is getting more expensive

Power is at the core of data center challenges. Becoming more efficient will require adopting the right CPU, one designed to meet the requirements of modern data centers, and rethinking how to achieve greater performance, better heat dissipation, and a path to sustainability.

1 - The cost of power is rising as CPUs get bigger and more demanding

It’s a stressful time for data center operators, who find themselves suddenly over budget without having changed any planned operational costs. Add to that the growing demand for compute power and the expectation of consistent performance, coupled with moratoriums on data center build-out and limited access to power.

For most data center operators, the current climate is creating a perfect storm of higher costs and lower profits. Even so, leading CPU manufacturers continue to update and upgrade their x86 processor architecture, developed 40 years ago for a completely different kind of compute, to deliver higher performance while demanding more and more power.

"…increasing, and the leading-edge chips are increasingly becoming more power hungry, not less."

As a result, data centers running servers powered by legacy x86 processors are faced with a variety of inefficiencies, including:

- Racks operating at maximum allocated power with unused rack space

- Data center sprawl and wasted space caused by partially filled racks

- Noisy neighbors in shared tenancy impacting performance and stability during peak times

- Increased carbon footprint

- Lack of vacancy and compute availability for new customers or for expansion by current customers

Rising costs, carbon emissions, the complexity of advanced cooling systems, and dependence on limited resources are collectively driving data centers toward a new era of compute, one where the CPUs themselves are more efficient, require less power, and emit less heat.

2 - Newer generations of legacy x86 CPUs increasingly require advanced cooling

Provisioning more power to servers built on older technologies increases the amount of waste heat and the need for advanced cooling. As data centers equipped with larger, power-hungry CPUs get hotter, more airflow is needed to keep them cool, and more power is needed to run more fans at higher speeds.
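
The fan-power point can be made concrete with the fan affinity laws, a standard engineering approximation that is not part of the original poll or Ampere's study: for a given fan at constant air density, airflow scales linearly with rotational speed while shaft power scales with the cube of speed. The minimal Python sketch below applies that rule; the function name and the baseline fan figures (10 W at 100 CFM) are hypothetical.

```python
def fan_power_for_airflow(base_power_w: float, base_airflow: float, target_airflow: float) -> float:
    """Estimate fan power needed to move target_airflow, using the fan affinity laws.

    Affinity laws (same fan, same air density):
      airflow ∝ speed and power ∝ speed**3, therefore power ∝ airflow**3.
    This is an approximation, not a substitute for a fan's measured curve.
    """
    if base_airflow <= 0:
        raise ValueError("base_airflow must be positive")
    speed_ratio = target_airflow / base_airflow  # airflow scales linearly with speed
    return base_power_w * speed_ratio ** 3       # power scales with the cube of speed


if __name__ == "__main__":
    # Hypothetical fan: 10 W at a baseline airflow of 100 CFM.
    for airflow in (100, 120, 150, 200):
        watts = fan_power_for_airflow(10.0, 100.0, airflow)
        print(f"{airflow} CFM -> {watts:.1f} W")
```

Under this approximation, pushing 50% more air through the same fan takes roughly 3.4x the power (1.5³ ≈ 3.4), so fan energy climbs much faster than the airflow it delivers.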

When air cooling isn’t enough, liquid cooling and immersion systems must be deployed. While these systems promise to be more efficient and provide more cooling capacity than traditional air conditioning, they are also more complex and expensive to implement and maintain. Many data centers are stuck because they cannot be retrofitted for these advanced systems.

Data center cooling challenges include:

- Water scarcity: Air cooling systems that depend on water will run into resource limits before they can meet all of their cooling requirements.

- Nascent technology: Liquid cooling and immersion technologies are just now entering the data center market. Without standards for cooling liquids or deployment, data centers encounter environmental and delivery challenges.

- Energy costs: In an energy-constrained world, cooling still accounts for 20% to 40% of a data center's power requirements.

- Expensive overhauls: Many data centers aren't built for newer liquid and immersion techniques, so adopting them requires cost-prohibitive overhauls.

Each of these inefficiencies contributes to the data center's overall power usage effectiveness (PUE), a figure that has been leveling off over the last couple of years.

“…of 1.55 at their largest data center. Data center operators aim to get their PUE ratio as close to 1 as possible, with many of the newest data centers from hyperscale and colocation providers offering greater efficiency than those running older technologies.”
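
For readers new to the metric, PUE is defined (by The Green Grid, and standardized in ISO/IEC 30134-2) as total facility energy divided by the energy delivered to IT equipment, so a value of 1.0 would mean zero overhead for cooling, power distribution, and lighting. The minimal Python sketch below computes PUE and the cooling share from average power draws; the load figures are hypothetical, and in practice PUE is usually reported from annual energy (kWh) rather than a single power snapshot.

```python
from dataclasses import dataclass


@dataclass
class FacilityLoad:
    """Average power draw of a facility, in kW (all values here are hypothetical)."""
    it_kw: float       # servers, storage, and network equipment
    cooling_kw: float  # chillers, CRAC/CRAH units, fans, pumps
    other_kw: float    # power distribution losses, lighting, etc.

    @property
    def total_kw(self) -> float:
        return self.it_kw + self.cooling_kw + self.other_kw


def pue(load: FacilityLoad) -> float:
    """Power usage effectiveness: total facility power / IT equipment power."""
    if load.it_kw <= 0:
        raise ValueError("IT load must be positive")
    return load.total_kw / load.it_kw


def cooling_share(load: FacilityLoad) -> float:
    """Fraction of total facility power consumed by cooling."""
    return load.cooling_kw / load.total_kw


if __name__ == "__main__":
    site = FacilityLoad(it_kw=1000.0, cooling_kw=450.0, other_kw=100.0)
    print(f"PUE: {pue(site):.2f}")
    print(f"Cooling share: {cooling_share(site):.0%}")
```

With these placeholder loads the site lands at a PUE of 1.55, with cooling at about 29% of total power, inside the 20% to 40% range cited for cooling.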

The inherently low power consumption and higher core counts of Cloud Native Processors reduce the number of chips required in each server without sacrificing performance. With fewer processors drawing power, there is less need for expensive advanced cooling systems to sustain performance, and the sprawl of partially filled racks is reduced.

3 - Reaching net zero requires a new approach to data center design

Options for reaching ESG goals and carbon neutrality are limited for data center operators reliant on legacy x86 processor architecture. Power Purchase Agreements (PPAs) and renewable carbon credits are getting more expensive as demand grows along with rapid data center expansion.

Five years ago, data centers could buy enough carbon credits to offset greenhouse gas emissions. To keep up with demand, data center operators must now consider a two-pronged approach to energy conservation and sustainability.

Before defaulting to increasingly expensive carbon credits, efficient data centers must focus on significantly lowering power consumption to curb crippling increases in operating costs. According to Fabricated Knowledge, energy costs are rising faster than any other costs.

In fact, two major hyperscalers reported significant increases in energy costs in 2022, according to the Fabricated Knowledge report:

- Microsoft reported energy costs $800 million higher than expected

- Amazon reported a 2x increase in energy costs over the last two years

However, there is hope. Oracle, a major player in the cloud computing and enterprise software market, is reversing the trend toward higher costs and improving financial results by adopting Cloud Native Processors.

Rethinking power consumption at the CPU and server level is creating a path toward true efficiency and sustainability.

It’s time to think about data center efficiency differently

How do data centers become more efficient? Build your data center from the ground up with microprocessors designed specifically for the challenges of modern compute. Cloud Native Processors deliver 3x the data center efficiency of legacy x86 processors today.

Historically, CPU efficiency has been measured as performance per watt, with microprocessors compared on isolated workloads rather than in real multi-tenant compute environments running many workloads at once. Innovations in microprocessor architecture are changing how efficiency is measured, moving it from the watt to the rack. According to industry analyst Patrick Moorhead, performance per rack is the right metric.

Measuring data center efficiency at the performance-per-rack level widens the aperture to capture both improved performance and increased power savings. Combined, these two gains revolutionize data center operations and take on the power efficiency challenge head-on.
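
One way to see why the metric shifts from the watt to the rack is to model a rack that is limited by both space and power. The Python sketch below is a hypothetical illustration, not Ampere's methodology: the rack size (42U, 12 kW allocation), both server profiles, and the throughput unit are placeholders rather than measured data.

```python
from dataclasses import dataclass


@dataclass
class ServerSpec:
    """Hypothetical server profile; numbers are placeholders, not measured data."""
    name: str
    height_u: int           # rack units occupied
    power_w: float          # sustained power draw under load
    perf_per_server: float  # throughput in any consistent unit (e.g. requests/s)


def servers_per_rack(spec: ServerSpec, rack_units: int, rack_power_w: float) -> int:
    """Servers that fit within BOTH the rack's space and its power allocation."""
    by_space = rack_units // spec.height_u
    by_power = int(rack_power_w // spec.power_w)
    return min(by_space, by_power)


def perf_per_rack(spec: ServerSpec, rack_units: int, rack_power_w: float) -> float:
    """Total throughput of one rack, given whichever limit binds first."""
    return servers_per_rack(spec, rack_units, rack_power_w) * spec.perf_per_server


if __name__ == "__main__":
    RACK_U, RACK_POWER_W = 42, 12_000  # hypothetical rack: 42U, 12 kW allocation
    for spec in (
        ServerSpec("server A", height_u=1, power_w=800.0, perf_per_server=100.0),
        ServerSpec("server B", height_u=1, power_w=400.0, perf_per_server=90.0),
    ):
        n = servers_per_rack(spec, RACK_U, RACK_POWER_W)
        print(f"{spec.name}: {n} servers, {RACK_U - n * spec.height_u}U unused, "
              f"perf/rack = {perf_per_rack(spec, RACK_U, RACK_POWER_W):.0f}")
```

Here server A is faster per box but exhausts the 12 kW allocation after 15 servers, leaving 27U empty, while server B fills 30 slots and delivers 2,700 units per rack versus 1,500. That is the "racks operating at maximum allocated power with unused rack space" pattern listed earlier, expressed as performance per rack.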

5 ways Cloud Native Processors change the conversation

Recent studies by Ampere have shown that Cloud Native Processors can deliver 3x greater data center efficiency than legacy x86 processors. How is this achieved? Because they aren’t built on an existing architecture, Cloud Native Processor designers were able to start over and create microprocessors specifically for the challenges of today’s data centers.

Here are 5 ways that Cloud Native Processors are answering the challenges of today’s modern computing environments:

1. High-performance CPUs designed from the ground up for multi-tenant, cloud-scale environments are intrinsically more efficient

2. Greater rack efficiency lessens data center sprawl

3. Less heat generation reduces cooling requirements

4. Reduced waste heat lowers carbon emissions

5. Less data center build-out solves energy limitations

Given the efficiency and performance of these innovative processors, Ampere estimates* that in one year, a 100,000-square-foot data center servicing 18 billion requests could realize $31.5M in energy savings based on average US electricity costs.
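
The arithmetic behind an annual energy-savings estimate can be sketched in a few lines of Python: facility energy is the IT load multiplied by PUE and by the 8,760 hours in a year, and cost is that energy multiplied by the electricity price. This is not a reconstruction of Ampere's $31.5M estimate. The inputs (a 5 MW IT load halved to 2.5 MW, a PUE held constant at 1.5, and $0.10/kWh) are hypothetical placeholders, and holding PUE fixed is a simplifying assumption.

```python
HOURS_PER_YEAR = 8760  # 365 days * 24 hours


def annual_energy_cost(it_load_kw: float, pue: float, price_per_kwh: float) -> float:
    """Yearly electricity cost for an IT load, scaled up by PUE for facility overhead."""
    facility_kwh = it_load_kw * pue * HOURS_PER_YEAR
    return facility_kwh * price_per_kwh


def annual_savings(old_it_kw: float, new_it_kw: float, pue: float, price_per_kwh: float) -> float:
    """Cost difference between serving the same workload with two IT loads.

    Assumes (hypothetically) that PUE stays the same after the change.
    """
    return (annual_energy_cost(old_it_kw, pue, price_per_kwh)
            - annual_energy_cost(new_it_kw, pue, price_per_kwh))


if __name__ == "__main__":
    # Hypothetical inputs: 5 MW IT load cut to 2.5 MW, PUE 1.5, $0.10/kWh.
    saved = annual_savings(old_it_kw=5000, new_it_kw=2500, pue=1.5, price_per_kwh=0.10)
    print(f"Estimated annual savings: ${saved:,.0f}")
```

With these placeholder inputs the sketch reports $3,285,000 per year. Substituting a site's measured IT load, PUE, and tariff gives a first-order estimate for that specific facility.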

Retire, replace, and renew with Cloud Native Processors

As energy costs go up, some data center operators may be questioning whether now is the right time to replace existing hardware or whether they should wait.

Here are 5 reasons to deploy Cloud Native Processors in your data center:

1. The native software stack for Cloud Native Processors is growing more robust daily, and software compatibility is less and less of a barrier.

2. Retiring aging, inefficient x86 servers can significantly save both space and power while increasing compute capacity by 3x or more.

3. The operating cost of running your data center on legacy hardware will keep climbing as that hardware gets more and more power hungry.

4. Switching to Cloud Native Processors can yield 3x the compute capacity in the same space.

5. Moratoriums on data center build-out and limits on access to energy will soon make switching to efficient hardware a necessity.

Getting started deploying efficiency in your data center

Cloud Native Processors are designed with architecture transitions in mind and prioritize maintainability, performance, and portability—allowing data center operators to focus on their customers and products. Hundreds of packages and images support the cloud native architecture today, and the software ecosystem is growing.

Built for sustainable cloud computing, Ampere’s first Cloud Native Processors deliver predictable high performance, platform scalability, and power efficiency unprecedented in the industry.

This blog is part of a series about data center efficiency.

- 5 Keys to Ultimate Data Center Efficiency and Performance

- How HPE and Ampere are Tripling Data Center Efficiency

- How to Shrink Your Data Center and Increase Capacity

Learn more about data center efficiency in our eBook

Triple Data Center Efficiency with Cloud Native Processors

Watch our webinar:

How to Triple Efficiency, Double Performance, and Save in Your Data Center

Ready to get started saving in your data center? Talk to our expert sales team about partnerships or get more information or trial access to Ampere Systems through our Developer Access Programs.

Footnotes

The web services study here is based on performance and power data for many typical workloads using single node performance comparisons measured and published by Ampere Computing.