How to Shrink Your Data Center and Increase Capacity

As demand for cloud compute continues to grow, cloud service providers and enterprises have become more strategic about where they place new facilities. In data center planning, the real estate mantra of “location, location, location” has come to mean building where power is cheap and plentiful, near hydroelectric dams, or in colder climates that offer more sustainable energy sources or natural cooling.

In the absence of unlimited and inexpensive energy and real estate, data center build-out has become a major challenge for the cloud computing industry. Around the world, moratoriums and limitations on data center expansion—as well as tension in the capital markets—are creating barriers to growth in some of the most desirable data center locations, including:

- Singapore

- Amsterdam

- Ireland

- London

- Frankfurt

- Virginia

- Arizona

And the list keeps growing. Most recently, Dallas and Chicago joined the list of places experiencing the power crunch because of limits on data center energy consumption. At this point in history, data center operators must make a critical decision: either continue to build out or find solutions that will optimize the space inside the data center.

Maintaining the status quo means building out in less desirable locations or expanding existing data centers to accommodate growing compute demand. Alternatively, by adopting hardware that consumes less power, scales linearly with workload, and fully utilizes rack space, such as servers powered by Cloud Native Processors, operators have an opportunity to shrink the physical footprint of existing data centers and reduce data center sprawl.

Let’s take a deeper dive into the many ways Cloud Native Processors help solve real estate constraints in the data center when compared to x86 processors:

1. Lower energy consumption

2. Reclaim unused rack space

3. Provide a quicker path to Net-Zero initiatives

4. Provide higher performance for the majority of data center workloads

1. Real Estate Challenges Are Tied to Energy Costs and Access

Power requirements continue to rise even as energy supply constraints become the biggest impediment to data center growth.

For decades, legacy x86 processor suppliers have focused on increasing performance. Yet the performance gains of each product generation have not adequately addressed the elephant in the room: the need to drastically reduce power consumption.

This myopic focus on performance draws customer attention away from the processors' energy inefficiency. At data center scale, that inefficiency becomes severe, bloating the power requirements of mega data centers into the hundreds of megawatts and contributing to data center sprawl.

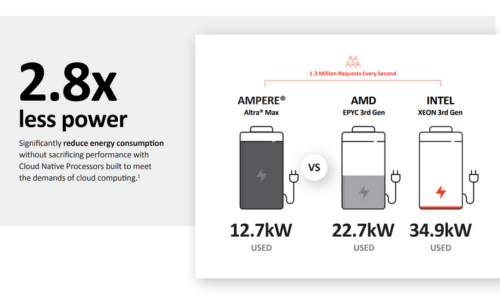

Moving away from the traditional processor architecture and adopting Cloud Native Processors changes the trajectory for the industry. Data center operators and their customers no longer need to choose between improved performance and lower power consumption. Microprocessors designed specifically for cloud native computing use up to 2.8 times less power while delivering the same or better performance than their x86 counterparts.

Reducing the energy consumed at the CPU level is key to reducing the physical footprint of the data center. Each low-power server built on Cloud Native Processors frees up power budget for additional servers in the same rack, reducing wasted rack space and shrinking the data center footprint. When measuring data center efficiency, performance per rack is the right metric.

2. Performance Per Rack Frees Up Data Center Space

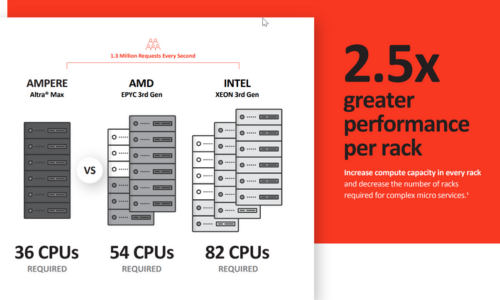

CPU performance can be measured on a single workload, but overall data center efficiency can only be measured when processors perform in real-world scenarios, with millions of requests and multiple workloads running simultaneously. While legacy x86 processors can show some efficiency on a single workload under ideal conditions, only Cloud Native Processors deliver the combination of lower power and higher performance per rack that leads to true sustainability as workloads scale out.

Performance per rack can be explained by looking at rack pitch and rack density. Rack pitch measures how closely rows of racks can be packed together. Each row needs hot aisle and cold aisle spacing between it and the next row, so under-utilized racks ultimately force operators to build new rows, significantly increasing the size of the data center.

Rack density refers to how many servers can be housed in a single rack. More power-efficient servers allow data center operators to fully populate racks and eliminate spare bays. Until the advent of Cloud Native Processors, the power requirements of legacy x86 processors forced operators to leave empty space in racks, pushing out the data center boundary and creating the sprawl we are experiencing today.
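The sketch below makes the rack-density argument concrete. It is a minimal Python example that counts how many servers fit in a rack when either the power budget or the physical slot count is the limit, then computes the resulting performance per rack. The function names and every number are hypothetical assumptions for illustration, not measured or published figures. These include the 12,000 W rack budget, the 40 slots, the 600 W versus 300 W servers, and the 100 throughput units per server.

```python
# Hypothetical sketch: every wattage, slot count, and throughput figure below
# is an illustrative assumption, not measured or published data.


def servers_per_rack(rack_power_budget_w: float, server_power_w: float, rack_slots: int) -> int:
    """Servers a rack can hold, limited by whichever runs out first: power or slots."""
    if server_power_w <= 0:
        raise ValueError("server_power_w must be positive")
    power_limited = int(rack_power_budget_w // server_power_w)
    return min(rack_slots, power_limited)


def perf_per_rack(
    rack_power_budget_w: float, server_power_w: float, rack_slots: int, perf_per_server: float
) -> float:
    """Aggregate rack throughput, in the same units as perf_per_server."""
    return servers_per_rack(rack_power_budget_w, server_power_w, rack_slots) * perf_per_server


if __name__ == "__main__":
    budget_w = 12_000  # hypothetical rack power budget in watts
    slots = 40         # hypothetical number of 1U slots in the rack
    for name, watts in [("server_a", 600), ("server_b", 300)]:
        n = servers_per_rack(budget_w, watts, slots)
        empty = slots - n  # bays left unused because the power budget ran out
        print(name, n, "servers,", empty, "empty slots,",
              perf_per_rack(budget_w, watts, slots, 100.0), "units/rack")
```

With these assumed inputs, the higher-power configuration hits its power limit at 20 servers and leaves 20 slots empty. The lower-power configuration fills all 40 slots and doubles performance per rack.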

In smaller installations and edge computing environments where ambient temperatures are already close to operational limits, expanding compute requires adding more power. Once fan power is accounted for, every watt added for compute must be doubled or tripled, whereas cloud native solutions increase compute capacity without exceeding existing power budgets or thermal constraints. For deployments where space is the only critical factor, being able to put more cores into a socket is key, and only Cloud Native Processors enable that.

Especially in large data centers serving millions of requests per second, we see up to 2.5 times better performance per rack with Cloud Native Processors, even for the most demanding workloads.

Some of the most prominent workloads that benefit from the performance and efficiency of Cloud Native Processors include:

- Web services

- Search

- Big Data

- Artificial Intelligence (AI)

- Video services

- Gaming

- Storage

Getting more performance per rack is key to overall data center efficiency and performance. Space savings begin with CPUs that draw less power. Lower power consumption per server translates to more densely populated racks and less data center infrastructure, including the equipment needed for power delivery, cooling, and interconnect.

3. Scalability Improves Performance and Increases Compute Density

At data center scale, workloads in the cloud consume many cores' worth of computing power. In fact, the term “Cloud Native” was first used to describe software concepts for building larger applications by combining many processes or threads, which are normally stateless and independent. In the cloud native framework, these applications are combined at scale to deliver much bigger services, including social networks, eCommerce sites, online banking, and the many everyday apps used by billions of people globally.

Legacy architecture processors try to keep up with these rising demands through scale-up techniques, including:

- Higher frequency scaling

- Larger instruction sets with wider vectors

- Hyperthreading

- Incorporation of specialized, power-hungry accelerators

These performance-improving techniques do not consider the physical footprint or the increased power consumption required to run them. Additions to CPUs such as accelerators benefit only a small number of customers, yet they add extra power draw for everyone who deploys them. In contrast, Cloud Native Processors, like the Ampere Altra Family, take a different approach to scalability. They pack many space-efficient cores onto the same die and interconnect them with high-performance mesh technology and a pipelined cache architecture. This design lets Cloud Native Processors efficiently feed every core from main memory.

Ampere Cloud Native Processors scale linearly from 1 to 128 cores, allowing customers to fully utilize all cores with no individual core performance penalty. Conversely, x86 processors require customers to set utilization thresholds between 50% and 60%, because increasing core utilization beyond this watermark degrades individual core performance, an unacceptable tradeoff for cloud service providers.
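Simple arithmetic shows the cost of a utilization ceiling. The Python below is a hypothetical illustration. It assumes per-core throughput stays constant up to the ceiling, which is the linear-scaling case described above. The core count, the per-core throughput, and the 50% cap are assumed values chosen only to show the effect. The core count is held constant so that the ceiling is the only variable.

```python
# Hypothetical sketch: core count, per-core throughput, and the utilization
# ceiling are illustrative assumptions, not vendor measurements.


def usable_throughput(cores: int, per_core_throughput: float, max_utilization: float) -> float:
    """Throughput an operator can plan on when cores are loaded only up to max_utilization.

    Assumes per-core throughput is constant up to the ceiling (linear scaling).
    """
    if not 0.0 < max_utilization <= 1.0:
        raise ValueError("max_utilization must be in (0, 1]")
    return cores * per_core_throughput * max_utilization


# A server capped at 50% utilization yields half of its nominal capacity...
capped = usable_throughput(cores=64, per_core_throughput=10.0, max_utilization=0.5)
# ...while a server that can run every core at full load yields all of it.
uncapped = usable_throughput(cores=64, per_core_throughput=10.0, max_utilization=1.0)
print(capped, uncapped)  # 320.0 640.0
```

Under these assumptions, a 50% cap means an operator needs twice as many servers, and therefore more rack space, to plan for the same load.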

The scale-out, multi-core design of Cloud Native Processors creates efficiencies in several ways, including:

- Delivering more than twice the performance

- Consuming up to 60% less power at scale

- Enabling linear performance scaling

- Reducing the physical footprint

Better space efficiency results in higher compute density per square foot and less supporting infrastructure and material, including concrete, steel, network switches, air conditioning, power conditioning, and more.

4. Less Physical Space Accelerates Net-Zero Initiatives

The tremendous growth of the cloud, and of the data generated across many industries, has motivated data center operators to pursue design efficiencies from both operational and capital cost perspectives. Concerns about sustainability, the inefficiencies associated with energy consumption, and the environmental impact of physical infrastructure sprawl have pushed the carbon footprint of data centers into the spotlight.

Progress toward lowering the environmental impact of data centers has included investing billions of dollars in renewable energy offsets for Scope 2 greenhouse gas (GHG) emissions. It has also included achieving increasingly efficient Power Usage Effectiveness (PUE) ratings by focusing on hot/cold aisle layout and modern cooling technology. Still, the growth of cloud computing and increasing corporate urgency to move beyond carbon neutral to net zero remain major challenges.

While past PUE efforts have yielded meaningful gains in overall data center efficiency, they have diverted attention from where data center efficiency fundamentally begins: the compute infrastructure. Efficiencies in both power usage and real estate consumption start with the server itself and are best measured at the rack level.
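PUE is defined as total facility power divided by IT equipment power. It therefore measures facility overhead but says nothing about how much power the IT equipment itself draws. The hypothetical Python sketch below illustrates that point. It assumes a PUE of 1.5 and a 1,000 kW IT load, both illustrative values. It also holds PUE constant as IT load falls, which is a simplification, since real facilities do not always behave that way.

```python
# Hypothetical sketch: the 1,000 kW IT load and the PUE of 1.5 are illustrative
# assumptions, and PUE is (simplistically) held fixed as IT load changes.


def pue(total_facility_kw: float, it_equipment_kw: float) -> float:
    """Power Usage Effectiveness: total facility power divided by IT equipment power."""
    return total_facility_kw / it_equipment_kw


def facility_power(it_equipment_kw: float, pue_ratio: float) -> float:
    """Total facility power implied by an IT load and a PUE ratio."""
    return it_equipment_kw * pue_ratio


before = facility_power(1_000.0, 1.5)  # facility draw with the original servers
after = facility_power(500.0, 1.5)     # same PUE, servers drawing half the power
print(pue(before, 1_000.0), pue(after, 500.0), before, after)  # 1.5 1.5 1500.0 750.0
```

Under these assumptions, halving server power leaves PUE unchanged at 1.5 while cutting total facility power from 1,500 kW to 750 kW. A PUE rating alone would not reveal that saving.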

Without a change in approach, processors—and the servers where they’re installed—will continue to draw more power from one generation to the next. As a result, we will be unable to combat data center sprawl and rising energy consumption resulting from ever-growing compute demands.

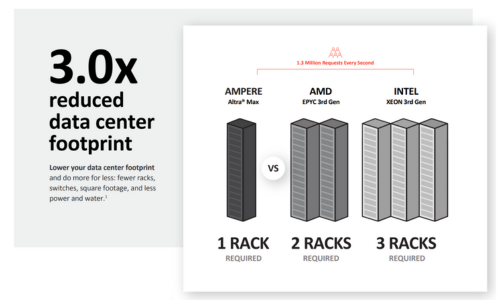

Rack-level efficiencies scale linearly up to the data center, affecting the size and number of data centers needed now and in the future. Improving rack density is the most valuable tool for operators and architects seeking to reduce the physical and carbon footprint of a data center.

Ampere has published rack efficiency metrics to help operators evaluate service implementations that require compute resources at scale. Our analysis shows that typical cloud native workloads can be up to 3 times more efficient than solutions from legacy x86 vendors. In a typical web service example, the workload needs 1 rack with Ampere versus 3 with Intel and 2 with AMD.
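Converting performance per rack into a rack count is a ceiling division: divide the target throughput by the per-rack throughput and round up. The Python below is a minimal sketch with hypothetical inputs. The target load, the configuration names, and the three per-rack throughput values are illustrative assumptions, not Ampere's published web service data.

```python
import math

# Hypothetical sketch: the target load and per-rack throughputs are
# illustrative assumptions, not measured or published figures.


def racks_needed(target_throughput: float, throughput_per_rack: float) -> int:
    """Smallest whole number of racks whose combined throughput meets the target."""
    if throughput_per_rack <= 0:
        raise ValueError("throughput_per_rack must be positive")
    return math.ceil(target_throughput / throughput_per_rack)


target = 1_000_000  # hypothetical service load in requests per second
for config, per_rack in [("config_a", 1_000_000), ("config_b", 400_000), ("config_c", 600_000)]:
    print(config, racks_needed(target, per_rack))
```

Partial racks cannot be deployed, so a configuration delivering 400,000 requests/s per rack still needs 3 racks for a 1,000,000 requests/s target, even though 2.5 would suffice on paper.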

5. Cloud Native for Every Compute Environment

Power constraints are everywhere, but not every environment has the same power and space restrictions as a large-scale data center. In lower-power environments, such as an edge data center or an embedded computing device, the inherent power restriction limits the compute performance possible. By delivering more compute performance per watt, Cloud Native Processors deliver higher performance within any power budget. This translates to more work done in a smaller power or space envelope.

In space-constrained environments, such as those common in the edge computing industry, rack density and resources for cooling and housing data centers become limiting factors. Because these facilities need to be close to densely populated areas, edge data centers have fewer options for where they can be built than their larger cloud data center counterparts. While brownfield installations are more feasible for smaller edge data centers, conserving space within the facility is also a greater concern.

Cloud Native Processors are a natural fit for the space, power, and cooling constraints of edge and embedded computing—and those applications where devices run solely on battery power. Real estate proximity, access to power, and availability of greenfield opportunities will continue to be limiting factors for data center build-outs. Scalable and efficient, Cloud Native Processors reduce data center power consumption and physical space requirements—a foundation for rack level efficiency and a future of sustainable compute in any size installation.

Built for sustainable cloud computing, Ampere’s Cloud Native Processors deliver predictable high performance, platform scalability, and power efficiency unprecedented in the industry.

This blog is part of a series about data center efficiency:

- 5 Keys to Ultimate Data Center Efficiency and Performance

- How HPE and Ampere are Tripling Data Center Efficiency

- 3 Reasons Expensive Power is the Greatest Data Center Challenge

- How Can Data Centers be More Sustainable?

Learn more about data center efficiency in our eBook:

Triple Data Center Efficiency with Cloud Native Processors

Watch our webinar:

How to Triple Efficiency, Double Performance, and Save in Your Data Center

Ready to get started saving in your data center? Talk to our expert sales team about partnerships or get more information or trial access to Ampere Systems through our Developer Access Programs.

**Footnotes**

The web services study here is based on performance and power data for many typical workloads using single node performance comparisons measured and published by Ampere Computing.