5 Keys to Ultimate Data Center Efficiency and Performance: A Complete Guide

The increasing demand for cloud computing, the need for big data analytics, and the rise of artificial intelligence (AI) workloads—among other factors—are driving the growth of data center services. While demand generates growth opportunities for data center operators, it has also created unnecessary data center sprawl and the need for more infrastructure and more power.

Today, data center operators face multiple challenges, including:

- Rising capacity demands

- Soaring energy consumption

- Real estate constraints

- Carbon emission regulations

How Cloud Native Processors Triple Data Center Efficiency

Deploying Cloud Native Processors is key to ultimate data center efficiency, allowing you to do more with less, including:

- Reduce rack space up to 3x

- Get up to 2.5x better performance per rack

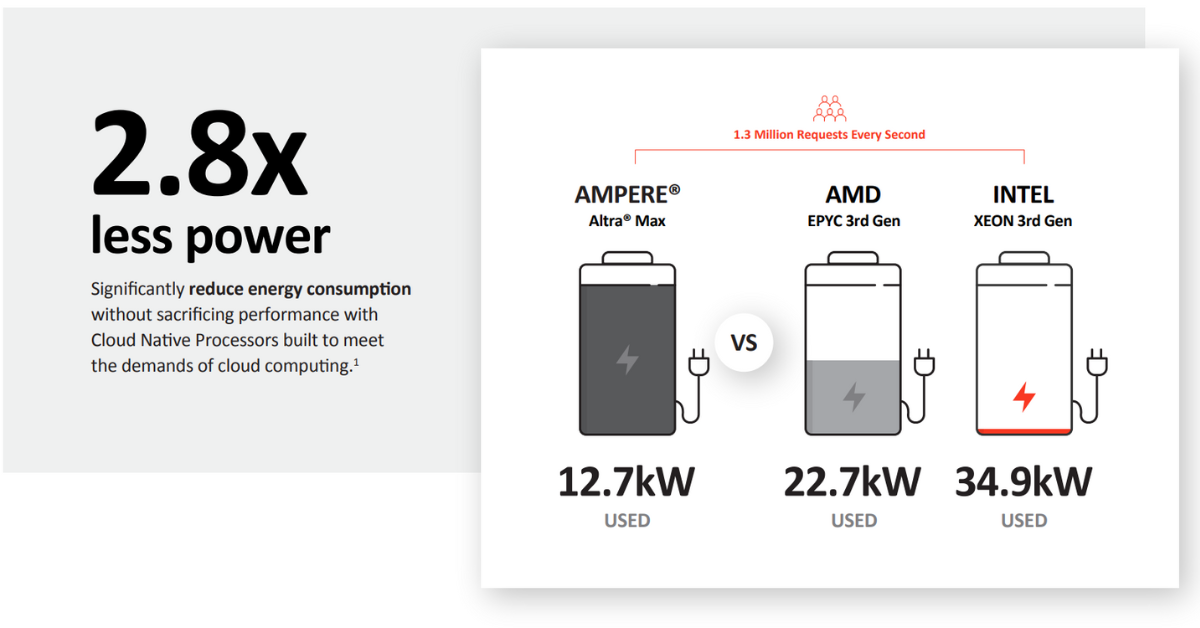

- Consume up to 2.8x less power

- Save $31.5 million yearly (based on a 100,000 square foot data center servicing 18 billion requests per second)

- Reduce carbon emissions equivalent to 39,035 cars off the road

Until the advent of Cloud Native Processors, data center operators have been contending with the inadequate performance and efficiency of legacy x86 processors—designed 40 years ago for use in client computing devices. Legacy CPU manufacturers have attempted to keep up with the demands of the persistent capacity growth of the cloud, but scalability and energy consumption continue to plague the data center industry.

Since Cloud Native Processors are designed specifically to address the needs of growing data centers and the shift to cloud computing, they are inherently more efficient and deliver greater sustainability and higher performance than legacy processors.

Measuring workload or service efficiency requires looking beyond commonly cited benchmarks or data points taken at the single CPU level. These numbers are often misleading because they do not consider what the workload at scale will consume in space, power, and other data center resources. Performance per rack is a more valuable metric for estimating the overall efficiency of a particular processing infrastructure and a scale unit meaningful to data center architects.

that has consistent performance like cloud native – this rack level measurement is the best measurement.

The performance per rack metric represents a new approach for evaluating performance at scale in power-constrained environments. Adopting solutions that deliver up to 2.5x better performance per rack eliminates the need for data center operators and their customers to choose between power efficiency and performance, yielding more sustainable designs for the modern cloud era.
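As a rough illustration of the performance per rack idea, the sketch below fills a rack up to a fixed power budget and compares total throughput. The server profiles, the 12 kW budget, and all function names are hypothetical placeholders rather than measured data, and the model ignores physical slot limits and networking overhead.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ServerProfile:
    """Hypothetical per-server figures; not measured data."""
    name: str
    requests_per_sec: float  # sustained throughput of one server
    watts: float             # power draw of one server under that load


def servers_per_rack(profile: ServerProfile, rack_power_budget_w: float) -> int:
    """Number of whole servers that fit within the rack's power budget."""
    return int(rack_power_budget_w // profile.watts)


def performance_per_rack(profile: ServerProfile, rack_power_budget_w: float) -> float:
    """Total throughput of a rack filled up to its power budget."""
    return servers_per_rack(profile, rack_power_budget_w) * profile.requests_per_sec


# Illustrative inputs only (assumptions, not vendor data).
legacy = ServerProfile("legacy", requests_per_sec=100_000, watts=800)
efficient = ServerProfile("efficient", requests_per_sec=100_000, watts=400)
RACK_BUDGET_W = 12_000

legacy_rack = performance_per_rack(legacy, RACK_BUDGET_W)
efficient_rack = performance_per_rack(efficient, RACK_BUDGET_W)
ratio = efficient_rack / legacy_rack

print(f"performance per rack ratio: {ratio:.1f}x")
```

With these made-up inputs, two servers with identical single-node throughput differ by 2x at the rack level purely because of power draw, which is the effect a single-CPU benchmark does not show.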

Let’s look at the 5 key areas that make data centers more efficient and sustainable over time.

#1 Reducing rack space by up to 3x

Reducing your data center footprint is key to optimizing operations and increasing efficiency. Cloud Native Processors deliver the same or more compute power as their legacy counterparts while occupying less space. By packing in more cores on a single die, these power-optimized CPUs give data center infrastructure providers more cores per rack.

With more compute capacity in every rack, you can decrease the overall data center footprint by up to 3x and free up floor space for additional capacity, or simply conserve the unused space and energy for the future. Fewer racks for the same or better compute capacity translate into many tangible benefits, including:

- Reducing energy consumption: Fewer racks require less power and cooling, thereby lowering energy requirements and operating costs. Some estimates show that 30% to 40% of total energy costs are used for cooling.

- Curbing new data center builds: Fewer racks within an existing data center leave spare capacity for the future—without increasing its physical footprint.

- Shrinking infrastructure: Fewer racks reduce the need for everything that comprises the data center infrastructure—from network gear to power delivery and management—further reducing the power and cooling demand for every rack saved.
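The relationship between per-rack throughput and footprint can be sketched with simple arithmetic. The target load and per-rack figures below are hypothetical, chosen only to show how a 3x gain per rack translates into rack counts once whole racks are rounded up.

```python
import math


def racks_needed(target_rps: float, rps_per_rack: float) -> int:
    """Whole racks required to serve a target request rate."""
    if rps_per_rack <= 0:
        raise ValueError("rps_per_rack must be positive")
    return math.ceil(target_rps / rps_per_rack)


# Illustrative inputs only (assumptions, not measured data).
TARGET_RPS = 30_000_000
legacy_racks = racks_needed(TARGET_RPS, rps_per_rack=1_500_000)
efficient_racks = racks_needed(TARGET_RPS, rps_per_rack=4_500_000)

print(f"legacy racks:    {legacy_racks}")
print(f"efficient racks: {efficient_racks}")
print(f"footprint reduction: {legacy_racks / efficient_racks:.2f}x")
```

Because racks come in whole units, a 3x per-rack improvement yields slightly less than a 3x footprint reduction in this example, which is one reason such figures are typically quoted as "up to".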

#2 Getting 2.5x better performance per rack with Cloud Native Processors

Power efficiency and performance are two of the pressing compute challenges Cloud Native Processors have overcome with rack level efficiency—providing a path to a sustainable data center ecosystem. Rack level efficiency is achieved by Cloud Native Processors in several ways, including:

- Scalability

- Reduced power consumption

- Higher rack density

- Smaller physical space requirements

While the competition claims better efficiency through increased compute performance, this is only half of the story. Cloud Native Processors deliver efficiency in two dimensions: performance and low power. When competitors attempt to increase performance at the expense of much higher power consumption, runaway energy and space demands result, further aggravating the power consumption and cost challenges of the data center market.

To understand the state of the market and why the combination of power efficiency and performance is key to stopping the runaway energy and space demands that define the industry today, we need to look at how efficiency is measured.

Using synthetic microbenchmarks is a common practice in the semiconductor industry. While benchmarks such as SPECrate Integer (SIR) are useful to gauge the raw theoretical performance of comparable processors under high load, they do not represent real-world performance of complex services at scale.

When the most commonly occurring microservices deployed in containers (applications such as NGINX, Redis, Kafka, MongoDB, MySQL, and more) are tested and tuned to simulate common services found in the cloud today, the results show Cloud Native Processors deliver unprecedented performance per rack for a variety of services.

Sustainability is achieved by combining performance with power efficiency. Cloud native services such as these can expect to see 2x to 3x better performance at the rack level, leading the way as a solution for data centers of all sizes.

#3 Same performance with 2.8x lower power consumption

Getting enough power to consistently deliver uninterrupted services today, ensuring the availability of enough power to continue to meet rapidly growing demand, and the concerns over the continual rise in energy costs have spurred operators to seek solutions that will lead them into the new era of cloud computing.

In addition, energy limits and moratoriums on data center expansion are a reality across the globe. To gain community support and meet the energy and space limits now being imposed, data center operators must prove that they are doing all they can to mitigate the environmental impact of their data centers.

Key to community concerns are the impacts of significant energy use, which can lead to higher costs for everyone in the neighborhood as well as the threat of service interruptions during high usage times.

Currently, data centers operating with legacy x86 processors are trying to improve energy efficiency in several ways, including:

- Cooling optimization: Using ever more efficient cooling infrastructure and complicated and expensive cooling methodologies.

- Power management policies: Auto-shutdown of idle servers, reducing server refresh rates, and complicated power-saving modes.

- Renewable energy sources: Solar and wind power and other options to reduce reliance on traditional sources of energy.

Unfortunately, focusing solely on innovative cooling solutions does not address the core of the problem. Since the server infrastructure is the largest consumer of power in the data center, heat mitigation efforts are more effective and easier to implement if the server itself is more efficient.

Cloud Native Processors can reduce the energy consumed to nearly one-third of what less efficient CPUs require, a reduction of up to 2.8x. While energy savings will vary with different workloads, a significant reduction in power is realized across the board.
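Because "2.8x lower power" is easy to misread, it helps to convert the multiplier into a remaining fraction and a percentage reduction. The helper names below are illustrative; only the 2.8x figure comes from this article.

```python
def remaining_fraction(times_lower: float) -> float:
    """Fraction of the original power still consumed after an 'N x lower' reduction."""
    if times_lower <= 0:
        raise ValueError("times_lower must be positive")
    return 1.0 / times_lower


def percent_reduction(times_lower: float) -> float:
    """Percentage of the original power that is saved."""
    return (1.0 - remaining_fraction(times_lower)) * 100.0


factor = 2.8  # headline figure quoted in this section
print(f"remaining: {remaining_fraction(factor):.3f} of original power")
print(f"reduction: {percent_reduction(factor):.1f}%")
```

In other words, a 2.8x reduction leaves a little over one-third of the original consumption, which is a saving of roughly 64%, not a saving of one-third.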

#4 Efficient data centers save millions every year

Rising costs are a concern for data center operators, as they negatively impact their bottom line and their ability to remain competitive. Although larger data centers may have some efficiencies of scale, the energy crises and growing demand are putting pressure on data centers of all sizes to deliver consistent, scalable services at competitive prices.

While some cost-cutting measures can help streamline operations, only adopting Cloud Native Processors will cut the cost of server operations in half. A 100,000 square foot data center servicing 18 billion requests per second and implementing Cloud Native Processors can deliver as much as $31.5 million in energy savings every year, based on US electricity costs.
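The general shape of an annual energy cost estimate can be sketched as follows. This does not reproduce the $31.5 million figure, whose underlying inputs are not given here; the IT load, PUE, and electricity price are hypothetical assumptions, and the sketch assumes PUE stays constant as server power drops.

```python
HOURS_PER_YEAR = 8760  # 24 hours * 365 days


def annual_energy_cost(it_load_kw: float, pue: float, usd_per_kwh: float) -> float:
    """Yearly electricity cost: IT load scaled by PUE, run continuously."""
    facility_kw = it_load_kw * pue
    return facility_kw * HOURS_PER_YEAR * usd_per_kwh


# Illustrative inputs only (assumptions, not data from the study).
IT_LOAD_KW = 10_000     # average server power draw of the legacy deployment
PUE = 1.5               # assumed Power Usage Effectiveness
USD_PER_KWH = 0.10      # assumed electricity price
POWER_REDUCTION = 2.8   # "2.8x lower power" figure quoted in this article

legacy_cost = annual_energy_cost(IT_LOAD_KW, PUE, USD_PER_KWH)
efficient_cost = annual_energy_cost(IT_LOAD_KW / POWER_REDUCTION, PUE, USD_PER_KWH)
savings = legacy_cost - efficient_cost

print(f"legacy:    ${legacy_cost:,.0f} per year")
print(f"efficient: ${efficient_cost:,.0f} per year")
print(f"savings:   ${savings:,.0f} per year")
```

Swapping in a site's own load, PUE, and tariff gives a first-order estimate; real savings depend on workload mix and how cooling overhead changes with load.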

With the money not spent on delivering power to servers, the possibilities for business growth and increased profitability are numerous, including the ability to reinvest in new or expanded services to customers. Another possibility would be to double the benefit of lower energy consumption by giving back a portion of the savings to the community through green projects or by investing further in renewable energy. Regardless of how you choose to invest your savings, one thing is certain: cutting energy costs and consumption every year is the only path to a sustainable future.

#5 Contribute to a greener planet with a lower carbon footprint

Purchasing Renewable Energy Credits (RECs), improving data center Power Usage Effectiveness (PUE), and signing Power Purchase Agreements (PPAs) have become common practices among data center operators. Carbon offsetting and PUE advancements help data centers move toward more sustainable practices while bolstering Environmental, Social, and Governance (ESG) goals.

Although carbon offsetting and investments in carbon reduction help data centers meet both social and business goals, sustainable data center growth requires a focus on reducing power consumption at the server level.

Servers represent the largest opportunity to reduce overall energy consumption. Carbon neutrality in the data center can be realized through a combination of green energy generation and curbing energy consumption through servers that consume less energy—powered by Cloud Native Processors. Expending nearly a third of the power to accomplish the same amount of work illustrates how technology innovation at the core is clearing a path to net zero.
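Energy savings are commonly translated into carbon terms by multiplying by a grid emission factor and then dividing by a per-vehicle figure. The sketch below shows that conversion only; the saved energy, the emission factor, and the per-car value are assumed placeholders, so it does not reproduce the 39,035 cars figure quoted earlier.

```python
def co2_tonnes_avoided(kwh_saved: float, kg_co2_per_kwh: float) -> float:
    """Metric tons of CO2 avoided for a given energy saving."""
    return kwh_saved * kg_co2_per_kwh / 1000.0


def car_equivalents(tonnes_co2: float, tonnes_per_car_per_year: float) -> float:
    """Number of cars whose yearly emissions match the avoided CO2."""
    return tonnes_co2 / tonnes_per_car_per_year


# Illustrative inputs only (assumptions; real factors vary by grid and by year).
KWH_SAVED_PER_YEAR = 100_000_000
KG_CO2_PER_KWH = 0.4            # assumed grid emission factor
TONNES_PER_CAR_PER_YEAR = 4.6   # assumed typical passenger vehicle

tonnes = co2_tonnes_avoided(KWH_SAVED_PER_YEAR, KG_CO2_PER_KWH)
cars = car_equivalents(tonnes, TONNES_PER_CAR_PER_YEAR)

print(f"CO2 avoided: {tonnes:,.0f} metric tons per year")
print(f"Equivalent to about {cars:,.0f} cars off the road")
```

The result is highly sensitive to the emission factor, so a data center on a low-carbon grid will show a much smaller car-equivalent figure for the same energy saving.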

A new era of sustainable cloud computing has dawned. Modern cloud computing is here and the demands for compute power and performance will continue to put pressure on data center operators already stretched thin by many factors, including:

- Rising energy costs

- Limits on space for expansion

- Aging inefficient compute infrastructure

- Green energy conversion

- Growing competition

These challenges cannot be met by continuing to do what has been done for the past 20 years with x86 processors. Thankfully, there is an answer to data center sustainability and efficiency with Ampere’s Cloud Native Processors.

Built for sustainable cloud computing, Ampere’s first Cloud Native Processors deliver predictable high performance, platform scalability, and power efficiency unprecedented in the industry.

This blog is part of a series about data center efficiency.

- 3 Reasons Expensive Power is the Biggest Data Center Challenge

- How HPE and Ampere are Tripling Data Center Efficiency

- How to Shrink Your Data Center and Increase Capacity

- How Can Data Centers be More Sustainable?

Learn more about data center efficiency in our eBook

Triple Data Center Efficiency with Cloud Native Processors

Watch our webinar:

How to Triple Efficiency, Double Performance, and Save in Your Data Center

Ready to get started saving in your data center? Talk to our expert sales team about partnerships or get more information or trial access to Ampere Systems through our Developer Access Programs.

Footnotes

The web services study here is based on performance and power data for many typical workloads using single node performance comparisons measured and published by Ampere Computing.